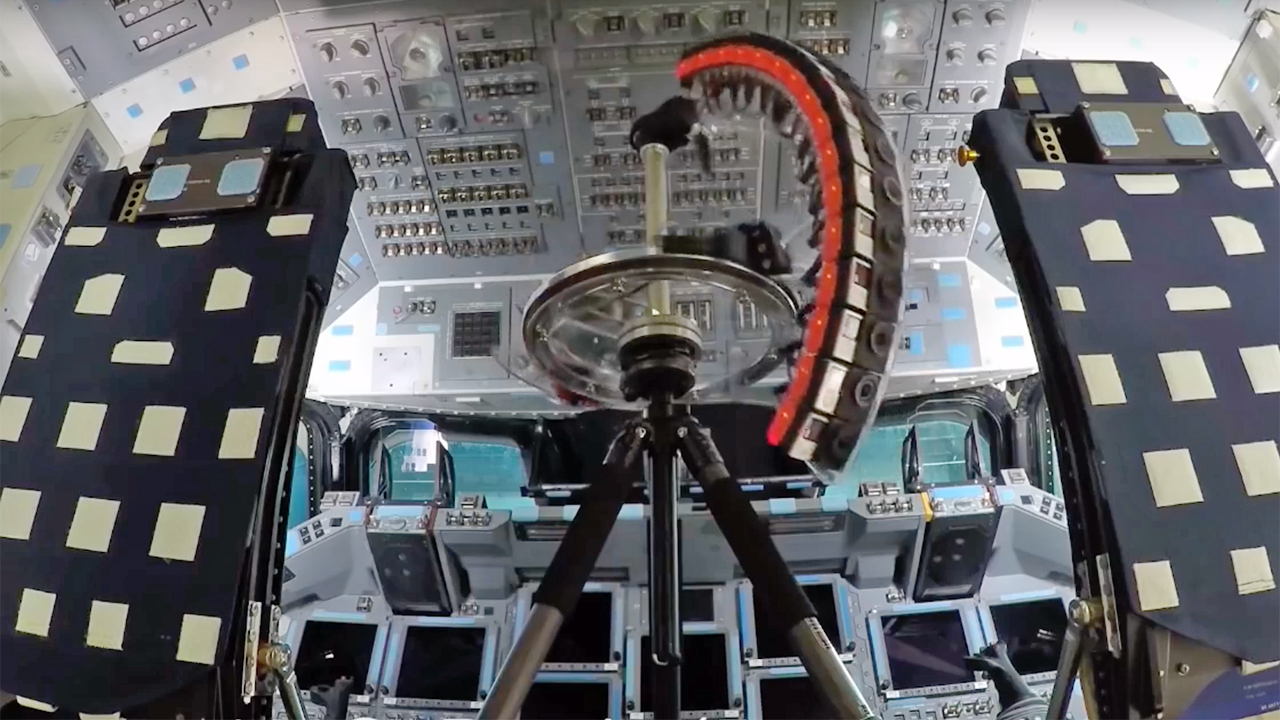

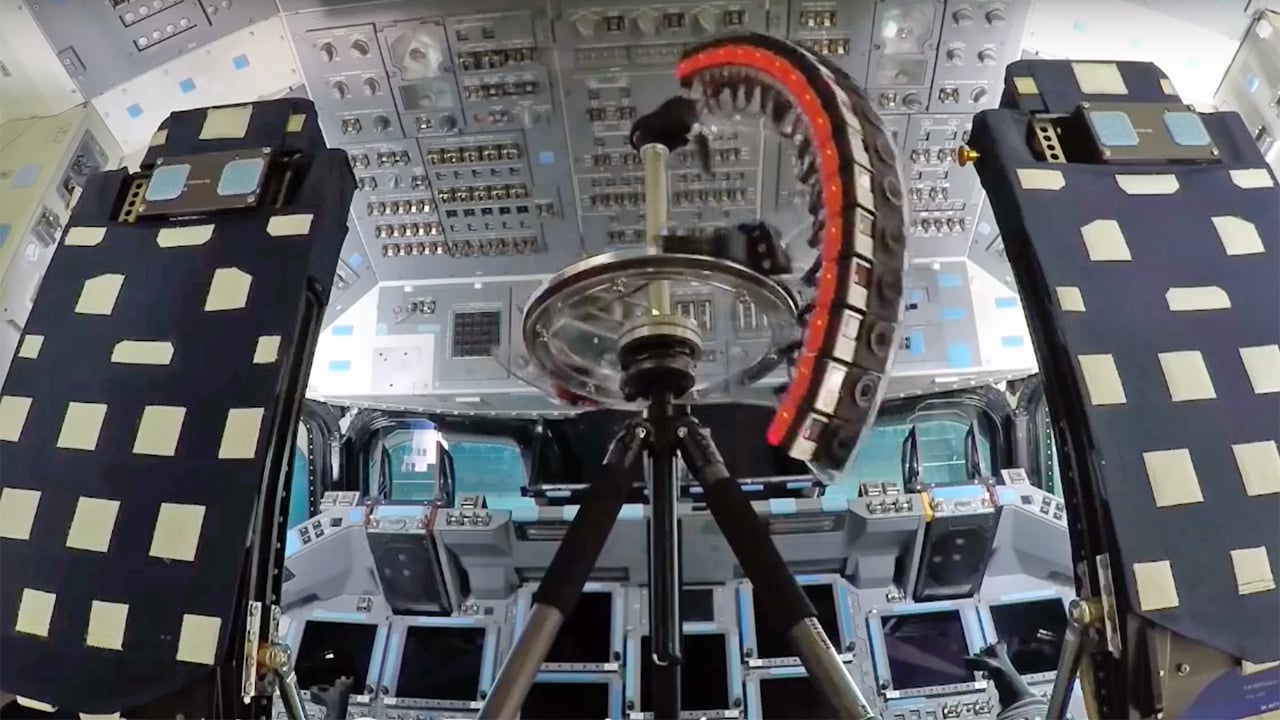

An array of GoPro cameras used to capture a lightfield based VR environment

While Lytro may be no more, lightfields are still a big and very useful field of research, and this latest system from Google aims to transform the way VR is captured.

Ordinarily, a simple 360-degree photograph for VR viewing wouldn't be particularly interesting. Various refinements have been made to the technique, including stereoscopy for something approaching depth perception, and a range of approaches to avoiding the warping and distortion of images that are wrapped into a sphere. In the end, though, it's still a fixed viewpoint, and, as with most implementations of stereoscopy to date, problems remain with warping and whenever the user's eyes aren't precisely level.

One solution comes from Paul Debevec, a name that crops up often in the world of computer graphics. Debevec is a scientist at Google and an adjunct research professor at the University of Southern California Institute for Creative Technologies. He first came to prominence in 1997 with The Campanile Movie, a thesis research piece that demonstrated photogrammetry, in which a three-dimensional model of a scene is created by automatically analysing two-dimensional photographs.

Twenty years later, photogrammetry is what makes Google Earth's 3D representations of major cities and landmarks possible. Repeated passes by camera aircraft capture not only an overhead view but also four oblique angles. With a series of photos taken on each pass and the spacing between passes controlled, the resulting images cover the entire city from at least five angles. A huge amount of post-processing is needed to remove distortions and correct tiny errors in the cameras' positions, but the result is enough for a reasonably accurate 3D reconstruction of the city. It isn't always perfect, and organic objects such as trees are particularly tough to get right, but the fact that we can fly around cities in Google Earth is down to photogrammetry. The principles of recovering 3D positions from a knowledge of perspective go back to the work of Leonardo da Vinci in the late 1490s, or at least to Guido Hauck in the 1880s, though Debevec can be credited with greatly popularising the idea.
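
In its simplest form, recovering a 3D position from perspective comes down to triangulation: if two cameras at known positions both see the same feature, the feature lies where their viewing rays meet. Here is a minimal Python sketch of midpoint triangulation, assuming the camera centres and ray directions are already known; in practice they have to be estimated and refined, which is much of what that post-processing is for. The function name is hypothetical, and this is a textbook illustration rather than Google Earth's actual pipeline.

```python
import numpy as np

def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate the 3D point seen along two camera rays.

    c1, c2: camera centres; d1, d2: ray directions from each camera
    towards the same feature. Returns the midpoint of the shortest
    segment joining the two rays (real, noisy rays rarely meet exactly).
    """
    c1, d1, c2, d2 = (np.asarray(v, dtype=float) for v in (c1, d1, c2, d2))
    u = d1 / np.linalg.norm(d1)
    v = d2 / np.linalg.norm(d2)
    w0 = c1 - c2
    # Minimise |(c1 + s*u) - (c2 + t*v)| over the ray parameters s and t.
    b = u @ v          # cosine of the angle between the (unit) rays
    d = u @ w0
    e = v @ w0
    denom = 1.0 - b * b
    if denom < 1e-12:
        raise ValueError("rays are parallel, so depth cannot be recovered")
    s = (b * e - d) / denom
    t = (e - b * d) / denom
    return ((c1 + s * u) + (c2 + t * v)) / 2.0

# Two cameras 2 units apart, both sighting the point (1, 2, 5):
print(triangulate_midpoint([0, 0, 0], [1, 2, 5], [2, 0, 0], [-1, 2, 5]))  # approximately [1, 2, 5]
```

The wider apart the two cameras are, the less a small error in either ray direction moves the estimated point, which is one reason aerial surveys combine overhead and oblique passes. Production systems typically solve for many points and camera poses jointly rather than one pair of rays at a time.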

Given Debevec's background and his recent position as vice-president of the entire ACM Siggraph organisation, it's no surprise to find him presenting at Siggraph 2018. Debevec took the stage with colleague Ryan Overbeck to discuss Welcome to Lightfields, which, perhaps unusually for Siggraph, is a piece of software that's already available on Steam. It's almost game-like, and you'll need a VR headset of some kind, since the purpose of the project is to extend the concept of lightfield arrays to create 360-degree environments with a full six degrees of freedom.

Lightfields

Debevec and Overbeck's work is essentially an implementation of the same sort of lightfield technology made famous by the German research institute Fraunhofer. Lightfields are a powerful technology, though so far they've mainly been proposed as an evolution of conventional film and TV camerawork. The ideas shown in the Siggraph presentation can be described simply: take a lightfield array and form it into a sphere with the cameras facing outward, and it becomes possible to calculate a point of view from anywhere inside that sphere. As with other lightfield-based systems, it's quite possible to calculate separate views for both eyes for stereoscopic display on a VR headset, and the user is free to roam anywhere inside the sphere.
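
Why a view can be calculated from anywhere inside the sphere can be sketched geometrically: a ray leaving the viewer's eye in any direction must cross the capture sphere at exactly one point, so the light travelling along that ray can be looked up from what was recorded near that point. The Python sketch below assumes a sphere of radius `radius` centred on the origin and a list of camera positions on its surface, and uses nearest-camera lookup, the crudest possible reconstruction. Real lightfield renderers blend several neighbouring cameras and pick the pixel matching the ray direction; nothing here is taken from Google's implementation, and the function names and the 64 mm default eye separation are hypothetical.

```python
import numpy as np

def exit_point(eye, direction, radius):
    """Point where a ray from `eye` leaves a capture sphere centred on the origin."""
    o = np.asarray(eye, dtype=float)
    d = np.asarray(direction, dtype=float)
    d = d / np.linalg.norm(d)
    # Solve |o + t*d|^2 = radius^2, i.e. t^2 + 2*b*t + c = 0.
    b = o @ d
    c = o @ o - radius ** 2
    if c > 0:
        raise ValueError("the eye must be inside the capture sphere")
    t = -b + np.sqrt(b * b - c)  # c <= 0, so this root is real and non-negative
    return o + t * d

def nearest_camera(point, camera_positions):
    """Index of the recorded camera position closest to `point`."""
    cams = np.asarray(camera_positions, dtype=float)
    return int(np.argmin(np.linalg.norm(cams - point, axis=1)))

def stereo_lookup(head, right, direction, radius, camera_positions, ipd=0.064):
    """For one viewing direction, choose the camera each eye samples from."""
    head = np.asarray(head, dtype=float)
    right = np.asarray(right, dtype=float)
    right = right / np.linalg.norm(right)
    choice = {}
    for eye_name, side in (("left", -1.0), ("right", 1.0)):
        # Offset each eye by half the interpupillary distance (metres) along `right`.
        eye = head + side * (ipd / 2.0) * right
        choice[eye_name] = nearest_camera(exit_point(eye, direction, radius), camera_positions)
    return choice
```

Running a lookup like this once per pixel for each eye is what lets a single capture serve both halves of a stereo pair, from any head position that stays inside the sphere.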

The actual apparatus used to capture these still VR environments is not a huge sphere of cameras. Instead, 16 GoPro cameras from a GoPro Odyssey rig, mounted on an almost semicircular arc, rotate about a vertical axis, recording a spherical lightfield of a location in less than a minute and a half. The team has also built a second rig using two Sony A6500 cameras which, as we might expect, does a rather better job, though it takes half an hour or so. The video is well worth a watch because it makes clear just how much better this technique works than the prior art. Many VR captures feel like sitting inside a texture-mapped sphere. With the A6500 rig, the option to shoot three-exposure HDR means that, as the viewer's point of view changes, light can glow through transparent objects in a way that's impossible, or at least very uncommon, with existing techniques.
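
Three-exposure HDR depends on merging bracketed shots into a single image with a wider range of brightness than any one exposure. A minimal Python sketch of the common weighted-average merge follows, assuming a linear sensor response and pixel values scaled to the range 0 to 1 (real cameras first need their response curve recovered, a problem Debevec himself worked on). The function name and the "hat" weighting are illustrative choices, not details from the Siggraph talk.

```python
import numpy as np

def merge_exposures(images, exposure_times):
    """Merge bracketed exposures into one relative-radiance image.

    Assumes a linear sensor response with pixel values in [0, 1].
    Each exposure estimates radiance as value / exposure time; estimates
    are weighted to trust mid-tones over near-black (noisy) or
    near-white (clipped) pixels.
    """
    images = [np.clip(np.asarray(img, dtype=float), 0.0, 1.0) for img in images]
    times = [float(t) for t in exposure_times]
    num = np.zeros_like(images[0])
    den = np.zeros_like(images[0])
    for z, t in zip(images, times):
        w = 1.0 - np.abs(2.0 * z - 1.0)  # 0 at black and white, 1 at mid-grey
        num += w * z / t
        den += w
    # Pixels black or clipped in every exposure get zero total weight;
    # fall back to the shortest exposure, the least likely to clip.
    shortest = int(np.argmin(times))
    fallback = images[shortest] / times[shortest]
    safe_den = np.where(den > 0, den, 1.0)
    return np.where(den > 0, num / safe_den, fallback)

scene = np.array([0.2, 2.0])                            # true relative radiance of two pixels
times = [0.5, 1.0, 2.0]                                 # three bracketed exposures
shots = [np.clip(scene * t, 0.0, 1.0) for t in times]   # simulated linear sensor
print(merge_exposures(shots, times))                    # approximately [0.2, 2.0]
```

The second pixel is clipped to white in all three simulated shots, so only the fallback recovers its brightness; with more bracketing, a short enough exposure would normally capture it unclipped.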

The obvious question is whether this can ever be made to work for moving images. Right at the end, some very early prototype moving-image material is shown, though Debevec and Overbeck repeatedly emphasise their commitment to doing this properly, at high frame rates and without artefacts, so they're understandably not keen to push something that isn't quite finished. Audience members asked about the possibility of moving from sphere to sphere, and therefore having complete freedom to roam within a space. Perhaps the next generation of video games could take place inside an almost photorealistic representation of a real space.

Try Welcome to Lightfields for yourself.

Maybe next year, eh?
