The choice of GPUs on the market is bewildering. Here's Phil Rhodes to help guide you to the other side of the forest.

Read parts 1, 2, and 3 of this series.

In 2019, most people are aware of the debt owed by filmmaking (and several other fields) to video games. Companies like Sega have been putting together massive PCBs full of silicon to render game graphics since the 80s, but it wasn’t until the era of home gaming, loosely called the sixth generation, that the potential of games hardware to do other useful work became obvious. Or, to be fair, when the PlayStation 2 was released, it was immediately pretty clear that the thing was, effectively, grading its own output.

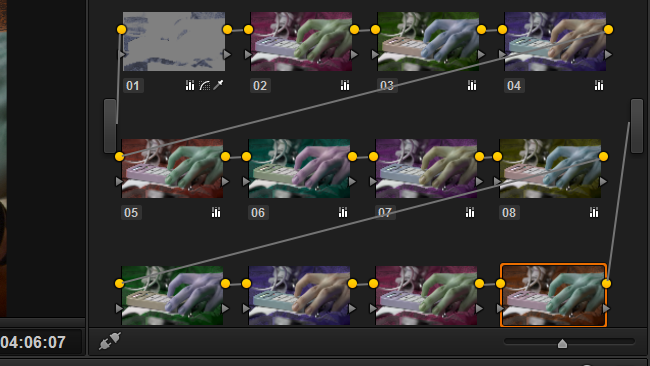

Resolve. If I had a picture of a PlayStation 2, I'd use it.

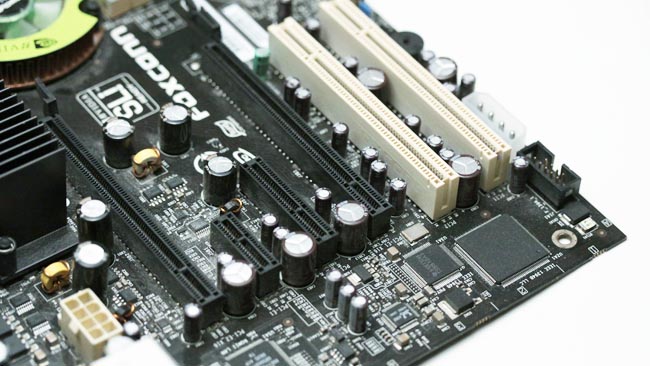

That is the genesis (no pun intended) of modern GPUs. Installing one in a modern workstation is easy: stick it into the first available slot nearest the CPU and connect any power cables that will fit.

Picking a product, however, is a bit more complicated.

This is the GTX 980 we reviewed years ago. Shockingly, it still does quite well, so the much more advanced 2080 is likely to be more than most people need

How many

A lot of workstation vendors like to sell dual-GPU setups with two big graphics cards – something like an Nvidia RTX 2080 or AMD Radeon RX 5700 (exactly which, if any, AMD GPU directly equates to any Nvidia GPU is a complicated question with no exact answer). It is true that many Resolve workstations do well with two GPUs, mainly because it can (slightly) improve performance to separate the GPU used for number crunching from the GPU used for generating the desktop display.

However, that doesn’t mean that a Resolve machine necessarily needs two identical GPUs. It’s often good to have a simple, less costly GPU to generate the desktop display and reserve a high-power one for number crunching – that one won’t be connected to a monitor; it’s just there to be a computing device. Beyond that, the usual reason to add more high-powered GPUs is that the workload demands more power, not that the GPU generating the screen display is under a huge load. That one is likely to be the least exercised.

Also, if we’re specifically thinking about Resolve, bear in mind the limitations on the number of GPUs that are supported by the different editions.

Generating a waveform display is surprisingly hard work given the conceptual simplicity of it

Pick a brand and a range

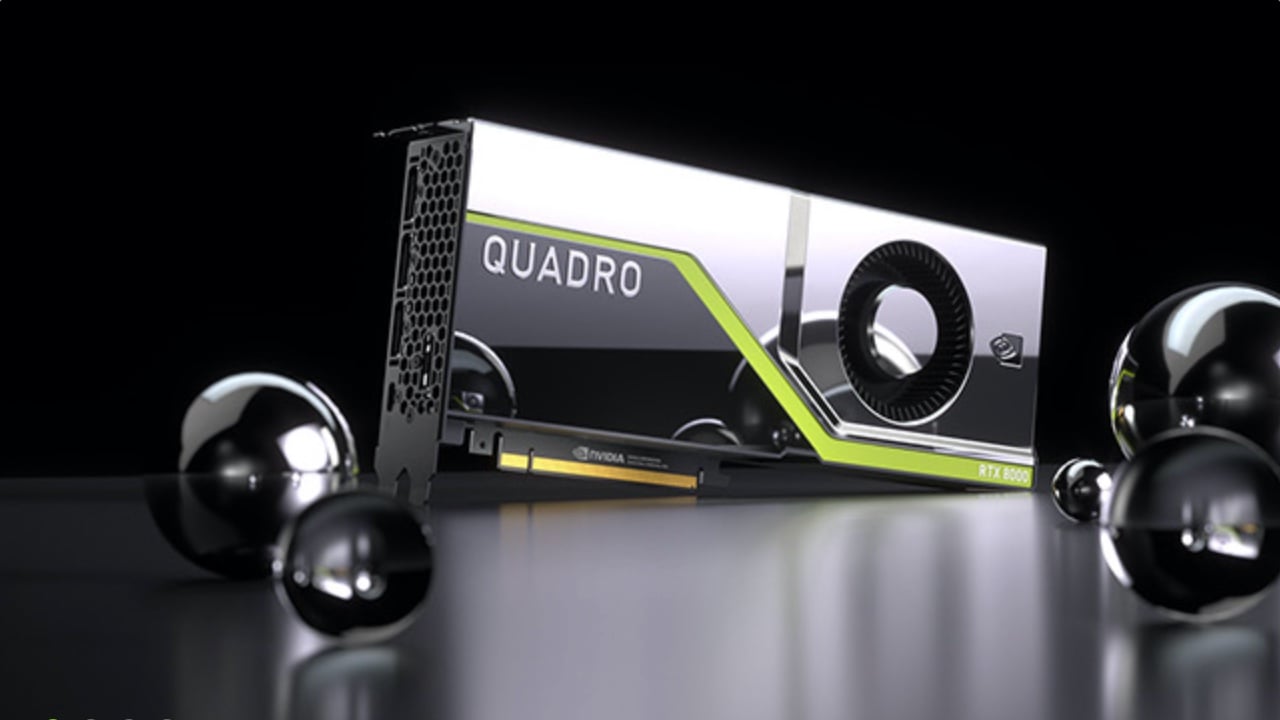

The second question is often which of the two major manufacturers, Nvidia and AMD, to go for. The reality is that both have products that are more than capable of supporting film and TV applications. Most system integrators offer Nvidia GPUs first (or in fact only), possibly because of Nvidia’s historical orientation toward professional and industrial applications. Both manufacturers have ranges marketed towards domestic users – Nvidia’s GTX and AMD’s Radeon series – and ranges aimed at the industrial and professional sectors: Nvidia’s Quadro and AMD’s Radeon Pro.

While the professional and domestic lines of each manufacturer tend to use the exact same core devices, it instinctively feels like a Quadro or Radeon Pro makes sense for colour grading. Particularly, these ranges are available with more onboard memory than the domestically oriented options. It’s rare to see domestic devices with more than 8GB of onboard memory, while a Quadro or Radeon Pro can have more than twice as much. The memory attached to a GPU is separate from the main system memory and is used by the GPU for the data it’s working on, which often amounts to several copies of various frames. Grading is hungry for GPU memory.
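To put rough numbers on that appetite, here is a back-of-envelope sketch. It assumes each working frame is held uncompressed as RGBA with a 32-bit float per channel; that format is an assumption for illustration, not something Blackmagic documents here, and the function names are made up for the example.

```python
# Rough estimate of GPU memory used by uncompressed working frames.
# Assumption (not taken from Resolve's documentation): frames are held
# as RGBA with one 32-bit float per channel.

BYTES_PER_CHANNEL = 4  # 32-bit float (assumed)
CHANNELS = 4           # RGBA (assumed)

RESOLUTIONS = {
    "HD": (1920, 1080),
    "UHD": (3840, 2160),
    "8K": (7680, 4320),
}


def frame_bytes(width, height):
    """Bytes needed for one uncompressed frame in the assumed format."""
    return width * height * CHANNELS * BYTES_PER_CHANNEL


def frames_that_fit(width, height, gpu_gib):
    """Whole frames that fit in gpu_gib GiB, ignoring every other use of memory."""
    return (gpu_gib * 1024**3) // frame_bytes(width, height)


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        mib = frame_bytes(w, h) / 1024**2
        print(f"{name}: {mib:.0f} MiB per frame, "
              f"{frames_that_fit(w, h, 8)} frames in 8 GiB, "
              f"{frames_that_fit(w, h, 16)} frames in 16 GiB")
```

Under those assumptions a single 8K frame is a little over 500 MiB, so an 8 GiB card holds around sixteen of them before anything else gets a look in. Real applications manage memory more cleverly than this, so treat it as a sense of scale rather than a sizing tool.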

If we’re not on an infinite budget, though, it’s worth bearing in mind that 8GB is often enough so long as we’re not endlessly grading eight layers of 8K 120p footage. And beyond memory, the professional ranges don’t offer much else that’s of use to us. They can do higher-precision internal calculations, render antialiased lines to make CAD applications prettier, and a few other small things. These abilities are useful to architects, scientists and structural engineers but are of little, if any, use to colour grading applications. GTX and Radeon are fine and are considerably less expensive than Quadro and Radeon Pro.

Some things, such as hue rotations, blur and noise reduction, are far more taxing than simple per-channel colour adjustments

Nvidia’s new tech, and AMD’s big memory

The other thing to be aware of in late 2019 is Nvidia’s RTX 2000 series, with the top-end RTX 2080 often considered for colour grading. The RTX cards include new technology for ray-traced graphics and artificial intelligence, as well as conventional GPU capabilities. The new technology is, again, barely used in colour grading, but Blackmagic has hinted that it might be at some point. An RTX 2000-series purchase, then, might be seen as a bit forward-looking, or it might be seen as future-proof, depending on one’s predilections.

Because of all this, a lot of RTX 2080s are being sold for workstations. An alternative at a broadly similar price is the Radeon VII. It’s not quite clear whether that’s a Roman numeral seven or not, or how long they’ll be available, but, crucially, the Radeon is available with 16GB of memory on board, which is extremely unusual for something priced at that level. Some benchmarks put the RTX a shade ahead in performance, though benchmarks are usually intended to establish performance in realtime 3D rendering and may not be a reliable measure of GPU computing power as it would apply to post production work.

It’s complicated

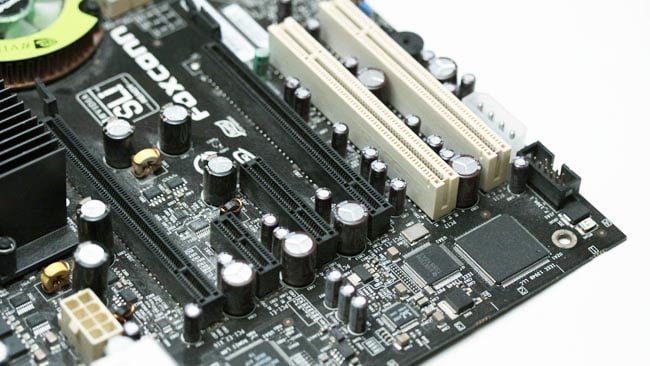

Remember this motherboard - the slot on the left, nearest the CPU, is where the first GPU goes. The second GPU goes in the other long black slot but will be slower on domestic motherboards

Of all the components I’ll discuss in this workstation series, GPUs are the hardest to really assess and most likely to change quickly. One of the subtler things that I touched on in the discussion of motherboards is the interface standard: both the RTX 2080 and Radeon VII use revision 3 PCI Express slots, as do many current and recent GPUs. Some of AMD’s more recent cards, such as the Radeon RX 5700, support PCIe 4.0, which was first supported by AMD’s CPUs. Again, the bandwidths are so shockingly high that the step from PCIe 3 to PCIe 4 isn’t likely to matter much, but PCIe 4.0 might feel more future-proof.
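For a sense of just how high, the sketch below works out the theoretical one-way bandwidth of a 16-lane link from the published per-lane line rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0, both with 128b/130b encoding). The frame format, 16 bytes per pixel for float RGBA, is an assumption for illustration, and real-world throughput will be lower once protocol overhead is counted.

```python
# Approximate theoretical bandwidth of a PCI Express link, one direction.
# Line rates and 128b/130b encoding come from the PCIe 3.0 and 4.0 specs;
# achievable throughput in practice is lower because of protocol overhead.

LINE_RATE_GT_S = {"PCIe 3.0": 8, "PCIe 4.0": 16}  # gigatransfers/s per lane
ENCODING = 128 / 130  # payload bits per transferred bit
LANES = 16            # a full-length x16 slot


def link_gb_per_s(generation, lanes=LANES):
    """Theoretical GB/s (10^9 bytes per second) in one direction."""
    return LINE_RATE_GT_S[generation] * ENCODING * lanes / 8


def frames_per_second(generation, width, height, bytes_per_pixel=16, lanes=LANES):
    """Uncompressed frames/s the link could carry.

    bytes_per_pixel=16 assumes 32-bit float RGBA, which is illustrative only.
    """
    return link_gb_per_s(generation, lanes) * 1e9 / (width * height * bytes_per_pixel)


if __name__ == "__main__":
    for gen in LINE_RATE_GT_S:
        print(f"{gen} x16: {link_gb_per_s(gen):.2f} GB/s, "
              f"about {frames_per_second(gen, 3840, 2160):.0f} UHD frames/s")
```

Passing `lanes=8` or `lanes=4` gives a feel for the second slot on a domestic motherboard, which often runs with fewer lanes than the first.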

Let’s recap. We need to choose how many cards we want – probably one big one and one small one. We need to choose which manufacturer to go for (flip a coin) and how much memory we want (as much as possible). The value of Quadro and Radeon Pro cards is questionable for our application. And finally, if in doubt, in September 2019, buy an Nvidia RTX 2080, which is likely to provide enough grunt for even quite demanding workflows. As in so much of modern computing, we are in the promised land where more or less everything is pretty good and arguing over the last five per cent can waste more money than it saves in render time.
