Metadata was to be one of the big time savers of video and film production. But how effectively it works depends on how good the data that went into it was in the first place. Enter machine learning and AI, which could eventually provide the ultimate in fast and fluid searches for video.

At any exhibition of technology in late 2019, one exhibitor in five will be claiming some sort of association with artificial intelligence. Some of these products will be associated with AI only by the very narrowest of definitions, such is the power of the term to excite people. The term is only powerful, though, precisely because genuine AI really can do so much. Anyone who’s played with a Google Photos account recently, and marveled at the ability of the system to identify the contents of one’s casual snapshots, can’t fail to have been impressed.

And yes, this tech is beginning to find use in film and TV work, particularly as a way to make material easier to search. Iron Mountain is a very literally-named company in that it provides long-term, highly-secure storage in an old limestone quarry. The quarry exists as a winding series of mineshafts driven into the titular mountain somewhere in the central states of the USA, and is patrolled by armed guards. It’s the sort of place you leave something if you want to be more than reasonably sure that it’ll be there when you go back for it. Worked-out salt mines were traditionally used for the storage of 35mm film negatives, to the point where “the salt mines” became a film industry euphemism for somewhere things go for a long time. It’s somehow reassuring to discover that very similar things exist to this day.

The metadata conundrum

But Iron Mountain, like a lot of other companies, has realised that in the modern, bleeding-sky, blue-edge world of mediated enablism, archive has value. The problem with time-based media (sound, video) is that until now it has been exceptionally difficult to search based on a written description of what’s required.

Occasionally, someone will have added written metadata when the material was archived, though given the extreme ingenerosity of 2019 postproduction schedules, that’s likely to have been done very quickly if at all. And even if it has been done, the database can be of dubious quality. If someone wants footage of a red guitar, it’s going to be difficult to find any footage of a red guitar that we might have if the material is tagged “Roy Orbison,” regardless of whether said songster is actually wielding a suitably-coloured instrument in the footage we happen to have.

Computers, or more specifically AIs, ought not to make these sorts of mistakes; it ought to be possible to have the machine characterise and annotate everything that appears in a piece of footage.
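To make the difference concrete, here is a minimal sketch of a tag search in Python. The clip ID, the tag sets and the `search` function are all invented for illustration and do not reflect how any real archive system stores or queries its metadata. It simply shows why a clip tagged only with a performer's name is invisible to a search for an object, while a clip annotated with everything in frame is not.

```python
# Hypothetical archive data: clip IDs mapped to sets of tags.
# The hand-tagged entry records only who is in the clip.
hand_tagged = {
    "clip_001": {"roy orbison"},
}

# The machine-annotated entry lists everything that was recognised in frame.
# These labels are assumptions made up for this example.
machine_tagged = {
    "clip_001": {"roy orbison", "red guitar", "poster", "stage"},
}


def search(archive, query):
    """Return the sorted IDs of clips whose tag set contains the query."""
    wanted = query.strip().lower()
    return sorted(clip for clip, tags in archive.items() if wanted in tags)


print(search(hand_tagged, "red guitar"))     # []
print(search(machine_tagged, "red guitar"))  # ['clip_001']
```

The footage is the same in both cases; only the richness of the description changes what a search can find.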

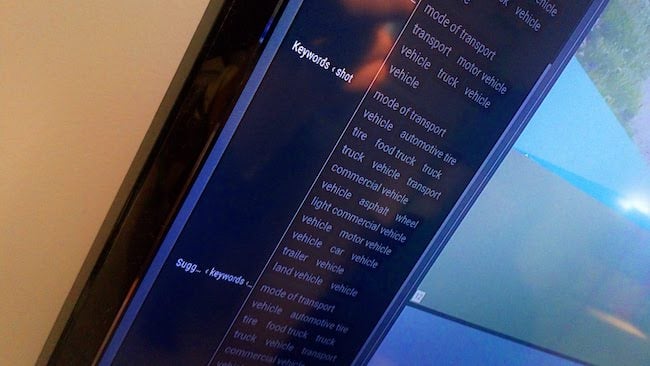

Iron Mountain uses Google’s AI base to do this, and it seems, based on test footage chosen by the company, to do a reasonable job. Its interface provides reasonably low-level, granular access to the information generated by Google’s bot, and it will not only return search results for Roy as “Orbison,” with a “red guitar,” but also “poster,” “stage,” and “person in the front row eating a hot dog.”

Clever.

The dark side

There is, however, a darker side to all this. AIs need training and nobody has access to more training data than Google. We’ve talked about how neural networks operate before, and it’ll be clear to anyone with even a basic grounding in the technology that many types of AI are a black box. Taking a neural network as an example, it’s defined by a very large number of weighted interconnections between layers of nodes. The weights of those interconnections are established by exposing the network to a large amount of training data that we already know about, and adjusting the weights until the network reaches the right answer. Apply the results to unknown data, and it should still reach the right answer.
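The training process described above can be sketched in a few lines of Python. This is a deliberately tiny illustration, not how Google or anyone else builds a production system: it uses a single artificial neuron rather than layers of them, and the data, the learning rate and the function names are all assumptions chosen for the example. The task is to learn, from eight labelled points, whether two numbers add up to more than one.

```python
import math
import random


def sigmoid(z):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, point):
    """Weighted sum of the inputs, passed through the sigmoid."""
    z = sum(w * x for w, x in zip(weights, point)) + bias
    return sigmoid(z)


def train(examples, epochs=2000, rate=0.5, seed=0):
    """Nudge the weights after every example to shrink the error."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    bias = 0.0
    for _ in range(epochs):
        for point, target in examples:
            error = predict(weights, bias, point) - target
            weights = [w - rate * error * x for w, x in zip(weights, point)]
            bias -= rate * error
    return weights, bias


# Training data we already know the answers to (made up for this sketch):
# the label is 1 when x + y is greater than 1, otherwise 0.
training = [
    ((0.0, 0.0), 0), ((0.2, 0.3), 0), ((0.4, 0.4), 0), ((0.1, 0.7), 0),
    ((0.9, 0.8), 1), ((0.6, 0.7), 1), ((1.0, 0.3), 1), ((0.3, 0.9), 1),
]

weights, bias = train(training)

# Points the neuron has never seen during training.
print(predict(weights, bias, (0.1, 0.2)) > 0.5)  # expected to be False
print(predict(weights, bias, (0.8, 0.7)) > 0.5)  # expected to be True
print(weights, bias)  # the learned numbers themselves
```

Printing `weights` and `bias` at the end shows all that training actually produces: a handful of numbers. With three of them it is still possible to reason about what the neuron has learned; a real network has millions.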

It is a hugely powerful technique, but the result is a vast maze of interconnected nodes. Looking at the weights assigned to each of the connections reveals something that looks a lot like random noise. The result is that a neural network, or many other kinds of AI, may give a good answer or occasionally a bad one, but it is effectively impossible to find out why it gave any particular answer. Like a real intelligence, a human being, it is the sum of its experience - its training data - and beyond that, its thought processes are fairly impenetrable.

Care needs to be taken

Much advanced research is being aimed at analysing artificial intelligence, but until that research bears fruit, there’s a need for significant care. Like an inexperienced human - a child - an AI might make embarrassing mistakes. AIs have already made embarrassing mistakes by confusing humans and animals. It’s not an easy problem to solve, because the reasonableness of some requests depends on the reason for making them. A human might treat a search for the term “asylum seeker” rather differently if the request came from a right-wing political group as opposed to a charitable organisation dedicated to assisting asylum seekers, depending, of course, on that person’s own politics.

AI works hard enough just to identify subjects in images; asking it to understand the socio-political background of those images, let alone arrive at a politics that the person in charge subjectively likes, is a whole new level of complexity. We raise humans for eighteen years or more before they’re trusted to make that sort of decision, and even then, it’s a minefield.

Google, of course, and Iron Mountain by association, is not blind to these issues, and Google’s AI has been carefully guarded against the most obvious problems and abuses. In conversation, Iron Mountain gave examples of searches it had been asked to perform that it had refused to do on ethical grounds. Still, it’s difficult to circumscribe every possible problem question or foresee every mis-categorisation by the AI.

The technology works well - it’s certainly useful and important enough to tolerate the problems we’re talking about, which are pretty rare in practice. Still, for the time being, it’s probably best to think of an AI in the same way we’d think of a well-trained dog - useful, faithful, and trying to please, but not something to leave in charge of the house overnight.
