Recent progress in artificial intelligence has put the technology at the top of the media hype cycle. Its new cognitive abilities and greater capacity to handle complex tasks could greatly benefit video production. However, like any technology, AI has limits: its lack of empathy and common sense stands in the way of a fully autonomous method of creating video content.

Science fiction has planted in our collective subconscious the fear that AI could take over humankind. We are not in immediate danger, but current algorithms and robots raise a question: what can we do that AI cannot? In companies, the answer is a Stakhanovite computer carrying out processes previously managed by humans, with no distinction between white-collar and blue-collar jobs.

From tech giants to startups, many firms are turning to these promising technologies. Audiovisual production is one industry among others with its own creation process: write, film, edit and broadcast. AI can play a role at each step. Technically, what is new?

Scholars and engineers have taught computers how to learn; that's it. Computers still cannot learn entirely by themselves, because they need human supervision. By combining machine learning techniques with gigantic databases ("Big Data"), computers can detect patterns in a mass of data that even a copyist monk couldn't find in a lifetime. Above all, deep learning marked a new milestone for computer vision, giving computers the ability to "understand" digital images by relying mostly on pixel patterns.

AI can therefore detect, recognize, categorize and describe people and objects. The most obvious applications are in post-production, where files are increasingly stored in the cloud and easily manipulated. Computer vision may reduce the time spent on chores like archiving, tagging or searching for images.

Rushes are now semantic objects that can be analyzed and searched with filters: visible people and objects, displayed emotions and of course spoken words, but also what takes place in the scene. Google published the AVA dataset, which stands out for its granularity. Built from YouTube videos, AVA covers 80 "atomic" visual actions such as riding a bike, shaking hands, kissing someone or playing with kids. Anyone who has had to binge-watch hours of rushes to find the perfect shot will understand what a game changer this is.
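To make "searching rushes with filters" concrete, here is a minimal sketch. It assumes an upstream vision and speech model has already tagged each shot with people, objects, actions and a transcript; the `Shot` record, the `search` function and the label strings (loosely AVA-style) are all hypothetical illustrations, not part of any real product.

```python
from dataclasses import dataclass, field


@dataclass
class Shot:
    """One clip from a rush; labels are assumed to come from an upstream model."""
    clip: str        # hypothetical file name
    start_s: float   # start time in seconds
    end_s: float     # end time in seconds
    people: set[str] = field(default_factory=set)
    objects: set[str] = field(default_factory=set)
    actions: set[str] = field(default_factory=set)  # hypothetical AVA-style labels
    transcript: str = ""


def search(shots, *, people=(), objects=(), actions=(), words=()):
    """Return the shots that contain every requested person, object, action and word."""
    results = []
    for s in shots:
        text = s.transcript.lower()
        if (set(people) <= s.people
                and set(objects) <= s.objects
                and set(actions) <= s.actions
                and all(w.lower() in text for w in words)):
            results.append(s)
    return results


# Hypothetical tagged rushes.
rushes = [
    Shot("day1_cam_a.mov", 12.0, 18.5, people={"host"}, objects={"bike"},
         actions={"ride"}, transcript="Let's go for a ride"),
    Shot("day1_cam_b.mov", 40.0, 44.0, people={"host", "guest"},
         actions={"hand shake"}, transcript="Welcome to the show"),
]
for shot in search(rushes, actions=["hand shake"]):
    print(shot.clip, shot.start_s, shot.end_s)
```

The hard part, producing reliable tags, is delegated to the model; once tags exist, finding "the host shaking hands" becomes a set-inclusion query rather than hours of viewing.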

What happens if there's no reference?

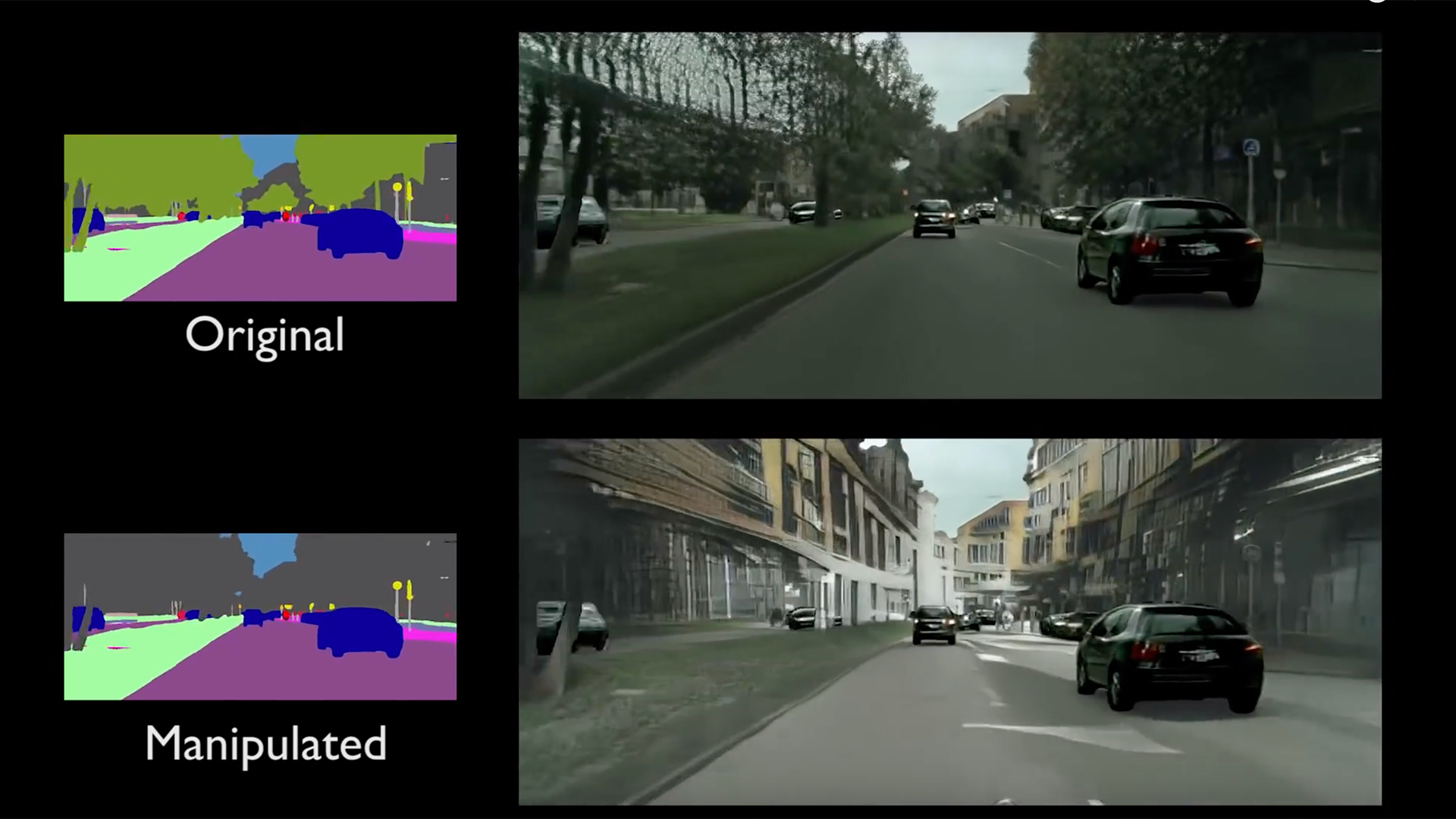

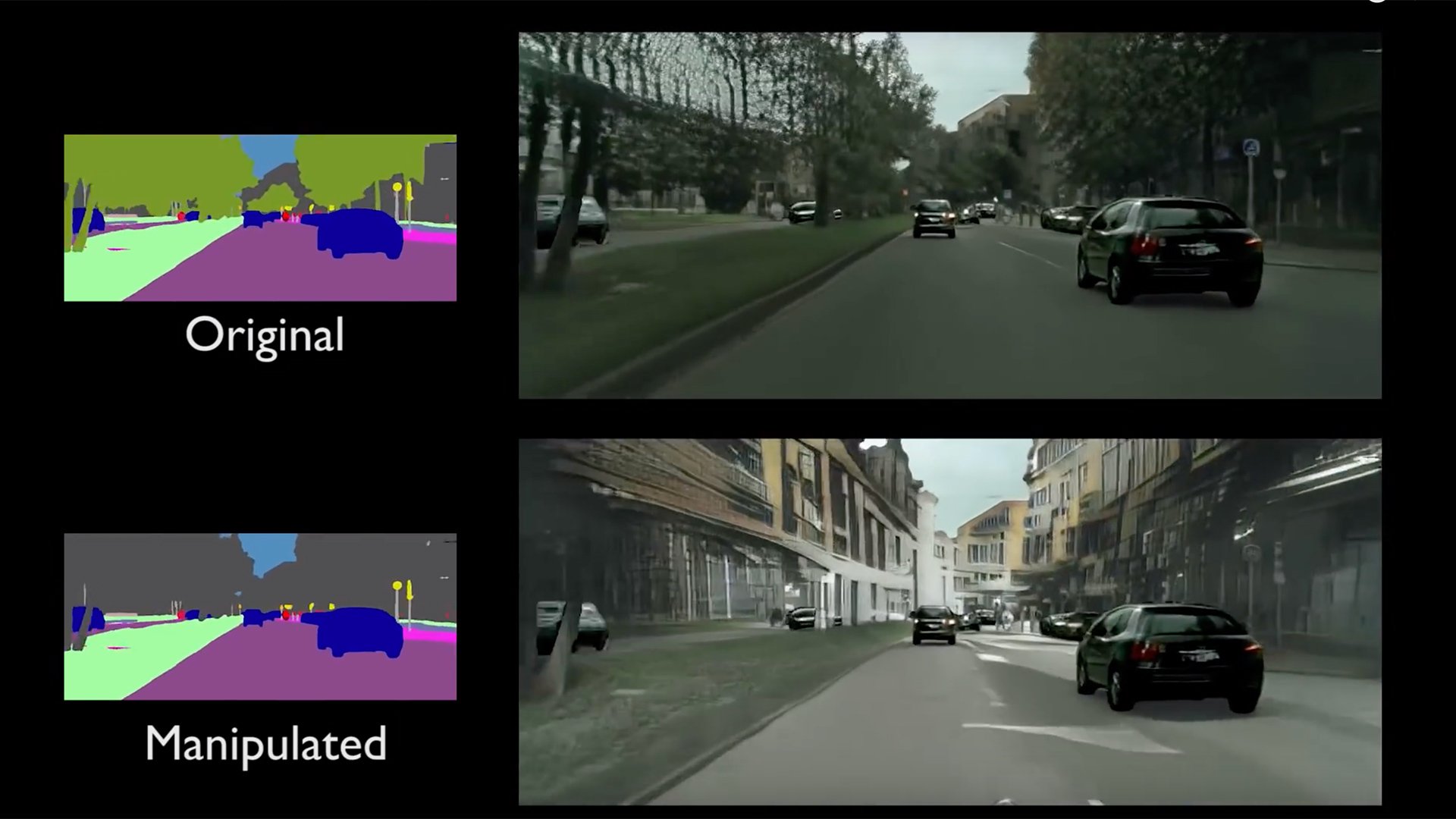

If an image has not been shot yet, it may soon be possible to create it. AI systems known as generative adversarial networks (GANs) can now assemble pixels into a face as human-looking as yours. For now, they are limited to still images at standard resolution. In my opinion, in the medium term, this could challenge the need to film at all. Why shoot scenes and retakes if you could generate them on a computer and modify them at will? NVIDIA engineers can already map scenes and change elements such as the background of a shot. AI can also produce artistic forms, as DeepMind's pictures show. You may or may not want to hang one in your living room, but it still "looks like" something created by a person. However, such works fall short if you look for an inner meaning.
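For readers curious about what "putting pixels together" involves: a GAN pits two networks against each other, a generator $G$ that turns random noise $z$ into an image and a discriminator $D$ that estimates the probability that an image is real. In the original GAN formulation (Goodfellow et al., 2014) they play the minimax game below; this is the textbook objective, not necessarily the exact one used by the systems mentioned above.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

$D$ is rewarded for scoring real images close to 1 and generated ones close to 0; $G$ is rewarded when $D(G(z))$ approaches 1, that is, when its images become hard to tell apart from real ones.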

Most AI relies on combinatorial or mimetic approaches: it works by joining pieces together or by replicating structures it perceives in data. This is effective for creating music or graphics, but much less so for screenwriting, as the short film "Sunspring" demonstrates. Fed hundreds of sci-fi scripts, an AI wrote a screenplay that was then filmed. The gibberish result is entertaining and reveals another limitation: combining data (words, in this case) does not make a story, much as in the infinite monkey theorem.
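A word-level Markov chain is perhaps the simplest illustration of a combinatorial approach. It is far cruder than the neural network behind "Sunspring", and the tiny corpus below is made up, but it shows the principle: the generator only recombines word transitions it has observed, with no notion of meaning or plot. `build_chain` and `generate` are illustrative names, not a real library.

```python
import random
from collections import defaultdict


def build_chain(text, order=1):
    """Map each run of `order` words to the list of words observed right after it."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        key = tuple(words[i:i + order])
        chain[key].append(words[i + order])
    return chain


def generate(chain, length=12, seed=None):
    """Chain observed transitions together; nothing here models meaning."""
    rng = random.Random(seed)
    key = rng.choice(list(chain.keys()))
    output = list(key)
    for _ in range(length - len(key)):
        followers = chain.get(tuple(output[-len(key):]))
        if not followers:  # dead end: no observed continuation
            break
        output.append(rng.choice(followers))
    return " ".join(output)


# Made-up miniature "script" corpus.
corpus = ("the ship leaves the station . the captain leaves the ship . "
          "the station is dark . the captain is alone .")
chain = build_chain(corpus, order=1)
print(generate(chain, length=12, seed=7))
```

Every pair of adjacent words in the output appeared somewhere in the corpus, so the result is locally plausible, yet nothing guarantees it tells a story.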

Of course, "Sunspring" would not have looked like a Monty Python skit if its "authorobot" had had the intelligence to understand the nonsense it wrote. Machines lack the common sense needed to understand that, for instance, in "Wallace and Gromit enter the room", Gromit is a dog. A machine could, of course, be taught to tell human names from pet names. But what use is an AI that you have to teach everything? "AI does not exist," claims French mathematician Luc Julia (the "grandfather" of the virtual assistant Siri). "You have to show a thousand cat pictures to a machine before it starts recognizing one. A kid needs one time." This human-like level of understanding, called artificial general intelligence, remains a goal far from being achieved.

Filming automation

Filming is a domain where automation is already under way. In my view, remotely controlled cameras are a prelude to self-operating ones, at least in TV studios. A good example is the robotic arm from the Dutch firm MrMocco, which frames and follows any person in the camera's range using the torso and limbs as beacons. Similar framing capabilities are available in Ed, a BBC recording system that won an award at IBC 2018. Ed can also direct a program, its creators say in a paper that is well worth reading.
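How a camera might "use the torso and limbs as beacons" can be sketched as follows. This is a hypothetical, generic approach, not MrMocco's or the BBC's actual method: it assumes a pose estimator already returns body keypoints in pixels, computes a crop of fixed aspect ratio around them with some margin, keeps it inside the frame, and smooths successive crops so the virtual camera glides instead of jumping. `Box`, `frame_person` and `smooth` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Box:
    x: float  # left edge, pixels
    y: float  # top edge, pixels
    w: float  # width, pixels
    h: float  # height, pixels


def frame_person(keypoints, frame_w, frame_h, aspect=16 / 9, margin=0.25):
    """Crop containing all body keypoints plus a margin, at a fixed aspect ratio.

    `keypoints` is a list of (x, y) pixel positions (shoulders, hips, wrists, ...)
    assumed to come from some pose estimator.
    """
    xs = [p[0] for p in keypoints]
    ys = [p[1] for p in keypoints]
    cx, cy = (min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2
    # Body height plus margin, widened if needed to fit the body's width.
    h = (max(ys) - min(ys)) * (1 + 2 * margin)
    w = max(h * aspect, (max(xs) - min(xs)) * (1 + 2 * margin))
    h = w / aspect
    # Never crop larger than the frame itself.
    if w > frame_w:
        w, h = frame_w, frame_w / aspect
    if h > frame_h:
        h, w = frame_h, frame_h * aspect
    # Centre on the body, then clamp the box inside the frame.
    x = min(max(cx - w / 2, 0), frame_w - w)
    y = min(max(cy - h / 2, 0), frame_h - h)
    return Box(x, y, w, h)


def smooth(prev, new, alpha=0.2):
    """Exponential smoothing: move a fraction `alpha` of the way toward the new crop."""
    old = (prev.x, prev.y, prev.w, prev.h)
    target = (new.x, new.y, new.w, new.h)
    return Box(*(p + alpha * (n - p) for p, n in zip(old, target)))
```

Calling `frame_person` on every frame and feeding the result through `smooth` gives a simple follow-cam; the `alpha` parameter trades responsiveness against steadiness.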

Even if these solutions are appealing, one must not get too excited. The BBC's R&D team writes that Ed was developed for live panel coverage and was not expected to perform well on other types of production, such as a concert. Put another way, AI is only as smart as the data it has been trained on. Live TV specialists may be concerned, since AI may not react well to unexpected events during a broadcast: yet another hazard to manage! That is why the designers ensured that a human can take back control at any time. It also means that before AI can take your job, it must be specifically trained for it, which requires lots of data.

Secondly, visually based fields require editorial and artistic sense. As the Beeb's R&D team puts it, "Production is at least as much Art as Science". AI can be trained to imitate, as some systems have been trained to paint like Van Gogh, but can it actually innovate as he did? It is important to remember that AI creates things out of pre-existing data and is therefore limited by it. My thought is that automation could lead to a kind of standardization of artistic choices.

Editing

The point above also applies to film editing. Simple videos can easily be edited automatically. Wibbitz, an Israeli startup, sells a solution based on text analysis: by matching news wires with video databases, it clips relevant rushes to create a subtitled video. Things get more complicated when the editing is sophisticated. Why? Because circuit boards lack empathy. IBM's AI, Watson, can analyze five distinct emotions. After proper training, it selected the shots to be edited for the "Morgan" trailer. It did so by relying on emotion spikes, but in the end the trailer was edited by a human. Movies need to combine emotions to tell a story, and for the moment humans are much better at this. This field of AI, known as affective computing, is still young in academia.
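Selecting shots "by relying on emotion spikes" can be sketched as peak detection over per-shot emotion scores. This is not IBM's actual pipeline, which the article does not describe: it assumes some classifier has already scored each shot for a few emotions in [0, 1], collapses those scores to one intensity value, and keeps shots that are local peaks above a threshold. `intensity`, `pick_spikes` and the sample data are hypothetical.

```python
def intensity(emotions):
    """Collapse per-emotion scores (e.g. {"fear": 0.8, "joy": 0.1}) to one value."""
    return max(emotions.values(), default=0.0)


def pick_spikes(shots, threshold=0.6, top_k=None):
    """Return ids of shots whose intensity is a local peak at or above `threshold`.

    `shots` is a list of (shot_id, emotions) pairs in timeline order.
    """
    values = [intensity(e) for _, e in shots]
    picks = []
    for i, (shot_id, _) in enumerate(shots):
        left = values[i - 1] if i > 0 else float("-inf")
        right = values[i + 1] if i + 1 < len(values) else float("-inf")
        if values[i] >= threshold and values[i] > left and values[i] >= right:
            picks.append((i, shot_id))
    if top_k is not None:
        # Keep the strongest spikes, then restore timeline order (sort by index).
        picks = sorted(sorted(picks, key=lambda p: values[p[0]], reverse=True)[:top_k])
    return [shot_id for _, shot_id in picks]


# Hypothetical classifier output for five consecutive shots.
shots = [
    ("s1", {"fear": 0.2, "joy": 0.1}),
    ("s2", {"fear": 0.9}),
    ("s3", {"fear": 0.4}),
    ("s4", {"sadness": 0.7}),
    ("s5", {"joy": 0.65}),
]
print(pick_spikes(shots))           # ['s2', 's4']
print(pick_spikes(shots, top_k=1))  # ['s2']
```

Such a filter can shortlist candidate shots, but it says nothing about how emotions should follow one another to tell a story, which is exactly the part that stayed with the human editor.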

So-called artificial intelligence is limited by its lack of fundamental human skills. That is why I think it would be better to call AI a "cobot": firstly because the word can mean "collaborative robot", which leaves professionals in control, and secondly because it could also mean "cognitive robot", a more accurate description of current AI capabilities.

The audiovisual industry produces information, entertainment and art, human domains that we should not outsource to machines so lightly. After all, the word "machine" comes from the Greek for "stratagem". The human hands that conceived AI are prone to errors, bias and failures, a fact we tend to forget, reassured by the scientific, calm and neutral appearance of computers.
