Replay: This was first published in 2013, but the discussion about sensor technology is as relevant as ever. Just how can sensors improve further?

Recently I've been expressing the view that all cameras are pretty much the same, because they all use similar technology (give or take) for their sensors. I don't mean to be unnecessarily cruel about the work of camera manufacturers; taking an electronic component such as an imaging sensor and making it into a usable tool is far from trivial. Still, the absolute performance of a camera is determined by what you can get off the lump of silicon behind the lens.

According to some people, though, creating sensors with ever larger numbers of pixels is also becoming somewhat commoditised.

I first encountered this opinion in a discussion about Red's upcoming Dragon sensor, in which someone offered, for $2.5M, to produce a sensor of equivalent performance in a year and a half, even adding global shuttering. The brave soul making this claim was David Gilblom of Alternative Vision Corporation, based in Tucson, Arizona, a company specialising in imaging sensors. Among many other things, they're the reseller for the well-known Foveon X3 sensor, which avoids the problems of Bayer-filtered sensors by stacking red, green and blue photodiodes one atop the other, but which isn't seen much in the world of film and TV.

A bold claim

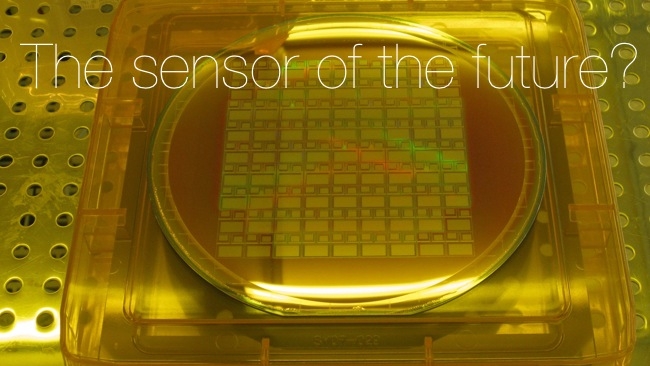

Supporting this bold claim is a new technique for manufacturing imaging sensors which allows the light-sensitive and processing parts to be treated separately. Developer Lumiense Photonics calls it SiLM, for silicon film (without, I suspect, any intention of comparing it to the photochemical imaging technology). The aim of SiLM is to “remove most of the need to make compromises among the various important imaging performance parameters, [allowing] them to be optimised nearly independently.”

In plain English, and without getting too deep into the details of microchip manufacturing, the crucial advance is that this new approach allows the array of photodiodes that forms the pixels to be stacked on top of a completely separate device, or perhaps more than one. This technique is distinct from Foveon's, and would still require a Bayer filter, but there are significant advantages in separating the light-sensitive photodiodes from the controlling electronics, providing, as Gilblom says, “greatly improved linear dynamic range and elimination of motion artefacts”.

Stacking

Current approaches to stacking semiconductor devices such as memory lack the fine interconnect pitch needed to connect so many tiny pixels to control electronics beneath them, so the electronics have to share a layer with the light-sensitive devices. As a result, not all of the surface of a current CMOS sensor can be light sensitive; part of it must be given over to control electronics, and the pixels do not meet edge to edge. In the terminology of the field, the fill factor is less than 100%. This exacerbates aliasing and shrinks the light-sensitive area of each pixel, reducing dynamic range and sensitivity.

It also suggests one reason many modern cameras lack a true global shutter: the switching transistor required in each pixel consumes still more area on the silicon and reduces performance further. Even then, the shutter control transistor is itself somewhat light sensitive, as are all silicon semiconductors, so the ratio of sensitivity with the shutter open to sensitivity with it closed may be only about 50,000 to 1. The shutter is, in effect, slightly transparent, and a bright sun might be able to punch through in a way that would be impossible with a mechanical shutter.
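To put these penalties on a photographer's scale, the short Python sketch below converts them into stops (powers of two). The 50,000:1 shutter ratio comes from the text, and the ten-million-to-one figure is the one claimed for the shielded, stacked design; the 50% fill factor is a hypothetical value chosen only for illustration, and the sketch ignores microlenses, which exist precisely to win back some of that lost light. The function names are illustrative, not from any real library.

```python
import math

def ratio_to_stops(ratio: float) -> float:
    """Express a linear ratio in photographic stops (log base 2)."""
    return math.log2(ratio)

def fill_factor_loss_stops(fill_factor: float) -> float:
    """Stops of light lost when only `fill_factor` of each pixel's area is
    light sensitive (ignoring any microlens that might recover some of it)."""
    if not 0.0 < fill_factor <= 1.0:
        raise ValueError("fill_factor must be in (0, 1]")
    return -math.log2(fill_factor)

def leaked_signal(highlight_signal: float, shutter_ratio: float) -> float:
    """Signal that still reaches a 'closed' electronic shutter, given its
    open/closed ratio (50_000 means 1/50,000 of the light gets through)."""
    return highlight_signal / shutter_ratio

if __name__ == "__main__":
    # Conventional in-pixel shutter vs. the claim for a shielded stacked design.
    for ratio in (50_000, 10**7):
        print(f"{ratio:>10,}:1 shutter -> {ratio_to_stops(ratio):.1f} stops")
    # Hypothetical 50% fill factor, for illustration only.
    print(f"50% fill factor -> {fill_factor_loss_stops(0.5):.1f} stop lost")
```

Read this way, a 50,000:1 shutter gives a little over 15 stops of isolation, so a highlight more than about 15 stops above the darkest part of the scene can leak through at a visible level; `leaked_signal` shows the same thing as a simple division.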

More crucially, the method by which a conventional CMOS sensor is built must be the same for all parts of the device. The familiar acronym refers to a semiconductor manufacturing process, a particular combination of materials and techniques. The materials and techniques used in the CMOS process are not ideally suited to producing high performance photodiodes.

100% fill factor

The ability to separate the layers and treat the photodiodes independently is therefore valuable, as is the option to put the electronics on a layer behind the light-sensitive parts. Crucially, all of the electronics can be shielded from interfering light, producing a shuttering efficiency of 10⁷ to 1 (ten million to one), and a reflector can be placed behind the photodiodes so that any light which passes straight through is reflected back out, for a second chance at detecting it. This is rather like the tapetum lucidum in the eyes of many animals, which creates the characteristic rabbit-in-the-headlights shine. Animals suffer a falloff in resolution due to the varying refractive index of the retina and the material within the eye, whereas the refractive index of silicon is so high that no such problem is anticipated in imaging sensors.

Perhaps most enticingly of all for film and TV applications, the big pixels provide a near-100% fill factor and therefore greater dynamic range and lower noise, and, in Gilblom's words, the design “eliminates the need for microlenses thus always presenting a smooth surface with a high acceptance angle to the optics”. This means that the classic, film-oriented lenses cinematographers sometimes choose, which don't necessarily deliver light to the sensor at right angles (strictly, they are not image-space telecentric), should not vignette.
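The benefit of the back reflector can be estimated with the Beer-Lambert law, which says the fraction of light surviving a pass through an absorbing layer falls off exponentially with thickness. The Python sketch below assumes a single extra pass after reflection and ignores front-surface reflection and further bounces; the 3 µm layer thickness and the absorption coefficient of 0.25 per µm are illustrative assumptions standing in for weakly absorbed, long-wavelength light, not figures from Lumiense.

```python
import math

def absorbed_fraction(thickness_um: float, alpha_per_um: float,
                      reflectivity: float = 0.0) -> float:
    """Fraction of photons entering a silicon layer that are absorbed.

    Single pass follows the Beer-Lambert law. If a back reflector is present
    (reflectivity > 0), light that gets through is sent back for exactly one
    more pass. Front-surface reflection and further bounces are ignored.
    """
    if not 0.0 <= reflectivity <= 1.0:
        raise ValueError("reflectivity must be between 0 and 1")
    transmitted = math.exp(-alpha_per_um * thickness_um)   # survives one pass
    first_pass = 1.0 - transmitted
    second_pass = transmitted * reflectivity * first_pass  # the "second chance"
    return first_pass + second_pass

if __name__ == "__main__":
    # Illustrative values only: 3 um photodiode layer, alpha = 0.25 per um.
    alpha, depth = 0.25, 3.0
    print(f"no reflector:      {absorbed_fraction(depth, alpha):.1%}")
    print(f"perfect reflector: {absorbed_fraction(depth, alpha, 1.0):.1%}")
```

With a perfect reflector the result is the same as absorption in a layer twice as thick, which is the point of the tapetum-style arrangement: more signal without making the photodiode layer deeper.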

Lumiense have already made test devices using a three-wafer stack: one wafer for the photodiodes, one for control electronics, and a third backing wafer which is kept reasonably thick for mechanical handling but can still carry devices of its own. This represents an absolutely enormous increase in the space available for electronics, so expect smart sensors to get even smarter and global shutter to become the norm. Sony are also talking about stacked sensors, and it wouldn't be surprising to find that the sensors in the new F5 and F55 cameras use the technique, though not with the same level of sophistication.

Much as Kodak can tell you about nitrates, T-grains and colour couplers, Alternative Vision can talk the hind leg off a donkey about diodes, oxide layers, indium ball bonds (yeah, yeah), wafer sizes, and all the other minutiae of sensor manufacturing.

"Removes the need to make compromises"

Alternative Vision’s David Gilblom told RedShark:

The SiLM sensor technology removes most of the need to make compromises among the various important imaging performance parameters since it allows each of these to be optimized nearly independently. Removing the circuitry from around the photodiodes permits application of high-efficiency anti-reflectance coatings for maximum photon capture and eliminates the need for microlenses thus always presenting a smooth surface with a high acceptance angle to the optics. Freeing the circuitry layer from area-hogging by the photodiodes opens up room for global shuttering (with sub-microsecond shuttering times), increased charge storage and in-pixel noise cancellation. The result - increased natural ISO speed, greatly improved linear dynamic range and elimination of motion artefacts.
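Two of the items in that list, increased charge storage and in-pixel noise cancellation, act directly on a common engineering definition of dynamic range: the largest charge a pixel can hold (its full-well capacity) divided by its noise floor. The Python sketch below expresses that ratio in stops; the 20,000-electron full well and 4-electron read noise are hypothetical numbers, not SiLM specifications, and the model ignores shot noise and dark current.

```python
import math

def dynamic_range_stops(full_well_electrons: float,
                        read_noise_electrons: float) -> float:
    """Engineering dynamic range: full-well capacity over read noise,
    expressed in stops (log base 2)."""
    if full_well_electrons <= 0 or read_noise_electrons <= 0:
        raise ValueError("both values must be positive")
    return math.log2(full_well_electrons / read_noise_electrons)

if __name__ == "__main__":
    # Hypothetical baseline pixel: 20,000 e- full well, 4 e- read noise.
    base = dynamic_range_stops(20_000, 4)
    # Doubling charge storage adds one stop...
    more_storage = dynamic_range_stops(40_000, 4)
    # ...and so does halving the noise floor.
    less_noise = dynamic_range_stops(20_000, 2)
    print(f"baseline:          {base:.2f} stops")
    print(f"2x charge storage: {more_storage:.2f} stops")
    print(f"half read noise:   {less_noise:.2f} stops")
```

Because the measure is a ratio, room for bigger storage capacitors and room for noise-cancelling circuitry each buy dynamic range independently, which is the kind of decoupling the quote describes.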

And when can we have cameras with this high-performance new technology? Well, the prototypes were made in 2009, funding for productisation is apparently imminent, and the first device, expected three to six months after that, will be a 4K by 4K sensor. Presumably an enthusiastic company could have a camera on set sometime in 2015.

This is not about pixel count

The thing to realise about all this, though, is that what we're talking about here is a way to offset some of the problems of existing CMOS sensors. This is not about pixel count; it's about global shuttering, sensitivity, dynamic range, and possibly frame rate, the important stuff that isn't really addressed by the race for resolution that has characterised sensor design up to this point. One of the things about CMOS sensors is that more or less anything is available to someone with a shockingly small amount of R&D money, especially in terms of scaling pixel count and frame rate (since frame rate is just a matter of how many parallel pipes of data are coming off the chip). As I think has been proven recently, ever-increasing pixel count is less useful than it once was: that war is won, and it'll be interesting to see what the next few battles are.
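The "parallel pipes" point can be made concrete with simple arithmetic: the frame rate a sensor's outputs can sustain is the total bits per second leaving the chip divided by the bits in one frame. The Python sketch below assumes a 4096 × 4096 sensor (one reading of "4K by 4K"), 12-bit samples and 1 Gbit/s per output lane; all three are hypothetical, and real figures are lower once blanking, protocol overhead and ADC conversion time are counted.

```python
def max_frame_rate(width: int, height: int, bits_per_pixel: int,
                   lanes: int, gbps_per_lane: float) -> float:
    """Upper bound on frame rate imposed only by output bandwidth.

    Ignores blanking intervals, protocol overhead and ADC conversion time,
    all of which lower the real figure.
    """
    bits_per_frame = width * height * bits_per_pixel
    bits_per_second = lanes * gbps_per_lane * 1e9
    return bits_per_second / bits_per_frame

if __name__ == "__main__":
    # Hypothetical 4096 x 4096 sensor, 12-bit output, 1 Gbit/s per lane.
    for lanes in (8, 16, 32):
        fps = max_frame_rate(4096, 4096, 12, lanes, 1.0)
        print(f"{lanes:2d} lanes -> {fps:6.1f} fps")
```

Doubling the number of lanes doubles the ceiling, which is why frame rate scales so readily once the silicon has room for more output channels.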

