Camera tests that point intra-frame codec cameras at moving targets to test compression are futile.

The internet is full of camera tests, particularly ones that claim to test codec compression. But compression often can't be tested in the way people assume.

Why would someone shooting a camera test want to point that camera at moving leaves on a tree or falling water? The clue is that it's intended to be a test of the compression codec that's being used, but often that's a mistake: a moving subject frequently doesn't make any difference to the way the compression works. For anyone who's ever got into the details of how video compression works, the reasoning behind this will be clear, but it's probably worth going over the basics.

A test involving a lot of motion is, in principle, a good idea. Most of the video we watch, including digital TV, over-the-top services like Netflix and Amazon Prime, and web video, uses compression techniques that take advantage of the similarity between nearby frames. This will be a recap for many people, but it's worth being clear: statistically, most of the content of most shots is nearly static, moving around only due to noise or tiny motions of the camera, and that's something we can use to achieve better compression.

Modern distribution codecs don't usually just copy parts of one frame into another. Real-world data from cameras always contains noise, so chunks of image will only nearly match, and there's usually some tolerance for that. Codecs also often slide blocks of image around to follow camera or subject movement, storing the direction and distance of the movement. At very high compression ratios, the tolerance for a near match becomes quite large and the precision of the stored movement quite low. That can create visible discontinuities in the image, with blocks of not-quite-identical picture floating around, but the compression gains can be huge.
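The block-sliding idea described above can be sketched in a few lines of Python. This is an illustration only, not how any particular codec does it: the function names, the 8-pixel block, the 4-pixel search range and the `TOLERANCE` value are all assumptions made for the example, and real encoders use much smarter searches and sub-pixel precision.

```python
import random

def sad(frame_a, frame_b, ax, ay, bx, by, size):
    """Sum of absolute differences between two size x size blocks."""
    total = 0
    for dy in range(size):
        for dx in range(size):
            total += abs(frame_a[ay + dy][ax + dx] - frame_b[by + dy][bx + dx])
    return total

def find_motion_vector(prev, cur, x, y, size=8, search=4):
    """Exhaustively search prev for the block that best matches cur's block at (x, y).

    Returns ((dx, dy), error), where (dx, dy) points from the current block
    to its best match in the previous frame.
    """
    height, width = len(prev), len(prev[0])
    best = ((0, 0), sad(cur, prev, x, y, x, y, size))
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            px, py = x + dx, y + dy
            if 0 <= px <= width - size and 0 <= py <= height - size:
                err = sad(cur, prev, x, y, px, py, size)
                if err < best[1]:
                    best = ((dx, dy), err)
    return best

# Build a detailed 32x32 frame, then a second frame that is the same picture
# moved three pixels to the right with a little sensor-style noise added.
rng = random.Random(1)
prev = [[rng.randrange(256) for _ in range(32)] for _ in range(32)]
cur = [[prev[y][max(x - 3, 0)] + rng.randint(-2, 2) for x in range(32)]
       for y in range(32)]

vector, error = find_motion_vector(prev, cur, 12, 12)

# Hypothetical threshold: below it the encoder stores only the vector,
# above it the encoder would have to store the block's pixels as well.
TOLERANCE = 200
use_prediction = error <= TOLERANCE
print(vector, error, use_prediction)
```

The match is never perfect because of the added noise, which is why a tolerance is needed at all. Raising `TOLERANCE` is the crude equivalent of compressing harder: more blocks are accepted as "close enough", and more of them end up visibly wrong.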

That's inter-frame compression, and yes, a picture with a lot of motion in it will work such a codec hard and create artefacts that most of us have seen in particularly harshly compressed YouTube videos.

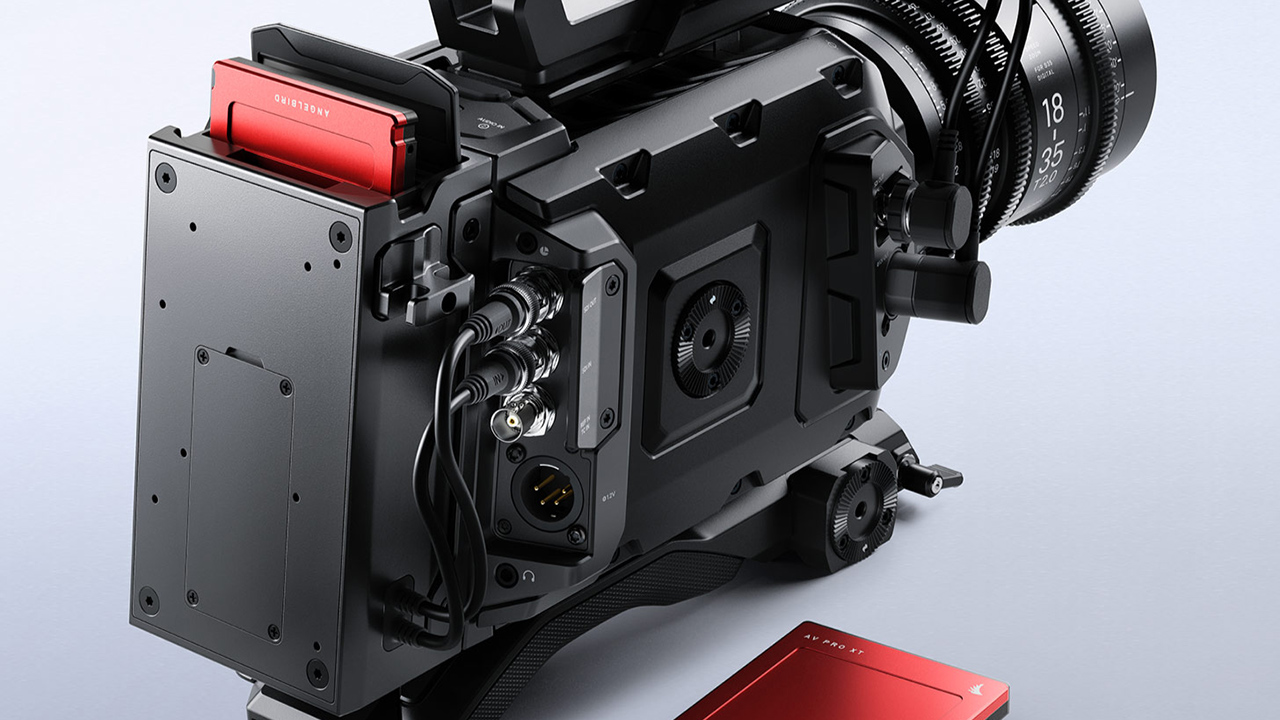

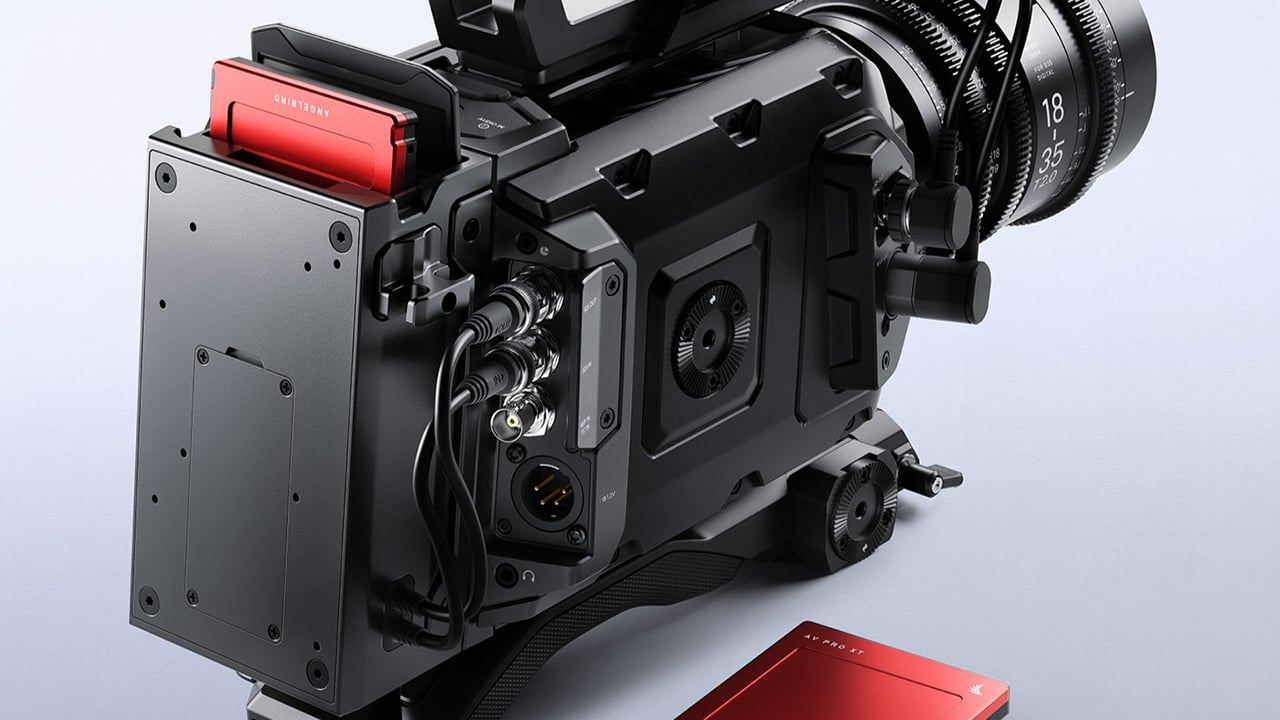

The thing is, these are techniques mainly used for distribution, not for acquisition. There are exceptions, including DSLRs, variants of XAVC, AVCHD and cell phone camera apps, all of which use inter-frame compression (and which are typically based on MPEG-4 AVC/H.264). It's done because cost-constrained devices usually want to use the least possible flash memory to store the maximum possible runtime of media, but that approach is rare at anything other than the most cost-conscious end of the market. ProRes, DNxHD and essentially all raw formats avoid inter-frame compression, so their performance on rapidly moving scenes is identical to their performance on completely static ones.
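A rough way to see this for yourself is to compress a short sequence twice, once frame by frame and once as differences from the previous frame. The sketch below uses Python's `zlib` as a stand-in for a real codec and plain frame differencing as a stand-in for inter-frame prediction (with no motion compensation), so the numbers mean nothing in absolute terms. The frame size, the random texture and the helper names are all assumptions made for the example.

```python
import random
import zlib

WIDTH, HEIGHT, FRAMES = 64, 64, 5

def shift_rows(frame, pixels):
    """Slide every row sideways (wrapping round) to imitate a moving subject."""
    out = []
    for row in frame:
        p = pixels % len(row)
        out.append(row[-p:] + row[:-p])
    return out

def intra_size(frame):
    """Bytes needed when the frame is compressed with no reference to any other frame."""
    return len(zlib.compress(bytes(v for row in frame for v in row)))

def inter_size(prev, cur):
    """Bytes needed when only the difference from the previous frame is compressed."""
    residual = bytes((c - p) % 256
                     for prow, crow in zip(prev, cur)
                     for p, c in zip(prow, crow))
    return len(zlib.compress(residual))

def measure(frames):
    """Return per-frame intra sizes and per-transition inter sizes."""
    intra = [intra_size(f) for f in frames]
    inter = [inter_size(a, b) for a, b in zip(frames, frames[1:])]
    return intra, inter

# A frame full of fine detail: 64 grey levels scattered at random.
rng = random.Random(0)
base = [[4 * rng.randrange(64) for _ in range(WIDTH)] for _ in range(HEIGHT)]

static = [base for _ in range(FRAMES)]                       # locked-off shot
moving = [shift_rows(base, 5 * k) for k in range(FRAMES)]    # same detail, sliding

static_intra, static_inter = measure(static)
moving_intra, moving_inter = measure(moving)

print("intra, static:", static_intra)
print("intra, moving:", moving_intra)
print("inter, static:", static_inter)
print("inter, moving:", moving_inter)
```

The per-frame sizes should come out almost the same whether the picture moves or not, because each frame is compressed without any knowledge of its neighbours. The difference-based sizes collapse to almost nothing on the static sequence and balloon on the moving one, which is the behaviour a motion test is actually looking for.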

The intra-frame difference

Pointing an intra-frame (as opposed to an inter-frame) codec at a tree full of gently waving leaves, in the hope that it will stress the recording, represents a misunderstanding of what's going on. Naturally, there are things that will work an in-camera codec harder, particularly pictures with lots of fine detail, and especially fine detail that fills the whole frame. Many intra-frame codecs use slightly different compression in different areas of the frame, perhaps assuming that part of a picture might be mainly sky or mainly a wall, or mainly out of focus. That's a technique used on everything from JPEG up. Fill a whole frame with fine detail and the codec works harder, but that detail doesn't have to change every frame.

It's usually possible to see the difference between inter and intra-frame codecs by looking closely, especially with the contrast wound up. An intra-frame codec that doesn't look at multiple frames will tend to produce artefacts that fall on a grid, often eight by eight pixels, and which don't move around. Inter-frame codecs produce artefacts that slide around. Motion in the image can sometimes make the block edges more visible, but there's no requirement that the whole image change every frame, even if that were a realistic simulation of any likely real-world scenario.
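The fixed grid is easy to reproduce with a deliberately crude model. The sketch below replaces every eight by eight block with its average value, which is roughly what a block-based codec is left with once quantisation has thrown away everything except each block's overall level. It is not a real codec; the function names and the sliding gradient test pattern are assumptions made for the example.

```python
BLOCK = 8

def keep_block_means(frame):
    """Crude stand-in for heavy intra-frame quantisation: keep only each block's mean."""
    height, width = len(frame), len(frame[0])
    out = [[0] * width for _ in range(height)]
    for by in range(0, height, BLOCK):
        for bx in range(0, width, BLOCK):
            total = sum(frame[y][x]
                        for y in range(by, by + BLOCK)
                        for x in range(bx, bx + BLOCK))
            mean = total // (BLOCK * BLOCK)
            for y in range(by, by + BLOCK):
                for x in range(bx, bx + BLOCK):
                    out[y][x] = mean
    return out

def edge_columns(frame):
    """Columns x where some pixel differs from its left-hand neighbour."""
    return sorted({x for row in frame
                   for x in range(1, len(row))
                   if row[x] != row[x - 1]})

def ramp(offset, width=32, height=32):
    """A smooth horizontal gradient; offset slides it sideways between frames."""
    return [[(x + offset) * 4 for x in range(width)] for _ in range(height)]

# Three frames of the same gradient, sliding three pixels per frame.
frames = [ramp(offset) for offset in (0, 3, 6)]
grids = [edge_columns(keep_block_means(f)) for f in frames]
print(grids)  # → [[8, 16, 24], [8, 16, 24], [8, 16, 24]]
```

The picture content slides from frame to frame, but the visible steps stay at columns 8, 16 and 24 because they belong to the block grid, not to the picture.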

It's worth a quick aside to make something else clear. Because it's mainly used on lower-cost cameras, there's a popular impression that inter-frame compression compromises image quality, which isn't really true. It's valid to assume that two pixels right next to each other in space might have similar values, which is the assumption behind intra-frame compression. It's just as valid to assume that two pixels right next to each other in time might have similar values, and that knowledge can quite reasonably be used to improve the bitrate-to-quality ratio.

The reasons that inter-frame compression is avoided by all but the cheapest cameras are a little fuzzy and perhaps somewhat political. Better cameras have more flash and are more able to simply throw bitrate at the problem, avoiding the extra complexity of compressing with inter-frame techniques. Lesser cameras tend to have less storage, and in 2018 CPUs are cheaper than flash. It's a tradeoff between complexity and bitrate. If it's not clear why a big, expensive camera couldn't have lots of flash and lots of processing power, and use inter-frame compression to improve the signal to noise ratio across its codec, er, well, yes, that's a pretty valid question.

But as long as most acquisition is done with intra-frame codecs, there's no need for test subjects to move.
