Realtime raytracing used to be the Holy Grail of 3D graphics. It was once thought impossible, but recent demos suggest otherwise, even if doing it yourself might require a lottery win!

The events most relevant to GPU computing are mainly those connected with video games, particularly the Game Developers Conference (GDC). We often overlook it because it happens in late March, just before NAB, but this year two notable things happened at GDC.

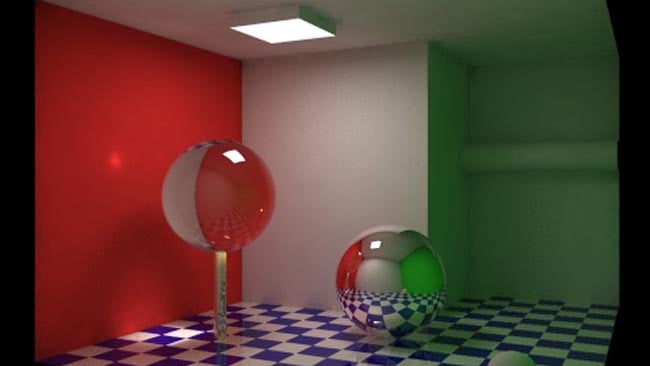

Shiny Objects - the purpose of raytracing. Courtesy Nvidia

The first was a lot of buzz about Nvidia's next series of cards, which are widely expected given that the GTX 10-series was announced in 2016. This is perhaps of less interest to film and TV people, since most of the applications we have for GPUs barely taxed even the 9-series, let alone the presumably expanded capabilities of whatever comes next. The second, and possibly more interesting, was the growing discussion of realtime raytracing. This is unusual territory for post production, where GPUs are very rarely asked to render graphics in the way they are for games. It does happen, as in plugins such as Video Copilot's Element 3D, but mainly we use GPUs for abstract number-crunching, not for rendering polygons.

AMD's Radeon ProRender, based on its Radeon Rays technology, is designed to make offline rendering of raytraced images faster. Courtesy AMD

To understand why raytracing is interesting, we need to understand how it differs from the techniques normally used for video games. Explanations of computer graphics dating back to the 1970s and 1980s tended to show rays of light shooting out of virtual lights, bouncing off things and ending up in the lens of a virtual camera. That, broadly, is raytracing, but it's horrifically time-consuming. Consider a real-world light: a vast number of photons come out of it over the fraction of a second that represents a single video frame. If those photons bounce off a surface that isn't perfectly mirror-finished, they may go off in a variety of random directions.
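To put a rough number on "horrifically time-consuming", here is a minimal Python sketch of a hypothetical setup that is not taken from the article: a point light emitting photons equally in all directions, and a lens opening 5 cm across facing the light from 5 m away. The function name `aperture_fraction` is purely illustrative. It uses the standard solid-angle formula for a disc seen on-axis and ignores bounces entirely, so a real scene, where photons must first scatter off surfaces, would fare worse. Even in this friendly case only about one photon in 160,000 reaches the lens.

```python
import math


def aperture_fraction(aperture_radius_m: float, distance_m: float) -> float:
    """Fraction of photons from an isotropic point light that pass through a
    circular aperture facing the light on-axis (hypothetical geometry)."""
    d, r = distance_m, aperture_radius_m
    # Exact solid angle of a disc seen on-axis: 2*pi*(1 - d / sqrt(d^2 + r^2)).
    omega = 2 * math.pi * (1 - d / math.sqrt(d * d + r * r))
    # The light emits into a full sphere of 4*pi steradians.
    return omega / (4 * math.pi)


# Hypothetical example: 5 cm lens opening (2.5 cm radius), 5 m from the light.
fraction = aperture_fraction(0.025, 5.0)
print(f"{fraction:.2e} of emitted photons reach the lens, about 1 in {1 / fraction:,.0f}")
```

Every other photon would have to be computed and then discarded, which is the waste the camera-first approach avoids.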

Nvidia's RTX technology, here running on an extremely high-end system, uses at least some raytracing to produce a Star Wars-themed scene. Courtesy Nvidia

Huge calculations

Calculating that huge number of rays simply to find the tiny proportion that happens to land in the relatively small aperture of a camera lens is utterly impractical. Even very careful raytracing programs tend to work the other way around: rays are fired out from the camera, at least one for each pixel, and hit objects, which gives us much the same result. It's then simple, in principle, to calculate a ray from the point where the camera ray hit the object to each light source in the scene. If that ray passes through anything opaque, the point is in shadow; if not, it's illuminated by that light. If the object is transparent, rays of light passing through it can be calculated too, and this approach can take into account effects such as refraction in transparent objects and diffusion from matte surfaces.
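The camera-first approach can be sketched in a few dozen lines of Python. This is a minimal illustration under assumptions of our own: the scene contains only spheres, there is a single point light, and the hypothetical `trace_pixel` function reports just "lit", "shadow" or "background" rather than a colour. It fires one primary ray, finds the nearest hit, then fires a shadow ray towards the light and checks whether any sphere lies in between. Transparency and refraction are left out.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Sphere:
    center: Vec
    radius: float


def hit_distance(origin: Vec, direction: Vec, sphere: Sphere) -> Optional[float]:
    """Distance along a unit-length ray to the nearest hit in front of it, or None."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c  # solves |origin + t*direction - center|^2 = radius^2
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # small epsilon so a ray doesn't re-hit the point it started from
            return t
    return None


def trace_pixel(origin: Vec, direction: Vec, spheres: List[Sphere], light: Vec) -> str:
    # 1. Primary ray from the camera: find the nearest object it hits.
    hits = [t for t in (hit_distance(origin, direction, s) for s in spheres) if t is not None]
    if not hits:
        return "background"
    point = add(origin, scale(direction, min(hits)))
    # 2. Shadow ray from the hit point towards the light.
    to_light = sub(light, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    shadow_dir = scale(to_light, 1 / light_dist)
    for s in spheres:
        t = hit_distance(point, shadow_dir, s)
        if t is not None and t < light_dist:
            return "shadow"  # something opaque sits between the point and the light
    return "lit"


camera = (0.0, 0.0, 0.0)
ball = Sphere((0.0, 0.0, -5.0), 1.0)
blocker = Sphere((0.0, 2.5, -2.0), 0.5)
light = (0.0, 5.0, 0.0)
print(trace_pixel(camera, (0.0, 0.0, -1.0), [ball], light))
print(trace_pixel(camera, (0.0, 0.0, -1.0), [ball, blocker], light))
```

Adding the small `blocker` sphere between the ball and the light turns the same pixel from lit to shadowed, without the camera ray ever touching the blocker.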

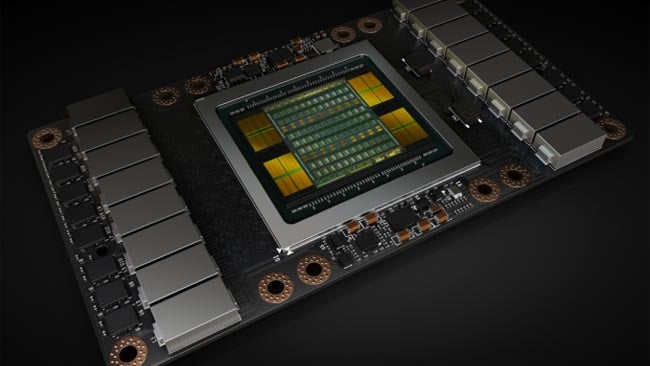

Nvidia GV100 GPU. A lot, but still not enough (and this is, ironically, a rendering)

Simple in theory

Raytracing is, then, pretty simple in theory, and recreates reflective and transparent effects fairly accurately. It's actually simpler in concept than the sort of tricks used by video games, but the problem is that the number of rays to calculate can be huge. That's especially so if we want to see reflections of reflections, because we then have to keep following the light ray as it bounces off several objects in the scene. Often, the maximum number of bounces is a user setting that trades accuracy for performance.
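One way to see why the bounce limit matters is to count rays in the worst case. The Python sketch below assumes, hypothetically, that every hit lands on a surface that is both reflective and transparent, so each ray spawns one reflected and one refracted ray until `max_bounces` is reached. Under that assumption the count per pixel is 2^(n+1) - 1 for a limit of n bounces. Real renderers stop rays that miss the scene or contribute little, so treat this as an upper bound rather than a measurement.

```python
def rays_per_pixel(max_bounces: int, branches: int = 2) -> int:
    """Worst-case rays traced for one pixel if every hit spawns `branches`
    secondary rays (e.g. one reflected, one refracted) until the bounce
    limit is reached. Assumes every ray hits a surface."""
    if max_bounces == 0:
        return 1  # just the primary ray from the camera
    # This ray, plus everything traced by each of its child rays.
    return 1 + branches * rays_per_pixel(max_bounces - 1, branches)


for bounces in (1, 2, 4, 8):
    print(f"max bounces {bounces}: {rays_per_pixel(bounces):,} rays per pixel")
```

With a limit of one bounce that is 3 rays for a pixel; with a limit of eight it is 511.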

Things get even more complicated when we consider that diffuse (matte) surfaces, such as a sheet of paper, don't look reflective because microscopic roughness makes photons bounce off them at largely random angles. Simulating that requires a large number of light rays to be calculated for each point on the surface of the object, each assumed to bounce off at a different, random angle, with the results all averaged together.
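That averaging is a Monte Carlo estimate. The Python sketch below is a minimal, hypothetical version: instead of tracing each random bounce into a scene, it assumes the point is surrounded by an evenly lit environment of brightness `sky_radiance`, so every random direction returns the same light. Directions are picked uniformly over the hemisphere above the surface, each is weighted by the cosine of its angle to the surface normal, and the results are averaged. For that setup the exact answer is π times the sky brightness, which lets us check the estimate.

```python
import math
import random
from typing import Tuple


def sample_hemisphere(rng: random.Random) -> Tuple[float, float, float]:
    """Uniformly random direction on the hemisphere around the +z surface normal."""
    z = rng.random()  # for uniform hemisphere sampling, cos(theta) is uniform on [0, 1)
    phi = 2 * math.pi * rng.random()
    s = math.sqrt(1 - z * z)
    return (s * math.cos(phi), s * math.sin(phi), z)


def estimate_irradiance(sky_radiance: float, samples: int, rng: random.Random) -> float:
    """Average many random bounce directions (hypothetical uniformly lit surroundings)."""
    total = 0.0
    for _ in range(samples):
        direction = sample_hemisphere(rng)
        cos_theta = direction[2]
        # Incoming light times the cosine factor, divided by the sampling
        # probability density 1 / (2*pi) of a uniform hemisphere direction.
        total += sky_radiance * cos_theta * 2 * math.pi
    return total / samples


rng = random.Random(42)
print(estimate_irradiance(1.0, 10_000, rng), "exact:", math.pi)
```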

This image, rendered by Grzegorz Tanski, is the canonical example of global illumination. Notice that the coloured walls cast their colour onto the floor and ceiling.

This approach allows, say, a red ball to cast a glow of red light onto a nearby white surface, which looks great (above). This is where terms like “global illumination” come in, though, and it requires absolutely astronomical numbers of calculations. Reducing the number of angles calculated for bounces off a diffuse surface means we may not be averaging enough samples to get an accurate idea of the brightness of the surface at that point. This makes the image look speckly and noisy.
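The link between sample count and speckle can be shown with a hypothetical evenly lit white surface. In the Python sketch below every pixel should come out with the same brightness (π), so any spread between pixels is pure sampling noise; `pixel_estimate` and `pixel_noise` are illustrative helpers, not part of any renderer. The spread shrinks with the square root of the number of samples, which is the standard behaviour of Monte Carlo averaging: halving the noise takes four times as many rays.

```python
import math
import random
import statistics


def pixel_estimate(samples: int, rng: random.Random) -> float:
    # Hypothetical evenly lit surface: each random bounce contributes
    # 2*pi*cos(theta), with cos(theta) uniform on [0, 1) for uniform hemisphere sampling.
    return sum(2 * math.pi * rng.random() for _ in range(samples)) / samples


def pixel_noise(samples: int, pixels: int = 2000, seed: int = 0) -> float:
    """Standard deviation across many pixels that should all be identical."""
    rng = random.Random(seed)
    values = [pixel_estimate(samples, rng) for _ in range(pixels)]
    return statistics.stdev(values)


for n in (4, 16, 64, 256):
    print(f"{n:4d} samples per pixel -> noise {pixel_noise(n):.3f}")
```

In this setup 256 samples per pixel should give roughly an eighth of the noise of 4 samples, at 64 times the cost.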

It's possible to raytrace reflections and refraction in a scene without that sort of global illumination, but the problem remains: calculating all those bouncing rays has been impossible to do in real time, and has often been too slow even for offline-rendered graphics. The calculations are highly repetitive and reasonably well suited to GPU computing, so things have sped up a lot recently, but even then, raytracing is very, very hard work.
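Some back-of-the-envelope arithmetic shows why real time is so hard. The Python sketch below counts rays per second for a 1920×1080 image at 24fps. The 64 samples per pixel and 4 bounces in the second example are hypothetical figures chosen for illustration, and shadow rays, which would add more, are not counted.

```python
def rays_per_second(width: int, height: int, fps: int,
                    samples_per_pixel: int, bounces: int) -> int:
    """Rays per second if each sample follows one path with `bounces` bounces.
    Counts one ray per path segment; shadow rays are not included."""
    rays_per_sample = 1 + bounces  # the primary camera ray plus one ray per bounce
    return width * height * fps * samples_per_pixel * rays_per_sample


print(f"{rays_per_second(1920, 1080, 24, 1, 0):,} primary rays per second")
print(f"{rays_per_second(1920, 1080, 24, 64, 4):,} rays per second with 64 samples, 4 bounces")
```

That works out at 49,766,400 rays per second for a single ray per pixel, and about 15.9 billion with the hypothetical 64 samples and 4 bounces.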

An alternative

The alternative is simply to work out what each pixel on the screen shows, conceptually by sending (at least) one ray per pixel and finding what it hits. If that object is red, paint the pixel red. That doesn't even simulate lighting, so add some simple calculations to brighten or darken the pixel based on how close the lights are and the angle at which their light strikes the object. That still doesn't get you reflections, refractions or shadows, let alone global illumination. These are things that were, until recently, noticeably absent from video games. Even now they tend to be roughly approximated using fast, abbreviated mathematics, or are precalculated when the game is being designed.
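The "brighten or darken the pixel" step is often done with Lambert's cosine law plus inverse-square falloff with distance. The article doesn't name a specific model, so treat this Python sketch as one common choice rather than what any particular game engine does. The illustrative `shade` function takes a base colour, the surface normal, the surface position and a single point light; nothing is traced beyond the pixel, so there are no shadows, reflections or refractions.

```python
import math
from typing import Sequence, Tuple


def shade(base_color: Sequence[float], normal: Sequence[float],
          surface_pos: Sequence[float], light_pos: Sequence[float],
          light_intensity: float) -> Tuple[float, ...]:
    """Brighten or darken a surface colour from light distance and angle."""
    to_light = [l - p for l, p in zip(light_pos, surface_pos)]
    dist_sq = sum(c * c for c in to_light)
    dist = math.sqrt(dist_sq)
    light_dir = [c / dist for c in to_light]
    normal_len = math.sqrt(sum(c * c for c in normal))
    # Lambert's cosine law: light arriving at a glancing angle is spread over more area.
    cos_angle = sum(n * l for n, l in zip(normal, light_dir)) / normal_len
    # Surfaces facing away get no light; brightness falls off with distance squared.
    brightness = max(0.0, cos_angle) * light_intensity / dist_sq
    # Clamp each channel to the displayable range.
    return tuple(min(1.0, c * brightness) for c in base_color)


# A red surface facing straight up, lit from 2 m directly above.
print(shade((1.0, 0.0, 0.0), (0, 0, 1), (0, 0, 0), (0, 0, 2), light_intensity=4.0))
```

Moving the light below the surface returns black, since the cosine term is clamped at zero, and nothing in the function could ever produce a shadow cast by another object.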

The issue that confronts us in 2018 is that the list of things we want to simulate, including reflections, refractions and global illumination, is starting to add a lot of complexity. The approximations needed for all of them are certainly more complex and involved than the simple, if bulky, mathematics of raytracing. To this end, several companies are pushing raytracing: Microsoft with its DirectX Raytracing (DXR) extensions to the DirectX 12 3D rendering API, Nvidia with RTX on its new Volta architecture, and AMD with Radeon Rays and the derived Radeon ProRender technology. So, does this mean that pre-calculated raytracing for visual effects work and realtime 3D for video games are likely to converge in the next few years?

Not really, no. It's been talked about for ages, but the demands of raytracing are still well beyond what big workstations can do, let alone home computers, let alone consoles, let alone tablets and phones. Recent demos have relied on massively upscale workstations worth a year's wages, using enormously exotic hardware, particularly Nvidia's Volta-based Quadro GV series. Given that Nvidia have just changed their licensing agreement to prevent people using games-oriented GTX graphics cards in server farms, we might assume that the company is keen to keep its higher- and lower-end product lines separate. For now, raytraced scenes at 24fps are firmly the province of the high end, and seem set to remain that way.
