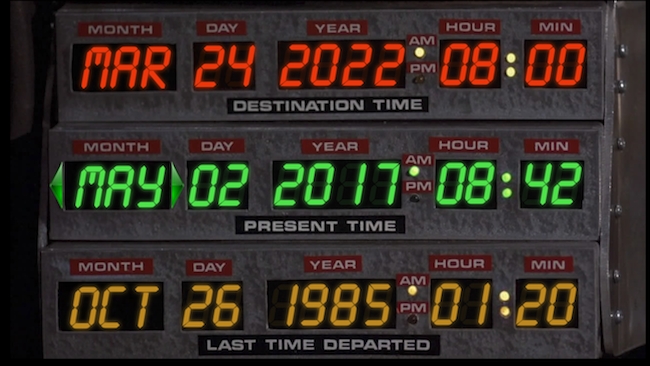

That’s not a typo. We’ve just finished NAB 2017, but what can we expect to see in five years’ time? And are the trends visible now? From computational imaging to augmented reality to object-oriented video, David Shapton thinks they are.

It’s getting harder and harder to predict anything. Technology, along with its intended and unintended effects, has made the world less predictable and, in some real sense, less stable as well. It’s possible that some of the recent political surprises wouldn’t have happened without social media and the ability of this new medium to carry video.

Many of the trends in technology are now well established. Nobody’s going to be surprised to hear that by 2022, 4K will be universal, even in smartphones, and 8K will be approaching the mainstream. I personally don’t think there will be 16K, except in very specialist and esoteric circumstances, because I think that other types of video will get there first. (See below!)

But watching the trends doesn’t tell you everything, although it’s a good start. Increasingly, technological change is happening in bursts, unpredictably. Surprisingly, perhaps, this is predicted by exponential progress: the idea that technology is on an upward curve because each generation of it provides the smartness, the resolution or the computing power to create the next, better, generation. Ray Kurzweil’s seminal book “The Singularity Is Near”, despite being written over a decade ago, is pretty much spot-on with this. We are, he says, headed for a “Singularity”: a sort of event horizon (to use the term with a lot of licence) beyond which we can’t predict anything because the rate of change is too fast. This is just one interpretation of the Singularity, but it’s the most useful and least fanciful one for our purposes.

Connecting the dots

The practical mechanism that enables technology to leap ahead unpredictably is that we’re able to look at a bunch of disparate products and systems and easily connect them to make something that’s much, much more powerful. Take Uber, for example.

Uber depends on GPS, smartphones, wireless internet and a massive, distributed database. Uber only had to create one of those: the database. In doing so, they’ve taken off in a helicopter and looked at the technology landscape from a new perspective, one not visible from the ground. And what they’ve done is amazing: with remarkably little effort, they’ve created an industry-disrupting product that is totally dependent on all those things they didn’t invent. Whatever you might think about Uber, it is now probably the best example (that I can think of) of the way that we’re going to progress in the future.

And what happens when you have several or many companies with similar provenance to Uber? Then someone will probably go to the next level up, connect all the Uber-level companies and invent something even more surprising and even more disruptive.

Meanwhile, back in the land of film, TV and video, we’ve seen enough changes over the last decade to last for a century. Except that we can expect even more change in the next decade than we have seen in the last hundred years or possibly ever. That makes it very hard to predict five years ahead.

Predictions

But here are some things to look out for.

1. Computational imaging: the ability to take relatively poor or low-resolution images from multiple lenses and “compute” an extremely high-resolution image, quite possibly with the ability to focus and reframe in post-production. Light’s L16 camera, soon to be shipped, is the best example of this so far. This is a genie that is out of the bottle. It will change everything. Lens makers should feel concerned, although I think they will always have a role.

Light Field photography should probably be included here too. Lytro is an interesting mover in this space and if it manages to gain traction with professionals, we can expect some big changes.
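To give a flavour of what “computing” a better image from several poorer ones can mean, here is a deliberately simplified sketch. It assumes that every capture is already perfectly aligned and differs only by random sensor noise; real multi-lens cameras have to solve far harder problems (parallax, different focal lengths, depth estimation), and this is not a description of how Light or Lytro actually do it. The function names and the noise level are made up for illustration.

```python
import random
import statistics


def capture(scene, noise_sigma, rng):
    """Simulate one small, noisy sensor looking at the same scene."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]


def fuse(captures):
    """Combine aligned captures by averaging them pixel by pixel."""
    return [statistics.fmean(samples) for samples in zip(*captures)]


def rms_error(image, scene):
    """Root-mean-square difference between an image and the true scene."""
    total = sum((a - b) ** 2 for a, b in zip(image, scene))
    return (total / len(scene)) ** 0.5


rng = random.Random(2017)  # fixed seed so the run is repeatable
# A striped one-dimensional test pattern standing in for the real scene.
scene = [0.5 + 0.4 * ((i // 8) % 2) for i in range(4096)]
# Sixteen hypothetical captures, each with the same amount of noise.
captures = [capture(scene, 0.1, rng) for _ in range(16)]

single_error = rms_error(captures[0], scene)
fused_error = rms_error(fuse(captures), scene)
print(f"one capture:   {single_error:.4f}")
print(f"fused capture: {fused_error:.4f}")
```

Averaging N captures with independent noise reduces the noise by roughly the square root of N, so the fused result here should be around four times cleaner than any single capture. That is the simplest possible case of several modest sensors adding up to something better than any one of them.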

2. Augmented reality. To some, this is a bit of a bore. It’s been talked about for a long time and has largely failed to deliver, except for the latest iteration of Pokémon, which is not exactly state-of-the-art.

Don’t write it off. It’s likely to be bigger than Virtual Reality. Seriously.

One of the many issues with VR (as opposed to AR) is that it blocks everything else out. That’s fine if you want a truly immersive experience. You can’t spend your whole life in VR, but you conceivably can spend your whole life in AR. Effectively, the world out there will become your display space.

A lot needs to happen to make AR universally acceptable. In fact, there isn’t room here to go into even a tenth of the detail that we would need. But it’s all moving that way. Facebook has just announced its AR project. Microsoft has HoloLens. And others are beavering away behind the scenes with astonishingly generous investments behind them. This space is not standing still: it’s poised to go into orbit.

3. Object-oriented video. Quite simply, this is what replaces pixel-based video. It replaces 2D video. It replaces all video resolutions. It is difficult and it will take some time but it will start to happen very soon. In some ways, early AR demonstrations show at the very least the need for OOV and perhaps the beginnings of how it might happen.

At the risk of sounding like a sci-fi movie trailer, imagine a world where a video signal (a recording or a live feed) contains not just the 2D image on the surface of a sensor, but information about the 3D shapes, textures and lighting of the scene. Imagine that it’s like a video game, but more detailed and based on reality.

If this sounds fanciful, it largely is at the moment. But there is nothing in principle that would stop it from happening and when it does, it will free us from all resolutions and frame rates and will allow artificially generated objects to integrate perfectly with the real world — perhaps “holographically”. (I’ve put the holographic reference in quotes because it’s one of the most misused terms in the whole field of imaging, unless you’re prepared to allow it to be defined as “any 3D image that changes to reflect a viewer’s position” or something like that.)
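A toy example may help to show why describing objects rather than pixels frees you from resolution. The sketch below is purely illustrative: the `Disc` class and `render` function are hypothetical names invented here, the “scene” is a single flat 2D shape rather than real 3D geometry, textures and lighting, and nothing about it reflects an actual object-oriented video format.

```python
from dataclasses import dataclass


@dataclass
class Disc:
    """A hypothetical scene object, stored in resolution-free units (0 to 1)."""
    cx: float
    cy: float
    radius: float
    brightness: float


def render(objects, width, height, background=0.0):
    """Turn the scene description into pixels at whatever size is requested."""
    frame = []
    for row in range(height):
        y = (row + 0.5) / height  # centre of this pixel in scene units
        line = []
        for col in range(width):
            x = (col + 0.5) / width
            value = background
            for obj in objects:  # later objects are drawn on top of earlier ones
                if (x - obj.cx) ** 2 + (y - obj.cy) ** 2 <= obj.radius ** 2:
                    value = obj.brightness
            line.append(value)
        frame.append(line)
    return frame


def coverage(frame):
    """Fraction of the frame that is lit, used to compare renders of different sizes."""
    return sum(map(sum, frame)) / (len(frame) * len(frame[0]))


# The "signal" is just this description; it contains no pixels at all.
scene = [Disc(cx=0.5, cy=0.5, radius=0.25, brightness=1.0)]

preview = render(scene, 16, 16)    # a tiny preview
master = render(scene, 256, 256)   # a larger render from the same description
print(f"preview coverage: {coverage(preview):.3f}")
print(f"master coverage:  {coverage(master):.3f}")
```

The same description produces both frames, and the larger one is simply a more faithful rendering of the disc, not an upscaled copy of the smaller one. Resolution becomes a property of the display, not of the recording.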

Ultimately video will become a re-synthesised and resolution-free representation of the real world. It may become the world that we live in or perhaps one that we merely assimilate as an essential part of the way we relate to the world. What does “re-synthesised” mean? It means that a “model” of an object, and its behaviours, is created that simulates the real object in all relevant aspects. It’s a bit like the way that it’s possible now to create an acoustic model of a piano that is very nearly as good as the real thing, even (especially!) down to the way that when you play a single note, other piano strings — and even the frame of the piano — vibrate to give an incredibly complex “compound” sound. Physical models can do this. Re-synthesis is the process of creating a physical model in software virtually automatically.

There is much more to say about this than there is room for here. Artificial intelligence and machine learning will play a big role. Think about it: a truly intelligent robot that’s able to emulate a human being will need a human-like “image” of the world in order merely to exist. There is so much research going into computer vision for robotics and driverless cars right now that it will touch every part of every imaging industry.

This is going to be fun, and probably a bit scary too.