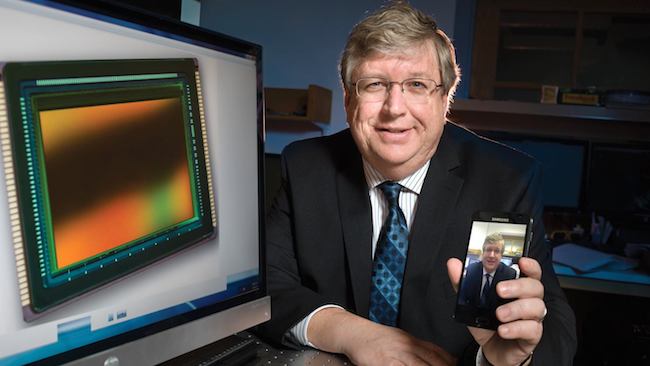

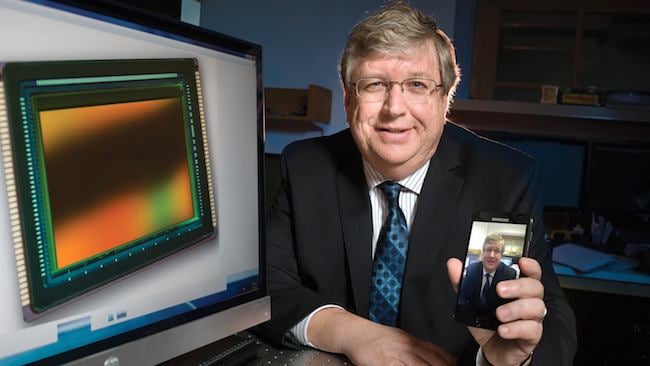

Eric Fossum: Counting photons and now counting awards

A multinational team of engineers has just taken home the Queen Elizabeth Prize for Engineering for their work on digital imaging sensors, and one of them is now working on a groundbreaking single-photon camera, although it is likely decades away from practical use.

There isn't a single global event like the Oscars for people who've made useful widgets, although there probably should be. The engineering world doesn't usually make nearly as much fuss about its achievements as, say, film and TV people. One thing that does happen every two years, however, is the Queen Elizabeth Prize for Engineering, a million-pound award which "celebrates a ground-breaking innovation in engineering." Despite its firmly British origins, the 2017 prize has just been awarded to a multinational team in recognition of its work on imaging sensors over the last several decades.

Meet the team

One of the four winners of the 2017 QEPrize is Eric Fossum, a professor at the Thayer School of Engineering at Dartmouth College. He's interesting for several reasons, including some new thinking which we'll talk about in detail below. Fossum already enjoyed considerable success as the principal inventor of modern CMOS imaging sensors, specifically developing a way to transfer the accumulated charge away from each pixel, a considerable advance on the then state of the art, which had been formalised in the late 1960s.

The second awardee is George Smith, co-inventor (with the late Willard Boyle) of the charge-coupled device (CCD), which replaced vacuum-tube image sensors and was itself later largely replaced by Fossum's CMOS technology. Smith received a quarter share of the 2009 Nobel Prize in Physics for his work on imaging sensors and holds an honorary fellowship of the Royal Photographic Society, among many other honours.

The third, Nobukazu Teranishi, is a professor at the University of Hyogo and Shizuoka University. His most significant work resulted in the pinned (not to be confused with PIN) photodiode architecture which forms the actual light-sensitive component in modern sensors. Teranishi previously worked for NEC and Panasonic and has been honoured by both the Royal Photographic Society and the Photographic Society of America, as well as the IEEE.

Finally, Michael Tompsett built the first ever camera with a solid-state colour sensor for the English Electric Valve Company in the early 1970s. Tompsett has been a Fellow of the Institute of Electrical and Electronics Engineers (IEEE) since 1987. It is, to put it mildly, an exceptionally good group, and the 2017 Prize will only add to its already glowing collection of accolades.

Counting photons

Fossum's most recent work is intended to improve the capture rate of imaging sensors – the proportion of photons they actually detect, as opposed to those which pass through unnoticed. This would improve sensitivity, noise floor and dynamic range. One of Fossum's papers, published last summer, describes a quanta image sensor (QIS) made up of a huge number of sensitive sites, each intended to detect a single photon and to do so at very high rates. A single site would not, on its own, produce a useful image: light is quantised (hence quantum theory) into indivisible chunks of energy – that is, photons – so each site can only report whether a photon arrived or not.
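
To make the "present or absent" point concrete, here is a minimal Python/NumPy sketch of a single readout of a small jot array. It assumes ideal jots and Poisson-distributed photon arrivals and ignores dark counts and read noise; `read_jots` and its parameters are hypothetical names for illustration, not taken from Fossum's paper.

```python
import numpy as np

def read_jots(mean_photons, shape, rng):
    """Simulate one readout of an array of single-bit jots.

    Photon arrivals at each jot are drawn from a Poisson distribution with
    the given mean (an assumption of this sketch). A single-bit jot can only
    report whether at least one photon arrived, so every output value is
    0 (black) or 1 (white).
    """
    photons = rng.poisson(mean_photons, size=shape)
    return (photons > 0).astype(np.uint8)

rng = np.random.default_rng(0)
field = read_jots(0.5, (4, 8), rng)  # on average half a photon per jot
print(field)
```

However many photons actually land on a jot during the read, the output is a single bit, which is why one readout on its own looks like noise rather than a picture.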

An imaging sensor whose sites can each detect only a single photon would produce an image made entirely of single-bit pixels, either full black or full white. The idea of the QIS is to use a very high resolution sensor, then average large areas of these individual photon detectors, which the paper calls jots, over a controllable time interval to create conventional pixels. Fossum's paper talks about sensors with a billion jots, read at rates of up to a thousand samples (not really frames) per second. Because averaging divides both the spatial and the temporal resolution down a long way before a viewable image emerges, numbers this large are unavoidable, but stored uncompressed they would produce a data stream of 125 gigabytes per second (a billion one-bit samples, a thousand times a second, is a trillion bits per second). The paper does mention the need for schemes to reduce this to something more manageable.
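
The simplest way to turn jots into conventional pixels is to add up the 1s in fixed blocks of space and time. The sketch below does exactly that with plain box summation; the block sizes, the toy 30% firing rate and the name `bin_jots` are illustrative assumptions, and the paper discusses other averaging schemes. It also checks the 125 GB/s figure for a billion jots read a thousand times a second.

```python
import numpy as np

def bin_jots(jot_cube, k_t, k_y, k_x):
    """Sum a (reads, rows, cols) stack of binary jot readouts into pixels.

    Each output pixel counts the photons detected in one k_t x k_y x k_x
    block of jot readouts, so it takes integer values from 0 to
    k_t * k_y * k_x rather than just 0 or 1. Partial blocks at the edges
    are trimmed off.
    """
    t, h, w = jot_cube.shape
    t, h, w = t - t % k_t, h - h % k_y, w - w % k_x
    cube = jot_cube[:t, :h, :w].astype(np.uint32)
    blocks = cube.reshape(t // k_t, k_t, h // k_y, k_y, w // k_x, k_x)
    return blocks.sum(axis=(1, 3, 5))

# Toy example: 16 reads of a 64 x 64 jot array, each jot firing 30% of the time.
rng = np.random.default_rng(1)
jot_cube = (rng.random((16, 64, 64)) < 0.3).astype(np.uint8)
pixels = bin_jots(jot_cube, 16, 8, 8)  # one 8 x 8 "frame" of photon counts
print(pixels.shape)  # (1, 8, 8)

# Raw data rate: 1e9 one-bit jots, each read 1,000 times a second.
jots = 1_000_000_000
reads_per_second = 1_000
bytes_per_second = jots * reads_per_second / 8  # one bit per jot per read
print(f"{bytes_per_second / 1e9:.0f} GB/s")  # 125 GB/s
```

Here 16 reads of 64 × 64 jots (65,536 bits) collapse into just 64 pixels, which shows why the jot count and read rate have to be so high to end up with a usefully sized image.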

There's also discussion of various approaches to averaging the binary photon data down into conventional pixels. A big part of the idea, in general, is to record the single-photon data at the time of shooting and to process it intelligently later. That processing could do all sorts of things, such as reducing aliasing in high-contrast areas (by averaging overlapping areas) while minimising noise in low-contrast ones (by averaging larger areas). We could take as long as we liked arriving at whatever image looks best to human eyes. To some extent, this is already done on productions such as music videos, which might shoot high frame rate 4K and deliver conventional frame rate HD, using the extra data to finesse motion and framing choices in postproduction, safe in the knowledge that scaling down generally improves image quality rather than harming it.
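
One way to picture "small overlapping windows where there's detail, big windows where there isn't" is a per-pixel switch between two sliding-window averages. This is a toy sketch of our own, not the paper's method: the window sizes, the 0.2 threshold and the use of Michelson contrast, (max − min) / (max + min), as the local contrast measure are all arbitrary assumptions, and `adaptive_average` is a hypothetical name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _windows(img, k):
    """All overlapping k x k neighbourhoods of img (k odd), edge-padded so
    that one window is centred on every pixel."""
    pad = k // 2
    return sliding_window_view(np.pad(img, pad, mode="edge"), (k, k))

def adaptive_average(counts, small=3, large=9, contrast_threshold=0.2):
    """Per-pixel choice between a small and a large overlapping box average.

    Where the large neighbourhood is nearly uniform (low Michelson contrast),
    use the large average to suppress noise; elsewhere keep the small one so
    edges are not smeared across.
    """
    counts = np.asarray(counts, dtype=float)
    fine = _windows(counts, small).mean(axis=(-2, -1))
    big = _windows(counts, large)
    coarse = big.mean(axis=(-2, -1))
    hi, lo = big.max(axis=(-2, -1)), big.min(axis=(-2, -1))
    contrast = (hi - lo) / (hi + lo + 1e-9)  # epsilon avoids 0/0 in black areas
    return np.where(contrast > contrast_threshold, fine, coarse)

# A hard vertical edge: photon counts of 10 on the left, 100 on the right.
step = np.tile(np.where(np.arange(20) < 10, 10.0, 100.0), (20, 1))
out = adaptive_average(step)
print(out[10, 6:13])
```

Only the two columns touching the edge get softened, by the small window; flat regions get the large, low-noise average. On real low-light jot data the contrast estimate would itself be noisy, so a practical scheme would need something more robust.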

Why it's important

It's important to realise that the purpose is not specifically to create a camera which could shoot in incredibly low light levels, although it could, nor is it about creating spectacularly high resolution, although again, it could. Much of the intent seems to be about the flexibility to choose post-processing techniques to suit the goals of a particular project. The paper discusses exposure response (which resembles film's, for various mathematical reasons) alongside noise and dynamic range, and seems very aware of the things that make cinematographers and photographers happy.
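
One of those mathematical reasons can be sketched in a few lines, assuming the simplest case: ideal single-bit jots, Poisson photon arrivals averaging H photons per jot per read, and no dark counts or read noise. A jot reads 0 only if no photon arrives, which happens with probability exp(−H), so the expected fraction of 1s is 1 − exp(−H). That is roughly linear at low exposure and rolls off smoothly towards saturation, giving a toe-and-shoulder curve on a log-exposure axis reminiscent of film. The function names below are hypothetical.

```python
import numpy as np

def bit_density(H):
    """Expected fraction of single-bit jots that read 1 at quanta exposure H.

    With Poisson arrivals averaging H photons per jot per read, a jot reads 0
    only if no photon arrives, which happens with probability exp(-H).
    """
    return 1.0 - np.exp(-np.asarray(H, dtype=float))

def estimate_exposure(ones, total):
    """Invert bit_density: estimate H from `ones` 1-readings out of `total`.

    Diverges as ones approaches total, i.e. when the jots saturate.
    """
    return -np.log1p(-ones / total)

# Roughly linear at low exposure, compressing smoothly towards saturation.
for H in (0.01, 0.1, 1.0, 3.0, 10.0):
    print(f"H = {H:5.2f}  fraction of 1s = {float(bit_density(H)):.4f}")

# Round trip on simulated data: a million jot reads at H = 1.
rng = np.random.default_rng(2)
ones = int((rng.poisson(1.0, size=1_000_000) > 0).sum())
H_est = estimate_exposure(ones, 1_000_000)
print(f"estimated H = {H_est:.3f}")
```

Inverting the curve recovers the underlying exposure from a simple count of 1s, at least until nearly every jot has fired.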

Much as it's not our place to question the work of the Thayer School of Engineering, the one concern with this approach seems to be that things (the camera, the subject) may move between these proposed thousandth-of-a-second, single-photon snapshots. In some ways, this raises problems similar to those that occur with the sequential high dynamic range modes implemented in existing cameras, and the paper does mention this. Much depends on the effective duty cycle of the sensor and how much it misses between snapshots.

There is no unequivocal claim that any of this is yet buildable. Single-photon avalanche diodes (SPADs) are mentioned and the paper reinforces the idea that any reasonable candidate would need to be manufacturable on the sort of production lines used for current CMOS sensors. Either way, we're certainly years – a decade, maybe two – from seeing a camera with a little holographic "Quanta Image Sensor" logo on the side, but it might be worth the wait.