In our continuing feature, Red Shark Technical Editor, Phil Rhodes, delves into the complexities of the Academy Color Encoding System (ACES). Part Two looks at how the system works.

This is the problem that ACES attempts to solve, and the process is therefore one of standardisation. Camera manufacturers are encouraged to provide what the Academy calls an input device transform (IDT), which takes the output of the camera, usually with the proviso that the camera is in a known configuration, and transforms it into the ACES internal working colourspace. This requires information from the camera manufacturer (or, possibly, some very careful work with test charts) because the ACES colourspace is intended to represent the actual light levels in the scene, as opposed to a more normal image storage regime which might store values that would produce a pleasing-looking image if routed directly to a display.

ACES therefore stores images in a way that the standard calls scene-referred, but which might also be called linear light, a term we've used before in discussions of linear and logarithmic luma encoding. Completely linear image encoding like this is fairly wasteful of data space, but it is well suited to being manipulated later, and certain types of computer arithmetic, which we'll discuss below, actually suit it quite well.
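As a rough illustration of why linear encoding is wasteful, here is a minimal Python sketch. It assumes a plain unsigned-integer linear encoding, which is a simplification for the sake of the arithmetic and not the storage format ACES specifies, and it counts how many code values land in each stop below peak. The function name `codes_per_stop` is made up for this example.

```python
def codes_per_stop(bit_depth, stops):
    """Count the integer code values a linear encoding spends on each stop.

    Stop 0 is the brightest stop (from half of peak up to peak), stop 1 is
    the one below it, and so on. Each stop down halves the light level, so
    in a linear encoding it also halves the number of available codes.
    """
    peak = 2 ** bit_depth  # one past the highest code value
    counts = []
    for s in range(stops):
        upper = peak >> s          # top of this stop (exclusive)
        lower = peak >> (s + 1)    # bottom of this stop (inclusive)
        counts.append(upper - lower)
    return counts


# 10-bit linear: half of all codes describe the single brightest stop.
print(codes_per_stop(10, 6))  # [512, 256, 128, 64, 32, 16]
```

The brightest stop gets half of all the available values, while the shadows are left with a handful, which is the sense in which purely linear integer storage spends data where it is least needed.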

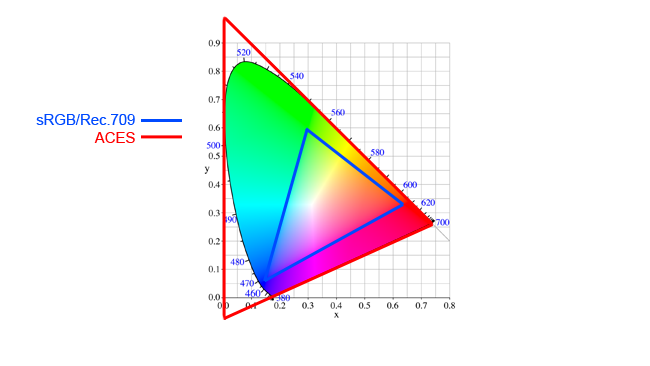

The other crucial thing about the ACES colourspace is that, unlike sRGB or Rec. 709 or even Adobe RGB, it is capable of representing all the colours that the human visual system can detect. RGB-based colourspaces define a triangle on a CIE diagram, with the primaries at the corners. The result is, broadly speaking, that colours within that triangle can be represented by that colourspace. ACES defines RGB primaries (or, well, sort of RGB primaries, about which more below) such that the resulting triangle covers the entire visual area of the CIE diagram. Rec. 709 and sRGB, which use the same primaries, are far short of being able to represent everything we can see. ACES, on the other hand, can represent everything we can see, and many things we can't.
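The triangle idea can be sketched in a few lines of Python. This is only an illustration: the primaries are the published CIE 1931 xy chromaticities for Rec. 709 and for the ACES primaries, the test point is an approximate chromaticity for a 520 nm spectral green, and the helper names `_cross` and `in_gamut` are invented for this example.

```python
# CIE 1931 xy chromaticities of the (red, green, blue) primaries.
REC709 = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]
ACES = [(0.7347, 0.2653), (0.0, 1.0), (0.0001, -0.0770)]


def _cross(o, a, b):
    """Z component of the cross product of vectors o->a and o->b."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def in_gamut(xy, primaries):
    """Return True if chromaticity xy lies inside (or on) the primaries' triangle.

    The point is inside when it sits on the same side of all three edges,
    i.e. the three cross products do not have mixed signs.
    """
    r, g, b = primaries
    d1 = _cross(r, g, xy)
    d2 = _cross(g, b, xy)
    d3 = _cross(b, r, xy)
    has_negative = d1 < 0 or d2 < 0 or d3 < 0
    has_positive = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_negative and has_positive)


spectral_green = (0.0743, 0.8338)  # roughly 520 nm on the spectral locus
print(in_gamut(spectral_green, REC709))  # False
print(in_gamut(spectral_green, ACES))    # True
```

A saturated green that we can plainly see falls outside the Rec. 709 triangle but inside the ACES one. Note that this only tests chromaticity; it says nothing about brightness.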

It's important not to get too giddy here – the fact that a camera system has an ACES-compatible output mode (which is to say there is an IDT for it in a given mode) does not mean it can record everything inside the red triangle. Things outside the coloured horseshoe aren't really colours in the conventional sense. But ACES itself is capable of representing anything we can shoot now and, hopefully, anything we could usefully shoot in future, in a consistent and standardised way. Just to make this clearer, let's look at an example of what “out of gamut” really looks like.

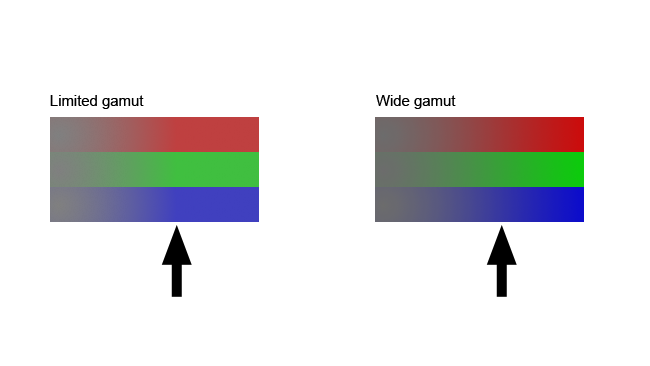

The effect has been emphasised for clarity here, but you might still have to look closely. In both colour blocks, the saturation ramps up from zero at the left, to 100%. With the limited-gamut example on the left, the colours stop getting more intense roughly at the point where the black arrow is. On the right, they don't just get brighter: the colour actually gets deeper and more saturated to the right of the arrow. The peak red is more ruby; the deepest blue reaches more toward the indigo. This sort of clipping is more subtle than clipping of overexposed areas, but it's still a bit ugly.

Things to be aware of

So, the expanded gamut of ACES (as well as that of things like Adobe RGB, Kodak ProPhoto, and others) is great. There are just two things to be aware of. First, the notional “red”, “green” and “blue” primaries that ACES defines are not, with the possible exception of the red, real colours – they exist outside the human visual range, in a mathematical sense only. The blue, in particular, actually has a negative coordinate, meaning it's quite literally off the chart. Even so, the fact that they are still notionally red, green and blue in terms of their position relative to the real, viewable colours they encode means that things like grading operations behave as they would in any other RGB colourspace, even though it will never be possible to build a monitor capable of displaying the ACES colourspace natively.

The other issue is that because the range of colour is so wide, values must be stored with extra precision. Eight-bit images can store 256 levels of red, green or blue per pixel, which looks OK when we're encoding a Rec. 709 image, where the difference between colours is limited by the standard. Scale that up to an ACES image, however, and the graduations of colour would become far too coarse, especially if we also consider the need to modify the image with grading and other operations which reduce precision with the inevitable small mathematical errors of digital arithmetic.

Because of this, the Academy has specified that images in the ACES space should be stored as 16-bit half-floats, a binary floating-point format which gives up some of the precision with which large numbers are stored in exchange for the ability to store them at all; it is a compromise between the storage cost of larger number formats and the limited range of smaller ones. When ACES data is stored, usually in OpenEXR format, it'll therefore be twice the size of otherwise equivalent 8-bit data. Cameras don't really seem to be intended to record ACES data directly; rather, they record a more conventional 10-bit log encoding which is suited for later conversion to ACES data, so it's perfectly valid to use a 10-bit recording and work on it later in ACES.
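To get a feel for the half-float trade-off, here is a small Python sketch using the standard library's `struct` module, whose `'e'` format code packs IEEE 754 16-bit floats. The helper name `to_half` is invented for this example, and the sample values are arbitrary.

```python
import struct


def to_half(value):
    """Round-trip a Python float through a 16-bit half-float."""
    return struct.unpack('<e', struct.pack('<e', value))[0]


# Each half-float occupies two bytes, twice the size of an 8-bit sample.
print(struct.calcsize('<e'))   # 2

# The largest finite half-float: a far wider range than 0 to 255.
print(to_half(65504.0))        # 65504.0

# Near 1.0 the steps between representable values are fine (2**-10)...
print(to_half(1.0 + 2 ** -10) == 1.0 + 2 ** -10)  # True

# ...but near 1000 they have grown to 0.5, so 1000.3 lands on 1000.5.
print(to_half(1000.3))         # 1000.5
```

The step between adjacent values grows with the size of the number, so precision is roughly constant in relative terms, which tends to suit linear-light data where each stop doubles the value.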

In the third and final part of this piece, Phil Rhodes delves into the complexities of the Academy Color Encoding System (ACES) and we look at how it's working out in the real world.
