iPad Retina

More than anything, Apple has been responsible for raising the resolution of our displays, and this is a good thing.

Apple has been responsible for pushing the envelope, not necessarily of what is possible, but of what consumers expect. They've set standards for other companies to match or exceed. It's not so much a matter of originality as of what you might be tempted to call arrogance, but which you might more accurately (and less emotively) call justified confidence.

It's happened several times. Think about the first generation of iMacs, which dropped floppy drives. Think about the iPhone. Nothing like it had ever been tried commercially before, and yet, within a year or so, most phone companies had a product that was a black rectangle with virtually no visible controls.

The same happened with tablets. I've owned a couple from the pre-iPad days, and they were, collectively, a dog's dinner. Apple doesn't always get it right: I still have an Apple Newton MessagePad 2100, which still works perfectly, which is to say not very well. But, considering it had 2 megabytes of RAM, amongst other underwhelming specifications (but still cost £700), it did well to work at all.

When the iPad came out, not only was it a clean and simple-looking device, it had one deal-making feature: you didn't have to use a stylus. Everything was geared towards using a finger as the pointing device. That changed everything.

But while all these headline-grabbing products made us look at and for products in a different way, there's one that is almost invisible to the casual viewer, but which is almost as big a revolution as all the others put together: high screen resolutions.

I've always paid close attention to screen resolutions, and, until recently, they've always seemed inadequate. In the early days of personal computing, too detailed a screen would have overtaxed the feeble processors that powered our laptops and desktops. So it was probably a good thing that we didn't have Quad HD screens back then. Phones either had four lines of LCD dot matrix, or extremely small colour screens, which started appearing around the year 2000.

PC resolutions stayed around XGA (1024 x 768) for an agonisingly long time. You could get higher resolutions, but only with expensive and exotic graphics cards. XGA is a terribly low resolution for media applications: digital audio workstations and non-linear editors (NLEs) struggle with a paucity of pixels.

Around ten years ago we started to see full HD screens, which were a big improvement, of course, but if you look at a 24-inch computer monitor running at 1920 x 1080, you don't have to look very closely to see individual pixels making text look blocky.

And then came the "Retina" display on the iPhone. This was Apple making waves. It didn't just make the resolution a bit better; it doubled it (that is, it doubled the linear resolution, which meant quadrupling the number of pixels).
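To put numbers on that: the iPhone 3GS had a 480 x 320 screen, while the iPhone 4, the first iPhone with a Retina display, packed 960 x 640 into the same 3.5-inch size. This short Python sketch just does the arithmetic; the helper name is made up for illustration.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a width x height display (hypothetical helper)."""
    return width * height


before = pixel_count(480, 320)   # iPhone 3GS
after = pixel_count(960, 640)    # iPhone 4, the first Retina iPhone

print(before)            # 153600
print(after)             # 614400
print(after // before)   # 4: twice the pixels each way, four times as many in total
```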

"Retina" moved to MacBook Pros (I'm using one now) and to iPads. More recently it's moved to the iPad Mini, which has a stupendous resolution on a quite petite display. And just a few weeks ago, Apple revealed their 5K iMac, with a simply beautiful 27-inch display where, at normal viewing distances and without a magnifying glass, no pixels are visible at all.
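A handy way to compare displays of different sizes is pixel density in pixels per inch (ppi): the number of pixels along the diagonal divided by the diagonal length in inches. The sketch below compares the 24-inch full HD monitor mentioned earlier with the 5K iMac's 5120 x 2880 panel. It assumes the nominal diagonal sizes, so results can differ slightly from manufacturers' quoted figures, and the function name is hypothetical.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density: diagonal length in pixels divided by diagonal length in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


full_hd_24 = pixels_per_inch(1920, 1080, 24)   # 24-inch full HD monitor
imac_5k = pixels_per_inch(5120, 2880, 27)      # 27-inch 5K iMac

print(f"24-inch 1920 x 1080: {full_hd_24:.0f} ppi")   # 92 ppi
print(f"27-inch 5120 x 2880: {imac_5k:.0f} ppi")      # 218 ppi
```

More than doubling the density is what takes the pixels from obvious to invisible at the same viewing distance.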

I've been using an iPad Mini Retina for a few days and I have to say it's a revelation, and not just because I can't see the pixels. It's actually because of all the extra detail I can see, and because of the lack of aliasing.

The detail is obvious. You can see it particularly in serif typefaces, where all the little curls and overhangs aren't merely hinted at: they're actually there. It is more like reading print on paper than reading from a screen.

But it's the lack of aliasing that makes the biggest difference. If you're not sure what I mean by "aliasing", here's a brief explanation.

Imagine a vertical line, one pixel wide. As long as it remains vertical (or horizontal for that matter) it will look perfect, because it's made from a line of pixels, one on top of the other.

Now, imagine that same line, but this time, not quite vertical. What happens? How is a display that is made up of square pixels supposed to deal with that? Mostly, it doesn't. If you shift the base of the line one pixel to the right, the only way to depict it is to show the top half of the line as a column of vertically stacked pixels, and the bottom half as another vertical stack, offset by one pixel. In other words, you get two lines, with a break in the middle. If the line leans slightly more, so that it traverses more horizontal pixels, it will be broken into more discrete vertical segments.

The effect of this is, frankly, horrible. But the more pixels you have, the better it gets, and at Retina resolutions, it is very good indeed.
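To see the stair-stepping concretely, here is a minimal Python sketch that draws a nearly vertical line the naive way, choosing exactly one pixel per row with no anti-aliasing. The function names are hypothetical, chosen for illustration; `#` marks a lit pixel.

```python
def rasterize_near_vertical(height: int, dx: int) -> list[int]:
    """For each row, return the single column nearest to an ideal line whose
    bottom end sits dx pixels to the right of its top end (no anti-aliasing)."""
    columns = []
    for y in range(height):
        exact_x = dx * y / (height - 1)     # ideal, continuous horizontal position
        columns.append(int(exact_x + 0.5))  # snap to the nearest whole pixel
    return columns


def draw(columns: list[int]) -> str:
    """Render one text row per entry: '#' for the lit pixel, '.' elsewhere."""
    width = max(columns) + 1
    return "\n".join(
        "".join("#" if x == col else "." for x in range(width)) for col in columns
    )


# An 8-pixel-tall line whose base is shifted one pixel to the right.
print(draw(rasterize_near_vertical(height=8, dx=1)))
```

This prints `#.` four times and then `.#` four times: two separate four-pixel columns with a break in the middle. With `dx=3`, the same 8-row line splits into four two-pixel segments.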

There are ways to "anti-alias" the problem, but they're not ideal. You have to calculate intermediate pixels in partial shades, so that a pixel the line only half covers is drawn at roughly half intensity. It does a good job, and gets rid of the worst of the visual nastiness, but it doesn't provide any extra detail. Only more pixels, as in a Retina display, can do that.
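The half-shades idea can be sketched in a few lines. This simplified version, similar in spirit to Xiaolin Wu's line algorithm but not a full implementation of it, splits each row's brightness between the two columns either side of the line's exact position. The function name is hypothetical.

```python
import math


def antialias_near_vertical(height: int, dx: int) -> list[list[float]]:
    """Shade each row by sharing one pixel's worth of brightness between the
    two columns either side of the line's exact horizontal position."""
    width = dx + 2                          # room for the right-hand neighbour
    rows = []
    for y in range(height):
        exact_x = dx * y / (height - 1)     # ideal, continuous position
        left = math.floor(exact_x)
        frac = exact_x - left               # how far the line sits towards the next column
        row = [0.0] * width
        row[left] += 1.0 - frac             # the nearer column gets the larger share
        row[left + 1] += frac
        rows.append(row)
    return rows


for row in antialias_near_vertical(height=8, dx=1):
    print(" ".join(f"{v:.2f}" for v in row))
```

Each row still adds up to exactly one pixel's worth of brightness and still encodes only a single position per row: the steps are disguised as gradual shading, but no new detail appears.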

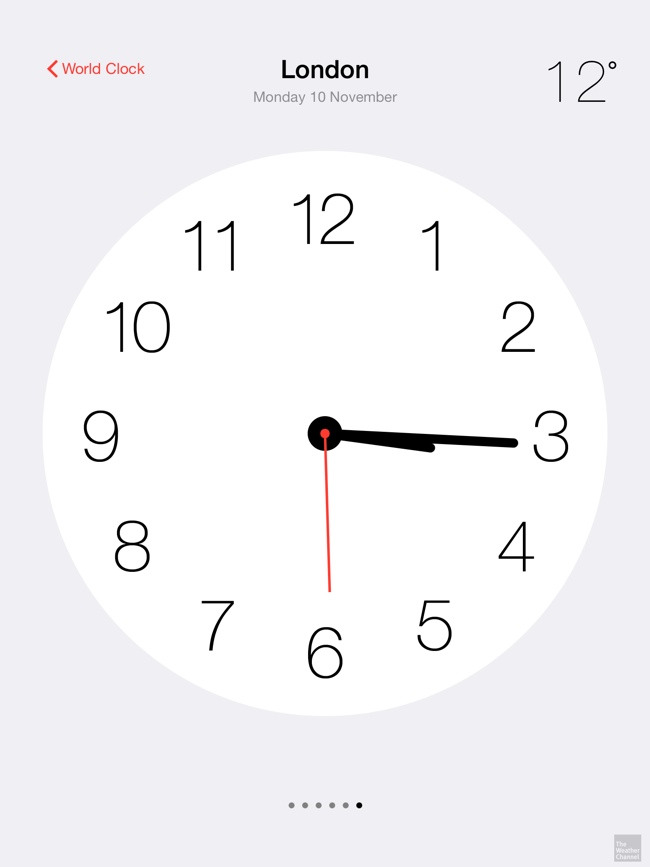

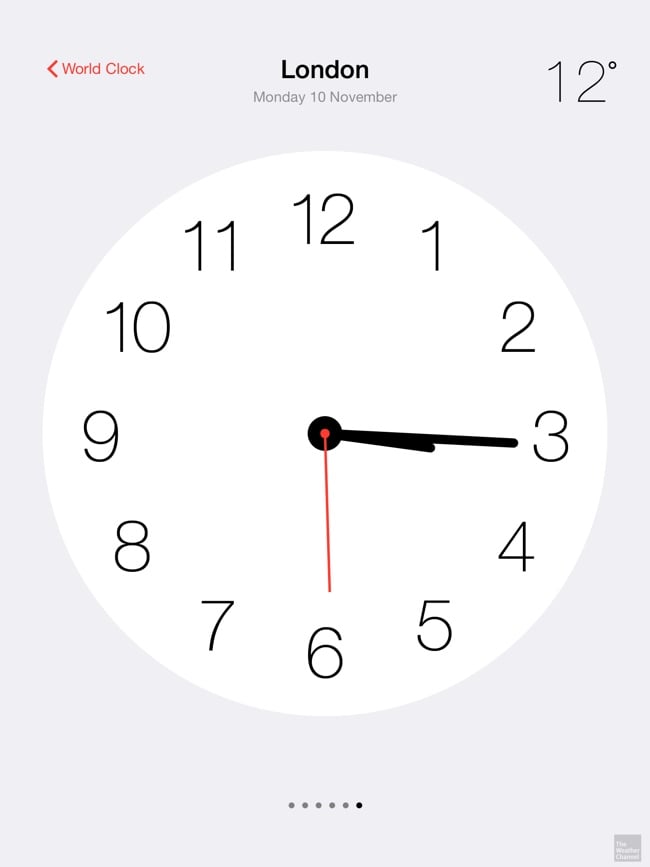

The iPad comes with a "World Clock" app, which has a number of traditional analogue clock faces, complete with a thin red moving second hand. On a Retina display, you can't see any aliasing whatsoever. If you look at the image, you can see that there is some artificial anti-aliasing in play here, but the combination of this and the high resolution results in some of the smoothest, cleanest diagonal motion I've ever seen on a screen.

All of this has made me wonder what high resolution means for video. Obviously, if a diagonal line is captured with a lower-resolution sensor, nothing apart from anti-aliasing or a low-pass filter (and most transmission and compression systems can be regarded as low-pass filters) is going to smooth out the notches in the diagonal line. But with higher resolutions, the pixel grid is finer, and lines like the second hand on the clock will look smoother.

And in a way, this is quite a profound realisation.

Because almost nothing in nature is a straight line. And when there are straight lines (like the horizon: yes, I know it's curved, but from our usual viewpoint it looks straight), they are rarely aligned with the pixel grid.

That means that everything we watch suffers from aliasing to some extent, and therefore everything we watch could benefit from a higher resolution. So far, so true, and, it has to be said, so obvious.

But think about this:

If a thin diagonal line can display aliasing even on a Retina display, what we're seeing is visual distortion on a screen that's not supposed to show any. Diagonal lines magnify the downsides of having a rectangular pixel grid.

Most of nature, as we've said, is not made of straight lines, but when you zoom in on curved objects, at some point small sections of the curve come to resemble straight lines (isn't this how calculus works?). And at that point, they will show aliasing as well.
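A bit of geometry backs up that intuition. For a circular arc of radius $R$, the greatest gap $h$ between the arc and a chord of length $c$ (the sagitta) is:

$$
h = R - \sqrt{R^2 - \left(\tfrac{c}{2}\right)^2} \approx \frac{c^2}{8R} \qquad \text{for } c \ll R
$$

So halving the length of a section of curve cuts its departure from a straight line to roughly a quarter; short enough sections behave, as far as the pixel grid is concerned, like straight lines.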

Where is this train of thought leading?

Well, it may be that, just as there are benefits to sampling audio well above the rate needed to reproduce audible frequencies, extremely high resolution video, even though we're way past the point where we can discern individual pixels, will add a somewhat intangible but significant sense of quality to an image.
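Computer graphics has a direct counterpart to oversampling audio: supersampling, where you take many samples per output pixel and average them down. The one-dimensional Python sketch below (a hypothetical helper illustrating the rendering technique, not any particular camera's processing) estimates how much of a single pixel lies to the right of an edge at x = 0.3; the exact answer is 0.7.

```python
def supersample_pixel(edge_x: float, n: int) -> float:
    """Estimate the fraction of a one-pixel-wide cell (spanning 0..1) that lies
    to the right of a vertical edge at edge_x, using n evenly spaced sub-samples."""
    centres = [(i + 0.5) / n for i in range(n)]        # sub-sample positions
    inside = sum(1 for c in centres if c >= edge_x)   # sub-samples right of the edge
    return inside / n


for n in (1, 2, 4, 16, 256):
    print(f"{n:>3} sub-samples: coverage ~ {supersample_pixel(0.3, n):.4f}")
```

With one sample the pixel is simply on or off (1.0); with four it is 0.75; with 256 it is within a thousandth of the true 0.7. Sampling far above the final resolution gives each final pixel a more accurate value, even though the viewer never sees the extra samples.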

Tags: Technology