Replay: Cables are one of those items that quite often appear way overpriced for what they are. But in video, there's much more to that piece of wire than you might think.

As long as there have been cables, there’s been someone willing to take a cable, dip it in liquid nitrogen and polish it on a maiden’s knee, then sell it for 17 times the going rate. This sort of chicanery serves to make most people cautious about different and expensive cables bearing extravagant claims of specialness, usually quite rightly.

Perhaps the only really widespread consumer deployment of fibre - and though many CD and Minidisc players have it, it's not clear how many use it.

It’s taken until quite recently to create a situation where the need for careful cable engineering has started to reach a peak, even for comparatively everyday links between a camera and monitor or vision mixer. We’ve been reliant on coaxial cables for decades to create reasonable-quality links between pieces of video equipment, even right back to the days of analogue standard definition video, but coax had become a straightforward and well-established technology by the time SDI appeared. The first digital video protocols were designed specifically to allow existing studio infrastructure, based on the same cabling that had been used for analogue video, to be used with SDI.

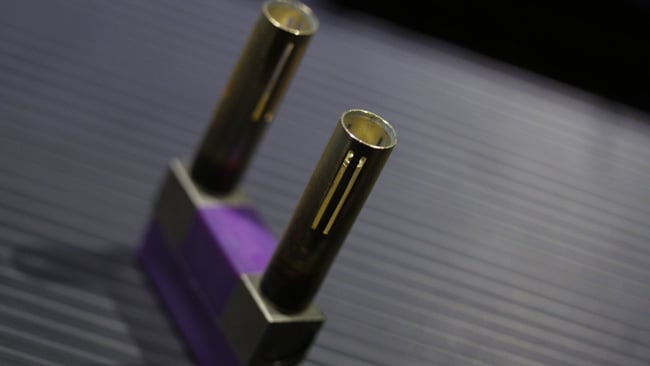

The trusty BNC is good to perhaps a few gigahertz, with appropriate electronic compensation. More requires lots of cleverness.

With HD-SDI, it’s pretty common to hear that special low-capacitance coaxial cables are required. Anyone who’s tried it will probably be aware that ancient, 1970s-vintage cables intended for component or composite video will work perfectly well, at least over short distances. HD-SDI is supposed to go 100 metres under ideal circumstances, which is significantly less than a properly engineered composite video link will do, though that’s a natural enough concomitant of the extra resolution. In the end, all video cables are designed to have a 75-ohm impedance (a concept which I discussed in detail here). If we know roughly how impedance works, it should be fairly clear why low-capacitance cable makes fast signals more intelligible, extending the range.

And SDI is fast. Due to some idiosyncrasies of how the underlying format was designed, the frequency of the signal that’s going down the cables is more or less the same as the bitrate, so standard-def variants specified at 270Mbps had signal frequencies around 270MHz. That’s not too scary, though the HD-SDI and 3G-SDI variants tend to hit roughly 1.5 and 3GHz respectively. At that point, receivers must usually implement special measures to provide active cable equalisation and amplification and, with that in place, coaxial cables with BNC connectors are regularly used at frequencies up to several gigahertz.
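To put some numbers on "fast", here is a rough sketch that works out how long a single bit lasts on the wire at each SDI rate. The figures in the table are the commonly quoted nominal line rates, and the function name is just an illustrative choice, not anything from a standard or library.

```python
# Commonly quoted nominal SDI line rates, in bits per second.
NOMINAL_RATES_BPS = {
    "SD-SDI": 270e6,
    "HD-SDI": 1.485e9,
    "3G-SDI": 2.97e9,
    "12G-SDI": 11.88e9,
}


def unit_interval_ps(bit_rate_bps):
    """Duration of one bit on the wire, in picoseconds (hypothetical helper)."""
    return 1e12 / bit_rate_bps


for name, rate in NOMINAL_RATES_BPS.items():
    print(f"{name}: one bit lasts roughly {unit_interval_ps(rate):.0f} ps")
```

The point is only the trend: each step up leaves the receiver with a smaller slice of time in which to decide whether it is looking at a one or a zero, which is why equalisation matters more as the rate climbs.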

The ADAT light-pipe specification uses the same connectors and fibre as TOSLINK and is still just as overspecified

Demands, though, keep increasing. We’re now regularly seeing 12Gbps SDI deployed in support of high frame-rate, high-resolution material at high bit-depths and, in practical circumstances, it tends to be rather less reliable than slower standards, especially over long distances. This won’t be a surprise for many electronics specialists who might start sucking air through their teeth at any suggestion of putting signals this fast down a flexible copper cable at all. Questions over whether we need yet more, for 8K high frame-rate, high dynamic range material, are for another day, but there are some quite serious questions to answer about how that need will be met if we want to do it.

Optical fibres are already widely deployed in specific areas of television infrastructure but uptake has been, perhaps surprisingly, slow, especially given it is not as new a technique as we might assume. The earliest research was done in the 1960s, following on from earlier, more conventional optical applications of very thin pieces of drawn glass. Perhaps the most widely deployed application is (or was) TOSLINK, the digital audio interconnection protocol developed by Toshiba, which really didn’t demand anything like the bandwidth of which even thick multi-mode fibre was capable. Since the days of Minidisc players, though, we’ve seen more than a few attempts to deploy fibre optics into both domestic and professional technologies that haven’t really gone anywhere. Thunderbolt, for instance, was originally called Light Peak and conceived as an optical format.

MUSA patch bays were mainly designed for standard definition video and could just about be pressed into service with SDI. Is anyone still using these?

Fibre has seen success in very specific circumstances – as long-distance underwater cables and on outside broadcast trucks where weight and distance are of concern. In the main, though, it’s struggled, particularly in applications just like Thunderbolt where there’s a desire to send power over the same cable, which is possible in a mixed glass-copper construction but massively complicates manufacturing. Costs in the equipment that uses these cables are also increased, simply by the need to translate what are, inevitably, very fast electrical signals into optical ones and back again at each end of the link. Optical cables can also be slightly fragile. In 2019, it’s still quite common to have entire facilities without a fragment of fibre anywhere but the computer networks, which is probably not what the futurists of 20 years ago might have predicted.

Still, if we’re going to start demanding 8K desktop monitors with 120Hz, 10-bit, un-subsampled RGB signal formats to suit, the technical realities might well conspire to put fibre optics into much more use, because the BNCs and coaxial cable we’ve been sweating for years are frankly starting to run out of performance.
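For a sense of scale, the arithmetic behind that hypothetical 8K monitor can be sketched in a few lines. This assumes "8K" means 7680×4320 pixels, counts only the active picture (no blanking, audio or link overhead), and uses 11.88Gbps as the nominal 12G-SDI line rate; the function and variable names are illustrative only.

```python
import math


def active_video_bitrate(width, height, fps, bits_per_component, components=3):
    """Raw active-picture bit rate in bits per second (no blanking or overhead)."""
    return width * height * fps * bits_per_component * components


# Assumption: "8K" taken as 7680x4320, 120 frames per second, 10-bit RGB.
rate_8k = active_video_bitrate(7680, 4320, 120, 10)

# Assumption: nominal 12G-SDI line rate in bits per second.
NOMINAL_12G_SDI = 11.88e9

# Lower bound on parallel links, since a real link carries less picture
# payload than its full line rate.
links_needed = math.ceil(rate_8k / NOMINAL_12G_SDI)

print(f"{rate_8k / 1e9:.1f} Gbps of active picture")
print(f"at least {links_needed} nominal 12G links")
```

Even on these generous assumptions the picture alone comes to well over a hundred gigabits per second, roughly ten times what a single 12G link is specified for.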
