Replay: When you are next bemoaning a particular camera's colour performance, spare a thought for what's involved in getting that colour to the screen in the first place. It's complicated.

Ordinarily, it’s not a great idea to work on the basis that high-end cameras have some sort of magical capability that causes anything put before them to look wonderful. As is famously the case, the last few percentage points of improvement in any piece of engineering can cause the price to skyrocket. It’s also very easy to overlook the fact that the best-funded camera departments are most likely to be found on productions with the best-funded production design, which does make a big difference.

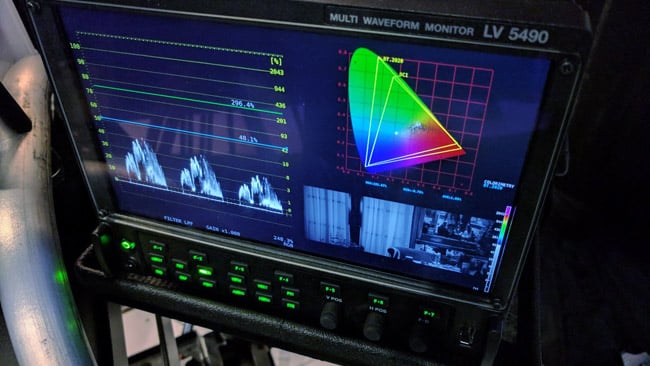

The outer yellow triangle is Rec. 2020. Its primaries sit on the very edge of the visible range, so practical equipment struggles to cover it fully, but various cameras have more or less saturated dyes.

With all that in mind, my subject for today is a characteristic that’s difficult to measure but often strongly suspected of being present mainly in higher-end cameras. It’s an engineering compromise that trades away sensitivity and, to some extent, resolution in return for rich, finely discriminating colour reproduction on Bayer sensors. In that sense, it’s an aspect of high-end cameras that really does make things look nicer.

Most people are familiar with the idea that sensors in modern cameras have a pattern of red, green and blue filters on them, and that various, often proprietary, algorithms are used to reconstruct a full-resolution image. What’s important about this, and almost never discussed, is the exact choice of colour dyes deposited on the sensor at manufacture. That choice has profound and permanent implications for the capability of the sensor, and it isn’t necessarily obvious what the best choice is.
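
As a rough sketch of what that filter pattern means for the raw data, the Python below builds colour-filter masks and keeps just one colour value per photosite. It assumes NumPy and the common RGGB arrangement (real sensors may start the pattern on a different corner), and the function names `bayer_masks` and `mosaic` are hypothetical, chosen for illustration. A demosaicing algorithm then has to estimate the two missing values at every site.

```python
import numpy as np

def bayer_masks(height, width):
    """Boolean masks for an assumed RGGB layout: red on even rows and
    even columns, blue on odd rows and odd columns, green elsewhere."""
    rows, cols = np.indices((height, width))
    red = (rows % 2 == 0) & (cols % 2 == 0)
    blue = (rows % 2 == 1) & (cols % 2 == 1)
    green = ~(red | blue)
    return red, green, blue

def mosaic(rgb):
    """Simulate the sensor: each photosite keeps only the one colour
    value that its filter lets through."""
    h, w, _ = rgb.shape
    red, green, blue = bayer_masks(h, w)
    raw = np.zeros((h, w), dtype=rgb.dtype)
    raw[red] = rgb[..., 0][red]
    raw[green] = rgb[..., 1][green]
    raw[blue] = rgb[..., 2][blue]
    return raw

# Half the photosites are green; red and blue get a quarter each.
r, g, b = bayer_masks(4, 4)
print(r.mean(), g.mean(), b.mean())
```

Doubling up on green is the usual Bayer choice because green carries most of the brightness detail the eye is sensitive to.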

An obvious choice?

The most obvious choice would simply be to pick very saturated colours, so the camera can clearly see the difference between colours. To put it another way, that would put the camera’s primaries close to the edge of a CIE diagram. Just as a monitor with really saturated primaries can reproduce a wide range of colours, a camera with really saturated primaries can see a wide range of colours. Pick too pale a set of filters and a very saturated object will simply register as the most saturated colour the camera can distinguish and, all other things being equal, be rendered as a paler shade than it really was. Real-world pictures tend not to contain much extreme saturation, but there are notorious examples of things that do, like tropical water and LED lights, and we’d like to be able to record them properly.
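
To make the monitor half of that analogy concrete, here is a minimal Python sketch that checks whether a CIE 1931 xy chromaticity falls inside the triangle formed by a set of primaries. The Rec. 709 and Rec. 2020 primary coordinates are the published ITU-R values; the test colour (0.20, 0.70) is a made-up saturated green, and the function names are hypothetical. It’s a simplification: a camera’s filters don’t define a neat triangle like this, because cameras generally don’t respond to colour in exactly the way the eye does.

```python
# CIE 1931 xy chromaticities of the red, green and blue primaries.
REC709 = ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060))
REC2020 = ((0.708, 0.292), (0.170, 0.797), (0.131, 0.046))

def _edge_side(p, a, b):
    """Signed area term telling which side of the line a->b point p is on."""
    return (p[0] - b[0]) * (a[1] - b[1]) - (a[0] - b[0]) * (p[1] - b[1])

def inside_gamut(xy, primaries):
    """True if chromaticity xy lies inside (or on) the primaries' triangle."""
    r, g, b = primaries
    d1 = _edge_side(xy, r, g)
    d2 = _edge_side(xy, g, b)
    d3 = _edge_side(xy, b, r)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    # Inside means the point is on the same side of all three edges.
    return not (has_neg and has_pos)

saturated_green = (0.20, 0.70)  # hypothetical example colour
print("Rec. 709: ", inside_gamut(saturated_green, REC709))
print("Rec. 2020:", inside_gamut(saturated_green, REC2020))
```

A colour that falls outside the narrower triangle can only be shown at that gamut’s edge, which is the monitor equivalent of the paler rendering described above.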

Ursa shoots Venice

Unfortunately, using very saturated filters costs us two things. For a start, the filters on a Bayer sensor are conventional absorptive filters; they absorb everything they don’t pass, and that light is lost. The deeper the colours, the more light they absorb and the less sensitive the camera becomes overall. That’s a fairly simple relationship to understand, and it’s the first part of the engineering compromise we’re talking about.
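
That sensitivity cost can be illustrated with a toy model. The sketch below assumes idealised Gaussian filter curves centred at 610, 540 and 460 nm (invented values, not any real sensor’s dyes) and an equal-energy light source, then works out how much of that light an RGGB mosaic passes when all three filters share a given width. The function names are hypothetical. Narrower, more saturated filters pass less, and the difference is reported in stops.

```python
import math

def gaussian_filter(wavelength_nm, centre_nm, sigma_nm):
    """Idealised filter transmission: 1.0 at the centre, Gaussian fall-off."""
    return math.exp(-0.5 * ((wavelength_nm - centre_nm) / sigma_nm) ** 2)

def relative_sensitivity(sigma_nm, centres=(610.0, 540.0, 460.0)):
    """Average fraction of a flat 400-700 nm spectrum passed by an RGGB
    mosaic whose three filters all have width sigma_nm."""
    wavelengths = range(400, 701)  # 1 nm steps
    weights = (0.25, 0.5, 0.25)    # RGGB: half the photosites are green
    total = 0.0
    for centre, weight in zip(centres, weights):
        passed = sum(gaussian_filter(w, centre, sigma_nm) for w in wavelengths)
        total += weight * passed / len(wavelengths)
    return total

narrow = relative_sensitivity(20.0)  # deep, saturated dyes
broad = relative_sensitivity(50.0)   # paler, overlapping dyes
print(f"light lost by the narrower dyes: {math.log2(broad / narrow):.2f} stops")
```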

The second part is slightly more complex, and it involves the way that Bayer sensor data is converted into a finished frame. If the sensor is imaging a white (or at least colourless) object, that object will be seen by photosites of all three colours, and the mathematics can take advantage of that so that the resolution at which we see the object is quite close to the resolution of the whole sensor. For colourless objects, the resolution of a (say) 4K Bayer sensor can get surprisingly close to 4K.

For brightly coloured objects, things are different. With very saturated blue and green filters, a very saturated red object is only really visible to one in four photosites on the sensor. Those red photosites sit on every other row and every other column, so for that object your 4K camera has just become something closer to a 2K camera. For this reason, most Bayer sensors use less saturated dyes which overlap, so that (at least) the green-filtered photosites see a little of that red object, and the blue ones possibly a hint of it as well.
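
The sketch below is a rough numerical illustration of that point, in Python with NumPy. Both response matrices are invented for the example: `NARROW` stands in for filters that don’t overlap at all, and `OVERLAPPING` for paler dyes that leak a little of each colour into the neighbouring channels. Counting the photosites that record any meaningful signal (the 1% threshold is arbitrary) is only a crude proxy for resolution, but it shows the pattern: a grey patch registers everywhere, a pure red patch under non-overlapping filters registers on a quarter of the sites, and overlapping filters let every site contribute something.

```python
import numpy as np

# Hypothetical responses: row = filter (R, G, B), column = how strongly
# that filter responds to pure red, green and blue light.
NARROW = np.eye(3)
OVERLAPPING = np.array([[0.80, 0.15, 0.05],
                        [0.20, 0.70, 0.10],
                        [0.05, 0.20, 0.75]])

def rggb_channel_index(height, width):
    """Which filter (0=R, 1=G, 2=B) sits over each photosite, RGGB layout."""
    rows, cols = np.indices((height, width))
    idx = np.ones((height, width), dtype=int)  # green by default
    idx[(rows % 2 == 0) & (cols % 2 == 0)] = 0
    idx[(rows % 2 == 1) & (cols % 2 == 1)] = 2
    return idx

def responding_fraction(colour, response, height=8, width=8, threshold=0.01):
    """Fraction of photosites recording more than `threshold` of signal
    from a uniform patch of `colour` (linear RGB)."""
    idx = rggb_channel_index(height, width)
    per_filter = response @ np.asarray(colour, dtype=float)
    signal = per_filter[idx]  # look up each photosite's filter output
    return float((signal > threshold).mean())

# Red photosites across one row of a 4096-wide sensor: 2048, not 1024.
red_sites_per_row = int((rggb_channel_index(2, 4096)[0] == 0).sum())

print("grey, narrow:      ", responding_fraction((0.5, 0.5, 0.5), NARROW))
print("red, narrow:       ", responding_fraction((1.0, 0.0, 0.0), NARROW))
print("red, overlapping:  ", responding_fraction((1.0, 0.0, 0.0), OVERLAPPING))
print("red sites per row: ", red_sites_per_row)
```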

Sing along now: red and yellow and pink and green, purple and orange and bluuuuue

That allows the mathematics to do a much better job of reconstructing a full-resolution image, but it makes colours harder to tell apart, and the resulting image is low in saturation. It’s possible to add more, of course, using broadly the same sort of process as turning up the saturation control in Photoshop. Usually, the approach is tailored to the behaviour of the camera in question. Broadcast engineers call this “matrixing”: the input red, green and blue values are multiplied through a table of constants to become the output red, green and blue. Cinema camera manufacturers call it “colour science”, and it can get quite complicated, but it’s mainly a matter of recovering realistic brightness and saturation from Bayer data without making things look odd.
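
Here is a minimal sketch of the matrixing idea in Python with NumPy, under a deliberately simple assumption: each filter passes its own primary at weight 1 - 2k and leaks each of the other two at weight k. The hypothetical `crosstalk_matrix` builds that overlap, the sensor’s reading of a pure red comes out paler, and multiplying by the inverse matrix (the “table of constants”) restores it. Real colour science fits its matrices to measurements and usually targets a particular output colour space, so treat this as an illustration of the principle only.

```python
import numpy as np

def crosstalk_matrix(k):
    """Hypothetical symmetric overlap: each filter passes its own primary at
    weight 1 - 2k and each of the other two primaries at weight k."""
    return (1 - 3 * k) * np.eye(3) + k * np.ones((3, 3))

overlap = crosstalk_matrix(0.2)
true_red = np.array([1.0, 0.0, 0.0])

# What the overlapping filters record: a paler red, about [0.6, 0.2, 0.2].
camera_rgb = overlap @ true_red

# Matrixing: multiply by a fixed 3x3 table of constants, here the inverse
# of the overlap, which has negative off-diagonal terms.
correction = np.linalg.inv(overlap)
restored = correction @ camera_rgb  # back to roughly [1.0, 0.0, 0.0]

print(np.round(correction, 3))
print(np.round(restored, 3))
```

Because each row of the overlap matrix sums to 1, so does each row of its inverse, which is why neutral greys pass through unchanged while saturated colours are pushed apart.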

It is, inevitably, an imperfect process. If the colour filters overlap a lot, at some point it becomes ambiguous what colour things really are. The human eye works the same way and, in theory, has the same problem, but the way in which filters, mathematics and the human brain interact can lead to awkward failures, such as certain cameras being unable to reliably tell blue from purple, as we discovered in March 2018.
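
One way to put a number on “ambiguous” is the condition number of the overlap matrix, which bounds how much the correcting matrix can amplify small relative errors such as noise. The sketch below uses a hypothetical symmetric overlap model (an assumption, not a measured sensor), with weight 1 - 2k on the diagonal and k elsewhere. For this model the condition number works out to 1 / (1 - 3k), so it climbs steeply as the overlap approaches k = 1/3, where all three channels respond identically and colour can no longer be recovered at all.

```python
import numpy as np

def crosstalk_matrix(k):
    """Hypothetical overlap model: weight 1 - 2k on the diagonal, k elsewhere."""
    return (1 - 3 * k) * np.eye(3) + k * np.ones((3, 3))

for k in (0.0, 0.1, 0.2, 0.3, 0.33):
    # Worst-case factor by which relative errors can grow when the
    # overlap is undone by matrixing.
    cond = np.linalg.cond(crosstalk_matrix(k))
    print(f"overlap k={k:.2f}: condition number {cond:.1f}")
```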

LEDs, such as this simulated neon strip, can achieve hugely saturated colours. Many cameras just can't see them.

All of which means that making Bayer cameras see accurate colour is difficult. A camera that uses denser dyes might see better colour, but might also suffer reduced sensitivity and, from a commercial point of view, that’s a tough call. Effective ISO is an easy number to promote. Colour accuracy is much harder. Yes, cameras might be advertised as supporting a certain colour space, but that generally refers to the approximate coverage of the sensor’s output after processing and to the encoding used in the output files. There is no simple numeric measure of camera colour performance that can be promoted the way ISO can.

So, to go back to our opening thoughts, will all of this make the difference between an image that looks like a soaring cinematic great and an embarrassing snapshot? Nope, but it is detectable and it is visible, and it’s possibly one of the reasons that some less expensive cameras are often faster yet somehow lack the fundamental richness of their more expensive cousins.
