Modern sensors are capable of incredible feats of image capture. One of the biggest developments is dual gain architecture. But what is it, and what does it do?

Most people are more or less aware of how a modern imaging sensor works. Photons come in, strike the silicon and are turned into electrons, which are then moved out of the sensor into the electronics that turn them into, say, a ProRes movie file. That's actually a reasonably accurate, if very abbreviated, overview of how it works, though current cameras have started to push beyond what the simpler approaches can manage. High frame rate, high dynamic range and high resolution add up to a lot of information that's hard to handle.

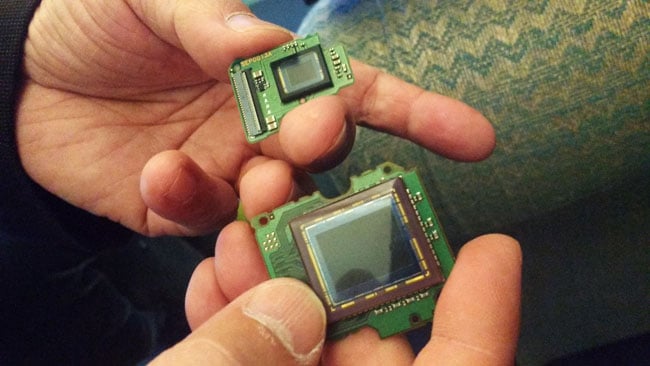

Imaging sensors in their natural state

This might turn out to be the start of an irregular series on the developments in sensor tech and how modern cameras achieve such astounding results.

Dual gain technology

Our first subject is the technology referred to as dual gain readout, perhaps most famously applied in the ALEV III sensor of ARRI's Alexa. Great as it is, the Alexa sensor is no longer at the bleeding edge of development, and dual gain is increasingly seen on other cameras too, particularly those built around sensors such as the Fairchild LTN4625, which is (presumably) the sensor used in Blackmagic's 4.6K cameras. Dual gain readout is a big part of how modern sensors achieve such high dynamic range.

Ursa Mini 4.6K sensor with dual-gain readout

The problem is that the actual signals created by photons hitting the sensor are tiny and can't be digitised directly. To put some numbers on it, one pixel (or, more properly, photosite) on an LTN4625 can hold about 40,000 electrons before it reaches maximum value and clips. A current of one amp represents 6.2×10¹⁸ electrons per second, which is, um, well, a 19-digit number. The signal from an imaging sensor is tiny by comparison, so we need amplifiers.
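To make that comparison concrete, here is a small sketch of the arithmetic. It uses only the exact SI value of the elementary charge and the 40,000-electron full-well figure quoted above; the constant and function names are ours, chosen for illustration.

```python
# Elementary charge in coulombs (exact by SI definition since 2019).
ELEMENTARY_CHARGE_C = 1.602176634e-19

# Full-well capacity quoted in the text for one LTN4625 photosite.
FULL_WELL_ELECTRONS = 40_000


def electrons_per_second(current_amps: float) -> float:
    """Number of electrons per second carried by a given current."""
    return current_amps / ELEMENTARY_CHARGE_C


def charge_coulombs(electrons: int) -> float:
    """Total charge carried by a given number of electrons."""
    return electrons * ELEMENTARY_CHARGE_C


one_amp = electrons_per_second(1.0)
full_well = charge_coulombs(FULL_WELL_ELECTRONS)

print(f"1 A = {one_amp:.2e} electrons/s")  # 6.24e+18
print(f"Full well = {full_well:.2e} C")    # 6.41e-15
```

A completely full photosite holds only about 6.4×10⁻¹⁵ coulombs, roughly a millionth of a billionth of what one amp delivers in a second, which is why the signal has to be amplified before it can be measured.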

In most designs, it's at this stage that things like the camera's ISO sensitivity are set. The silicon, after all, converts photons to electrons in a way that's fixed and controlled by the laws of physics; there's no way of making the camera more or less sensitive in the way we'd change film stocks. Sensitivity is controlled by amplifiers that are generally part of the CMOS imaging sensor itself. Those amplifiers need to handle tiny signals without adding interference which would create noise in the image.
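Why does the choice of gain matter so much? The following idealised sketch models one gain stage feeding an analogue-to-digital converter (ADC). The 12-bit ADC and the two gain values are hypothetical, picked only to illustrate the trade-off, and noise is deliberately left out. Low gain keeps bright signals in range but rounds faint ones away; high gain resolves faint signals but clips bright ones.

```python
# Hypothetical parameters chosen for illustration, not taken from any datasheet.
ADC_BITS = 12
ADC_MAX = 2**ADC_BITS - 1  # 4095 for a 12-bit converter
FULL_WELL_ELECTRONS = 40_000


def digitise(electrons: float, gain_dn_per_e: float) -> int:
    """Amplify a photosite signal, then quantise and clip it like an ideal ADC.

    gain_dn_per_e is the overall gain in digital numbers (DN) per electron.
    Noise is ignored so that only the gain/quantisation trade-off shows.
    """
    dn = round(electrons * gain_dn_per_e)
    return max(0, min(ADC_MAX, dn))


# Low gain: the whole full well just fits into the ADC range (~0.102 DN/e).
low_gain = ADC_MAX / FULL_WELL_ELECTRONS
# High gain: one DN per electron, so faint signals are resolved.
high_gain = 1.0

for electrons in (3, 30, 3_000, 30_000):
    print(electrons, digitise(electrons, low_gain), digitise(electrons, high_gain))
# 3 0 3
# 30 3 30
# 3000 307 3000
# 30000 3071 4095
```

With a single gain setting, the camera has to choose which end of the range to sacrifice.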

Fast Amplifiers

What makes that particularly difficult is that these amplifiers also need to run very, very quickly. The LTN4625 has roughly 12 million pixels, and Fairchild's specification suggests that it will shoot at up to 240 frames per second, depending on the sort of device it's built into. That's nearly three billion pixels a second (2,866,544,640, to be precise), each of which must be amplified accurately enough to be digitised with high precision, without blurring into the signal of its neighbours. All of that happens on the imaging sensor itself, and it would be essentially impossible if every pixel were piped through a single point.
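The pixel-rate figure can be reproduced with a few lines of arithmetic. The 4608 × 2592 active area below is an assumption on our part, but it yields exactly the 2,866,544,640 quoted above. The sketch also compares the time budget per pixel if everything went through one point against the budget if each column had its own readout chain (also an assumption for this calculation).

```python
# Assumed active resolution: 4608 x 2592 reproduces the figure in the text.
WIDTH, HEIGHT = 4608, 2592
FPS = 240

pixels_per_frame = WIDTH * HEIGHT
pixels_per_second = pixels_per_frame * FPS

# Time available per pixel if every pixel went through a single readout point.
single_point_ns = 1e9 / pixels_per_second

# If every column had its own readout chain, each would see HEIGHT samples per frame.
samples_per_column_per_second = HEIGHT * FPS
per_column_ns = 1e9 / samples_per_column_per_second

print(pixels_per_frame)                  # 11943936
print(pixels_per_second)                 # 2866544640
print(f"{single_point_ns:.2f} ns")       # 0.35 ns
print(samples_per_column_per_second)     # 622080
print(f"{per_column_ns:.0f} ns")         # 1608 ns
```

Splitting the work across columns turns a third of a nanosecond per pixel into well over a microsecond, which is far more realistic for a low-noise amplifier.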

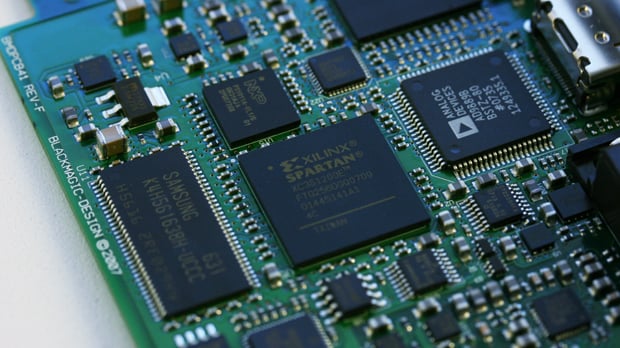

Most of the fundamental image processing, including amplification and digitisation, is done on the sensor these days, not in external electronics like these

The Fairchild sensor we've been using as an example, the LTN4625, has separate amplifiers for each of its roughly 4,600 columns of pixels, but even then it's very difficult, practically speaking, to make an amplifier that's fast and quiet and has high dynamic range. It's much easier to connect the same signal to two amplifiers: a high-gain one that looks at the low-energy (“dark”) parts of the signal and a low-gain one that looks at the high-energy (“bright”) parts. It's rather like shooting two images of the same scene at different exposures for a high dynamic range still, but with the advantage that both are captured at the same moment, which is, of course, essential for moving image work.
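How the two readouts become one value is specific to each manufacturer and isn't described here, but a common textbook approach can be sketched: scale the high-gain sample down by the gain ratio and use it for shadows and midtones, then cross-fade to the low-gain sample as the high-gain channel approaches clipping. The gain ratio of 16, the 12-bit range and the blend thresholds are all hypothetical, and both inputs are assumed to have had their black level removed already.

```python
def merge_dual_gain(
    high_dn: float,
    low_dn: float,
    gain_ratio: float = 16.0,   # hypothetical: high gain is 16x the low gain
    adc_max: int = 4095,        # hypothetical 12-bit converter
    blend_start: float = 0.75,  # start trusting low gain at 75% of full scale
    blend_end: float = 0.95,    # rely on low gain only above 95% of full scale
) -> float:
    """Combine a high-gain and a low-gain sample into one value on the low-gain scale.

    Inputs are assumed to be black-level-subtracted readings of the same photosite.
    """
    high_as_low = high_dn / gain_ratio  # bring the high-gain sample onto the low-gain scale
    start = blend_start * adc_max
    end = blend_end * adc_max

    if high_dn <= start:
        # Shadows and midtones: the high-gain sample has the better signal-to-noise.
        return high_as_low
    if high_dn >= end:
        # Highlights: the high-gain channel is at or near clipping.
        return float(low_dn)

    # Transition zone: cross-fade linearly to avoid a visible seam.
    w = (high_dn - start) / (end - start)
    return (1.0 - w) * high_as_low + w * low_dn


print(merge_dual_gain(800, 50))     # 50.0 (shadow: high-gain sample used)
print(merge_dual_gain(4095, 2000))  # 2000.0 (highlight: low-gain sample used)
```

The cross-fade only works if the two channels agree in the overlap region; if the assumed gain ratio or offsets are slightly wrong, the seam shows up as a band of tone, which is where calibration comes in.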

This approach does mean that quite a lot of electronic calibration is required to make the images look good: the combination of the “bright” and “dark” data needs to be managed carefully. Often, that's part of the job done by a lens-capped “calibrate” cycle on a modern camera.
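We don't know exactly what any particular camera's calibrate routine does, but one thing a lens-capped cycle can plausibly measure is the black level of each column amplifier, since with no light reaching the sensor any signal is offset or noise. The sketch below averages a few dark frames into one offset per column and subtracts them; on a dual gain sensor this would presumably be done separately for each gain channel. The function names and sample values are hypothetical.

```python
from statistics import mean


def column_offsets(dark_frames):
    """Average lens-capped frames into one black-level offset per column.

    dark_frames is a list of frames; each frame is a list of rows of pixel values.
    """
    n_cols = len(dark_frames[0][0])
    return [
        mean(row[c] for frame in dark_frames for row in frame)
        for c in range(n_cols)
    ]


def subtract_offsets(frame, offsets):
    """Remove the per-column black level from a frame."""
    return [[px - off for px, off in zip(row, offsets)] for row in frame]


# Two tiny hypothetical dark frames (2 rows x 3 columns) with column-to-column offsets.
dark = [
    [[64, 70, 60], [66, 72, 62]],
    [[62, 70, 58], [64, 68, 60]],
]
offsets = column_offsets(dark)
print(offsets)                                       # [64, 70, 60]
print(subtract_offsets([[1064, 1070, 1060]], offsets))  # [[1000, 1000, 1000]]
```

Without this step, small differences between column amplifiers would appear as faint vertical stripes, most visibly in the shadows.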


Sensors on silicon wafer courtesy Dave Gilbolm - Alternative Vision Corporation

And that's how we make cameras with practically supernatural dynamic range.
