Sony's VENICE produces a wonderful picture, but does the large chip improve picture geometry?

We know big chips are better for light capture and dynamic range. But does a larger chip produce a quantifiably better picture geometry than a smaller one? Can we even define what those extra qualities are? Phil Rhodes investigates whether it can be proven, or whether it is all just hot air.

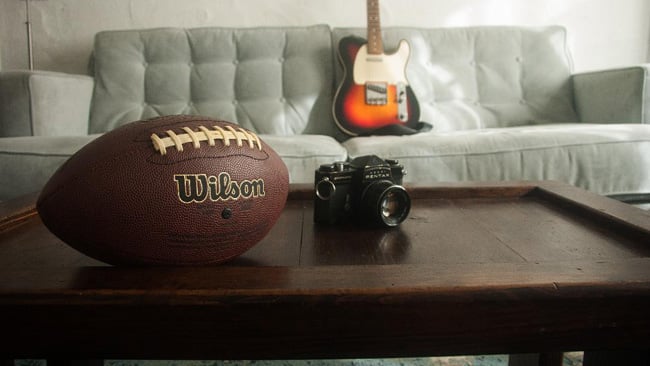

The images accompanying this article are used by kind permission of director of photography Stuart Brereton. Comparison images were combined by the author. All images have been graded to minimise colour and contrast offsets which are not the subject of this discussion. Framing differences due to tiny errors in the stated focal length of lenses and different placement of the mounting thread have been adjusted in the comparison images. The images are his; the conclusions, and any errors, are mine.

How exactly do we define roundness, as the term is currently being used in discussions of large-format cinematography?

The word is currently being thrown around a lot in many forums (by which we mean “venues for discussion,” as opposed to the more literal “web forum”). It's being used as if there's a universally agreed definition, which a quick Google suggests there isn't, but that's only part of the problem. This sort of discussion tends to degenerate into increasingly vague, ill-defined, beret-wearing and determinedly artistic assertions. The core claim is that equivalent images shot on larger or smaller sensors have subtle but powerful differences that make the larger sensor look – well – better, different, rounder? To state my position up-front, I don't think that's true, or at least not to any extent which would offend the temperament of even the most exacting director of photography.

Bigger chips handle light better

What's certainly true is that bigger chips make a difference, which is something we have talked about endlessly, as have a lot of other people. It's well enough known by now, but let's recap the good and justifiable reasons why manufacturers are doing this: bigger chips have either bigger pixels or more pixels. Bigger pixels (properly, photosites) are more likely to have photons hit them, so they're more sensitive. They can also hold more electrons, so they are better able to record bright highlights without ugly clipping.

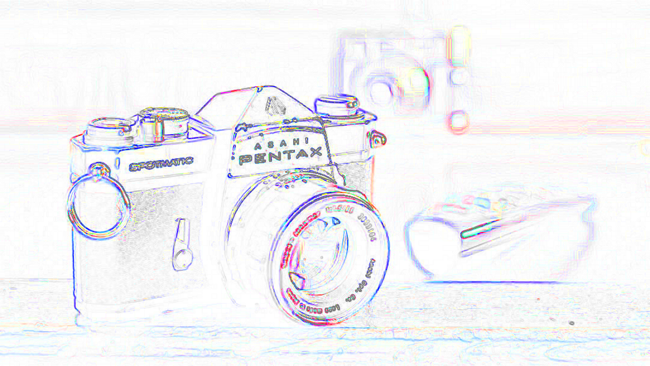

Canon 6D FF. 50mm lens @f4

Fuji XE-2S APS-C. 35mm lens @f2.5

Fuji (blue) and Canon (red) images with edge detection, overlaid. Red shows where the Canon does not precisely align with the Fuji. The two images are highly congruent.

Cameras such as Sony's Venice tend to have some combination of more, bigger pixels, and while excessive sharpness can be a bad thing, softening filters exist for a reason and the choice is nice to have. Averaging together pixels reduces both noise and resolution, though, so assuming no change to the underlying technology, the choice between bigger or more numerous pixels can be something of a zero-sum game. The shallower depth of field is not always a good thing. In fact, people seem to be overlooking just how shallow big chips get and how hard that can be to deal with, but let's not get into that, because it's a matter of opinion and of situational specifics.

Picture geometry

So, big chips are nice things to have and a popular idea, as the existence of cameras such as Alexa 65 attests. What's less obviously true is that sensor size makes any difference to the geometry of the image, absent other concerns such as depth of field and lens distortion.

Now, we should stop there and consider the fact that what's being discussed here is an almost completely synthetic situation. The question that seems to be asked is whether the bigger chip gives different results in otherwise equivalent situations. What comprises an “equivalent situation” is a matter of opinion, but it's often taken to mean a matching field of view and depth of field. Achieving that match on two different sensors requires two different lenses. It also requires different light, different sensitivity or different shutter timing, because the aperture setting will have to be different to achieve the same depth of field.
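As a rough worked sketch of what "equivalent" implies in numbers, the usual rule of thumb divides both focal length and f-number by the crop factor, meaning the ratio of the two sensor diagonals. The sensor dimensions below are nominal figures assumed for illustration, and the function names are hypothetical, not taken from any camera maker's tools.

```python
import math

# Nominal sensor dimensions in mm (assumed for illustration; real cameras vary slightly).
FULL_FRAME = (36.0, 24.0)
APS_C = (23.6, 15.6)


def crop_factor(large, small):
    """Ratio of sensor diagonals, larger sensor over smaller."""
    return math.hypot(*large) / math.hypot(*small)


def equivalent_settings(focal_mm, f_number, factor):
    """Focal length and f-number on the smaller sensor that approximately
    match the field of view and depth of field of the larger one."""
    return focal_mm / factor, f_number / factor


factor = crop_factor(FULL_FRAME, APS_C)
focal, stop = equivalent_settings(50.0, 4.0, factor)
print(f"crop factor {factor:.2f}: 50mm at f/{4.0:g} is roughly {focal:.0f}mm at f/{stop:.1f}")
```

With these assumed dimensions the result lands close to the 35mm at f2.5 pairing used for the Fuji frame in the first comparison, which is about as close as off-the-shelf lenses and aperture clicks are likely to get.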

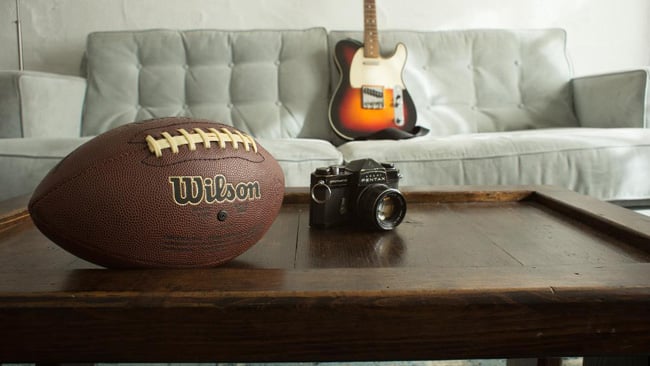

Canon EOS 6D. Vivitar 28mm prime @ f8

Canon EOS 10D. Sigma 18-55mm zoom. 18mm @f5.6

Canon EOS 10D (blue) and 6D (red) images with edge detection, overlaid. Red shows where the 10D does not align with the 6D. Differences are larger but consistent with barrel distortion.

This already encourages us to overlook things that are not, in any practical reality, likely to be easily overlooked. Let's assume we want the same motion rendering from both sensors (so we don't want to play with shutter angles). Let's even ignore the fact that we might well have picked the larger chip specifically because we wanted less depth of field. Achieving the same depth of field on an 8-perf stills frame, or at least the 16:9 image area of an 8-perf stills frame, will need much more than twice the light or sensitivity that a super-35mm frame does. This isn't a level of flexibility that's easily available to most productions. Beyond that, crank up the sensitivity and we might just have given away some or all of the image quality benefits that our bigger sensor gave us in the first place. Again, these things can become a zero-sum game, because physics is physics.
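To put a rough number on that penalty: if the f-number on the larger sensor is multiplied by the crop factor to match depth of field, the light reaching the sensor falls by the square of that factor. The sketch below assumes a round crop factor of 1.5, which is an illustrative figure only; the real value depends on which super-35mm gate dimensions are being compared, and the function name is hypothetical.

```python
import math


def exposure_penalty(factor):
    """Return (light ratio, stops) needed on the larger sensor when its
    f-number is multiplied by `factor` to match depth of field."""
    ratio = factor ** 2  # image illuminance scales with 1 / N^2
    return ratio, math.log2(ratio)


ratio, stops = exposure_penalty(1.5)  # 1.5 is an assumed round crop factor
print(f"{ratio:.2f}x the light, about {stops:.2f} stops")
```

Whatever the exact factor, the shortfall has to come from somewhere: more lamps, more gain, or a longer shutter.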

Synthetic tests

But let's overlook all those things and see if we can make this synthetic test work. Synthetic tests are OK, so long as we understand what we're testing for and how the test relates to the real world. So, if we set up a camera (it can be a stills camera) to have the same depth of field and the same field of view on a larger sensor and a smaller one, can we see any difference between the two images?

Um... well... at the risk of coming off as a philistine, no, not really, at least not beyond the fact that these images, which we're trying to make equivalent, are shot with different lenses on different sensors at a different stop in different lighting conditions or with different sensitivity. Different lenses have different characteristics, especially if they're designed to cover different sensor sizes, and may be of a completely different design. We shouldn't ignore the differences – they are there, they are visible and they might make a difference to someone. But we should understand why they're there, and not get too excited about some mysterious, ill-defined characteristic of big-chip cameras that may not exist at all and that, if it does, really serves only to distract us from much bigger issues that make a much bigger difference on most productions.

For what it's worth, many discussions of this topic have returned to the word “roundness,” which, if we look at it dispassionately, seems to refer very broadly to the opposite of “flattening,” the effect produced by shooting things on very long lenses. It's no great stretch to say that shooting facial close-ups on wide lenses has long been held to be a bad idea since it tends to exaggerate the size of the nose and distort the features. This would make “roundness” a bad thing, so perhaps that isn't what's being discussed here. At risk of seeming unnecessarily argumentative, it would seem like a good idea to figure out what it is about an image that we actually desire before we try to test out ways of achieving it. Then, perhaps, it would actually be possible to have a sensible conversation on the subject and design appropriate tests.

But that's hard to do, so get ready for a lot more hot air on the subject of how big chips just look great, somehow.
