Motion capture has been one of the prime enablers of modern CGI sequences within both film and video games. If you've ever wondered about the complexities of the process, Phil Rhodes reports back from a session he observed at Shepperton Studios.

Most people reading this will know what they're looking at here: a performer working in a motion capture facility. This is Centroid's enormous 24 by 10 by 7-metre capture space, which has been in such demand that it has occupied L stage at Shepperton Studios for the last few years. The company boasts credits on a shopping list of big shows, from Spectre to Mad Max, Godzilla, 300 and many others.

Centroid's motion capture system on Shepperton's L stage

The Shepperton facility is probably one of the largest motion capture setups in the UK, large enough to cater not only for work on the ground but also for performers flying through the air. We got to investigate on a day when it was being used by students from a visual effects course at the National Film and Television School.

It's a large facility

There are a lot of ways to track the motion of a point as it moves through space. That's the first stage of recreating the motion of an actor (or, equally, another object); working out which point represents which part of the tracked object is often a job for later. Magnetic markers moving around above sensing coils have been tried, resulting in something that works rather like a giant graphics tablet. Active-suit techniques put infra-red emitters on the performer and look for them using arrays of sensors. Mechanical encoding allows the performer to wear an outfit which detects the bending and flexing of joints. All of these techniques have different capabilities in terms of the maximum area the performer can work in, the precision with which motion is tracked and the ability to resolve difficult situations, such as two performers grappling with one another.

Motion Analysis Eagle-4 camera

Centroid's facility uses what's perhaps the classic approach: infra-red cameras with near-infra-red ring lights, designed to observe retroreflective markers on the performer. The advantages are many. First, the capture volume can be as large as the cameras can cover, and more cameras can be added reasonably easily. Second, it's absolutely referenced, so there can't be any drift of the kind that affects a mechanical device, which must work out how far a performer has moved by accumulating the results of their walking motions. Third, it's highly accurate: Centroid's crew weren't happy until the calibrated accuracy, as displayed in the user interface, was better than one millimetre. Over the roughly 27m diagonal of the volume, that's an error of less than 0.0037%.
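The 0.0037% figure is straightforward arithmetic, and a 27m span matches the corner-to-corner diagonal of a 24 × 10 × 7m box. The sketch below checks both numbers, under our own assumption (not something Centroid stated) that the diagonal is the span being quoted; the function names are illustrative only.

```python
import math


def volume_diagonal(length_m: float, width_m: float, height_m: float) -> float:
    """Straight-line distance between opposite corners of a box-shaped volume."""
    return math.sqrt(length_m**2 + width_m**2 + height_m**2)


def relative_error_percent(error_mm: float, span_m: float) -> float:
    """Express an absolute error as a percentage of the distance it is measured over."""
    return error_mm / (span_m * 1000.0) * 100.0


# Assumption: the quoted 27m is the diagonal of the 24 x 10 x 7m capture space.
diagonal = volume_diagonal(24, 10, 7)
print(f"diagonal: {diagonal:.1f} m")                                  # diagonal: 26.9 m
print(f"1 mm over 27 m: {relative_error_percent(1.0, 27.0):.4f} %")   # 0.0037 %
```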

It doesn't matter which way up the cameras are, since that's sorted out during calibration, so they're aligned to maximise coverage

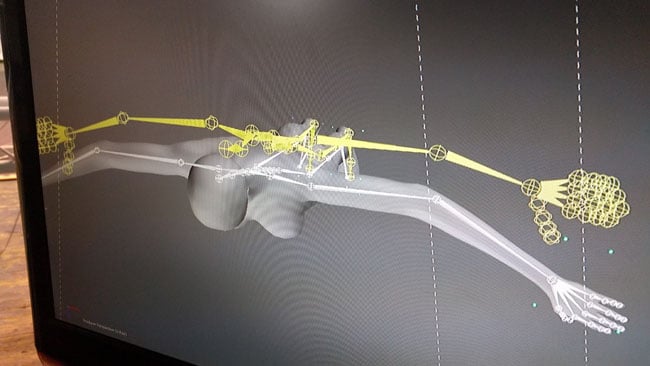

Each of the Motion Analysis Eagle cameras (up to 168 of them) is fitted with a near-infra-red pass filter to exclude visible light. Since the cameras will see each other's ring lights, as well as reflections from any specular objects taken into the capture volume, the software can mask out small areas of each camera's image to avoid false detections. The cameras have a resolution of around 4K, which provides the basis for that high level of accuracy, and signs sternly warn passers-by not to lean on the truss that supports them, since any movement of the cameras will make the captured positions inaccurate. Centroid likes to run the cameras at four times the frame rate of the intended scene, which means a 100fps capture rate for the 25fps project being worked on in these photos.
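To show why masking matters, here's a minimal sketch. It assumes each camera frame is a simple grid of one-bit pixels and that masks are rectangles; the function names are hypothetical and this isn't Motion Analysis's software. A stray ring light in the corner of the frame drags the naive centre of the bright pixels away from the marker, and masking it restores the marker's position.

```python
from typing import List, Tuple

Image = List[List[int]]            # 1 = bright pixel, 0 = dark pixel
Rect = Tuple[int, int, int, int]   # (top, left, bottom, right); bottom/right exclusive


def apply_masks(image: Image, masks: List[Rect]) -> Image:
    """Return a copy of the image with every masked rectangle forced to dark."""
    out = [row[:] for row in image]
    for top, left, bottom, right in masks:
        for y in range(max(top, 0), min(bottom, len(out))):
            for x in range(max(left, 0), min(right, len(out[y]))):
                out[y][x] = 0
    return out


def bright_centroid(image: Image) -> Tuple[float, float]:
    """Mean (x, y) of all bright pixels; assumes only one blob is left."""
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value:
                xs.append(x)
                ys.append(y)
    if not xs:
        raise ValueError("no bright pixels left after masking")
    return sum(xs) / len(xs), sum(ys) / len(ys)


frame = [[0] * 8 for _ in range(6)]
for y in (0, 1):
    for x in (0, 1):
        frame[y][x] = 1            # another camera's ring light in the corner
for y in (2, 3):
    for x in (5, 6):
        frame[y][x] = 1            # a marker reflection

print(bright_centroid(frame))                               # (3.0, 1.5): pulled off target
print(bright_centroid(apply_masks(frame, [(0, 0, 2, 2)])))  # (5.5, 2.5): the marker
```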

Both motion capture data and video of the live performance are captured in synchronisation

More than 100 4K cameras running at 100fps represent a lot of data, which is initially puzzling, because the cameras are all connected to the host workstation via Ethernet, which certainly doesn't have enough bandwidth to stream full images from all of them in real time. To make it practical, the cameras provide only a single-bit, black-or-white image with no greyscale, which is still enough to achieve those high levels of accuracy. The raw one-bit image is recorded for later processing, although there's also a real-time preview of the captured motion for the director's reference, optionally using a production-supplied mesh.
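A rough calculation shows the scale of the problem. The sketch below assumes 100 cameras, takes "4K" to mean 4096 × 2160 pixels, and compares 8-bit greyscale with one bit per pixel. Even at one bit, the raw figure is far beyond ordinary Ethernet, so the sketch also shows one plausible reason a one-bit image travels cheaply: it's almost entirely black, so each row can be described by a short list of white runs. How the Eagle cameras actually encode their output isn't covered here; run-length encoding is our illustrative assumption, and the function names are hypothetical.

```python
from typing import List, Tuple


def raw_rate_gbps(cameras: int, width: int, height: int, fps: int, bits_per_pixel: int) -> float:
    """Uncompressed aggregate data rate in gigabits per second."""
    return cameras * width * height * fps * bits_per_pixel / 1e9


def white_runs(row: List[int]) -> List[Tuple[int, int]]:
    """Encode one row of a one-bit image as (start, length) runs of white pixels."""
    runs = []
    x = 0
    while x < len(row):
        if row[x]:
            start = x
            while x < len(row) and row[x]:
                x += 1
            runs.append((start, x - start))
        else:
            x += 1
    return runs


# Assumed figures: 100 cameras, "4K" taken as 4096 x 2160, 100fps.
print(f"8-bit greyscale: {raw_rate_gbps(100, 4096, 2160, 100, 8):.0f} Gbit/s")  # 708
print(f"1-bit:           {raw_rate_gbps(100, 4096, 2160, 100, 1):.1f} Gbit/s")  # 88.5

row = [0] * 4096
row[1000:1006] = [1] * 6   # a single marker crossing this row
print(white_runs(row))     # [(1000, 6)]: 4096 pixels described by one pair
```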

Cheryl gets suited up

The calibration process makes for an early start. Shepperton railway station is no place for humans at 0700 on a Wednesday morning, but there's quite a bit of work to do to achieve that sub-millimetre accuracy. For a start, the camera setup itself needs to be characterised, which involves walking the floor of the capture area with a special calibration target carrying three retroreflective markers in an asymmetric configuration. Meanwhile, the performer is equipped with one of those special, extra-unflattering motion capture suits, which must then stay on once the performer has been calibrated. It's important that the suit fits closely so that the markers don't move around. The outfit comes as a jacket and leggings with lots of Velcro connections, but there's very little tolerance for the performer removing parts of it, since things might not go back in the same place.
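One reason for an asymmetric layout is that it leaves no doubt about which marker is which: if all three separations differ, the markers can be labelled from geometry alone, whichever way the target is turned. The sketch below illustrates that idea in 3D, assuming marker positions are already known in millimetres. Real camera calibration works from the 2D camera images and is considerably more involved, and the function name, labels and tolerance here are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]


def label_wand_markers(points: Sequence[Point], tolerance: float = 1e-3) -> Dict[str, Point]:
    """Label three target markers by the length of the side opposite each one."""
    if len(points) != 3:
        raise ValueError("expected exactly three markers")
    # The side opposite marker i is the one joining the other two markers.
    opposite = []
    for i in range(3):
        a, b = (points[j] for j in range(3) if j != i)
        opposite.append((math.dist(a, b), i))
    opposite.sort()
    lengths = [d for d, _ in opposite]
    # A symmetric layout (two equal sides) would make the labelling ambiguous.
    if lengths[1] - lengths[0] < tolerance or lengths[2] - lengths[1] < tolerance:
        raise ValueError("marker layout is too symmetric to label unambiguously")
    names = ("opposite_shortest", "opposite_middle", "opposite_longest")
    return {name: points[i] for name, (_, i) in zip(names, opposite)}


# Hypothetical target: sides of 100mm, 250mm and about 269mm.
wand = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (0.0, 250.0, 0.0)]
# The order the markers are detected in doesn't affect the labels.
labels = label_wand_markers(list(reversed(wand)))
print(labels["opposite_longest"])   # (0.0, 0.0, 0.0)
```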

Cheryl holds a T pose at the end of a take, for later reference

A corner of Centroid's stage is filled with general-purpose props and staging, specially designed for the motion capture process. Walls, for instance, are made of mesh, so that performers can collide with and react to them while the cameras see straight through. The more cameras that can see each marker, the more accurately it can be located, by averaging its apparent position as seen in each image. “Several” cameras per marker is a sensible minimum, but there's naturally a limit to what can be observed if a performer ends up on the ground.
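Combining several views is usually framed as triangulation: each camera that sees a marker defines a ray from the camera through the marker's image, and the marker is placed where those rays come closest to meeting. The sketch below is a generic least-squares version of that idea, assuming each ray is already known in stage coordinates; it isn't Motion Analysis's algorithm, and the function names are hypothetical. When the rays are noisy, each additional camera adds another constraint, which is why more views tend to give a more accurate position.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def _normalise(v: Vec) -> Vec:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def _det3(m: Sequence[Sequence[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate(rays: List[Tuple[Vec, Vec]]) -> Vec:
    """Point minimising the summed squared distance to every (origin, direction) ray."""
    if len(rays) < 2:
        raise ValueError("need at least two cameras to locate a marker")
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for origin, direction in rays:
        d = _normalise(direction)
        for i in range(3):
            for j in range(3):
                # (I - d d^T) projects onto the plane perpendicular to this ray.
                p = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += p
                b[i] += p * origin[j]
    det = _det3(a)
    if abs(det) < 1e-12:
        raise ValueError("rays are (nearly) parallel")
    # Solve A x = b by Cramer's rule: replace column k of A with b.
    result = []
    for k in range(3):
        m = [[b[i] if j == k else a[i][j] for j in range(3)] for i in range(3)]
        result.append(_det3(m) / det)
    return (result[0], result[1], result[2])


marker = (1.0, 2.0, 3.0)
cameras = [(10.0, 0.0, 0.0), (0.0, 10.0, 0.0), (0.0, 0.0, 10.0), (10.0, 10.0, 10.0)]
rays = [(c, tuple(m - o for m, o in zip(marker, c))) for c in cameras]
print(triangulate(rays))   # approximately (1.0, 2.0, 3.0)
```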

A misalignment between the provided mesh and the size of the performer is visible here

Actually using motion capture data requires a pretty good up-front idea of what the eventual character will look like. Scaling the motion data so that a (human) actor can perform for an entirely inhuman character introduces some extra complexity, but it's done all the time. In this instance, there's a visible mismatch between the size of the observed figure and the size of the provided mesh, which will need to be resolved before the final capture data can be created.
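One simple approach when the character's proportions differ from the performer's is to keep the joint rotations as captured and scale the root (hip) translation by the ratio of leg lengths, so that stride length suits the character's legs and the feet don't appear to slide. The sketch below shows only that naive step, assuming a hypothetical per-frame structure of a root position plus joint angles; production retargeting tools handle differing skeletons, foot contacts and constraints far more carefully.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec = Tuple[float, float, float]


@dataclass
class Frame:
    root_position: Vec               # hips, in metres, stage coordinates (hypothetical layout)
    joint_rotations: Dict[str, Vec]  # per-joint Euler angles in degrees (hypothetical layout)


def retarget(frames: List[Frame], performer_leg_m: float, character_leg_m: float) -> List[Frame]:
    """Scale root translation by the leg-length ratio; copy joint rotations unchanged."""
    scale = character_leg_m / performer_leg_m
    out = []
    for f in frames:
        x, y, z = f.root_position
        out.append(Frame((x * scale, y * scale, z * scale), dict(f.joint_rotations)))
    return out


take = [Frame((0.0, 0.9, 0.0), {"knee_l": (10.0, 0.0, 0.0)}),
        Frame((0.5, 0.9, 0.0), {"knee_l": (25.0, 0.0, 0.0)})]
giant = retarget(take, performer_leg_m=0.9, character_leg_m=1.8)
print(giant[1].root_position)   # (1.0, 1.8, 0.0)
```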

Watching a take replayed with a 3D preview of the final scene

Post-processing can take some time, so it's common for the final data to become available a few days after the capture session, though that's naturally still much, much faster than hand animation. The only downside, as Centroid's crew ruefully admit, is that unless facial capture is involved, there's rarely any point inviting the highly paid star to a motion capture session, so doubles of equivalent size are usually used instead.

Thanks go to Centroid, the National Film and Television School, and to performer Cheryl Burniston and director Virginia Popova for their permission to produce this article.

Title image courtesy of Shutterstock.
