Quantized rose

Phil Rhodes updates one of the most popular articles we have ever featured on RedShark, "8-bit or 10-bit? The truth may surprise you", and provides a guide to bit depth for 2016.

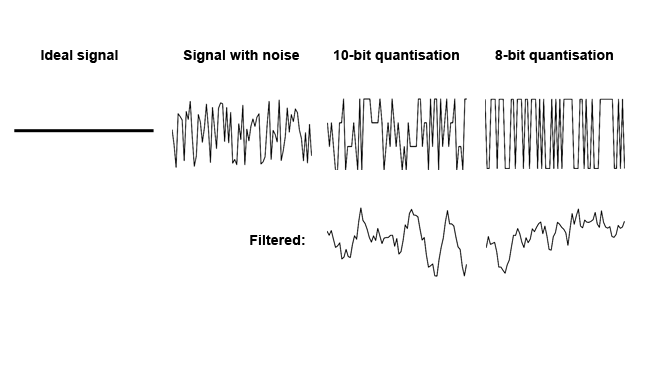

It's been a while since we first published a piece about bit depth, in which we cautiously pointed out that many cameras have enough noise in their output to make any bit depth beyond eight fairly pointless in the most common implementations. The reasoning is a relatively straightforward bit of information theory. Suppose we are recording an eight-bit signal, with 256 code values running from 0 to 255, and it carries random noise of about one code value. There's no point in storing it as a ten-bit signal: all we'd end up with is values from 0 to 1023, with random noise of about four code values. We might as well store it as an eight-bit signal, ranging from 0 to 255 with random noise of one code value. As long as the noise spans at least one code value, we can be sure we're encoding everything the signal has to offer, without using excessive storage or throwing away information.
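The arithmetic in that paragraph can be sketched in a few lines of Python. This is only an illustration: it assumes noise is measured as a standard deviation in 8-bit code values, approximates the 8-to-10-bit scale factor as exactly 4 (strictly it is 1023/255), and `noise_in_codes` is a hypothetical helper rather than any library's API.

```python
import math

def noise_in_codes(noise_8bit_codes, bits):
    """Express noise measured in 8-bit code values at another bit depth.

    Approximation: each extra bit doubles the number of code values, so the
    scale factor is taken as 2 ** (bits - 8) rather than (2**bits - 1) / 255.
    """
    return noise_8bit_codes * 2 ** (bits - 8)

for bits in (8, 10, 12):
    noise = noise_in_codes(1.0, bits)
    # log2 of the noise is roughly how many low-order bits hold nothing but noise
    print(f"{bits}-bit: {2 ** bits} code values, noise of {noise:g} codes, "
          f"about {math.log2(noise):g} bits of pure noise")
```

In other words, the two extra bits of a 10-bit recording of this hypothetical signal would be spent almost entirely on describing the noise.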

If the noise is larger than one bit, further precision is not useful

In the figure above, we see an ideal signal, the same signal contaminated with noise, and that noisy signal encoded at 10 and 8 bits. A simple filter (that is, a blur) makes it clear that we gain nothing by encoding noise with more bits.
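The experiment in the figure can be approximated numerically. The sketch below is an assumption-laden stand-in, not the method used to make the figure: it takes a smooth ramp, adds Gaussian noise of one 8-bit code value, stores it at 8 and at 10 bits, applies a simple moving-average blur, and measures how far each result lands from the blurred ideal signal.

```python
import math
import random

def quantize(x, bits):
    """Round x (nominally 0..1) to the nearest of 2**bits code values, then map back to 0..1."""
    top = 2 ** bits - 1
    code = min(max(round(x * top), 0), top)
    return code / top

def box_blur(values, radius):
    """Moving-average blur; returns only the interior samples the window fully covers."""
    width = 2 * radius + 1
    return [sum(values[i - radius:i + radius + 1]) / width
            for i in range(radius, len(values) - radius)]

def rms(a, b):
    """Root-mean-square difference between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

random.seed(1)
n, radius = 20000, 8
ideal = [0.2 + 0.6 * i / (n - 1) for i in range(n)]   # smooth ramp, kept clear of clipping
noise_sigma = 1 / 255                                  # assumption: noise of one 8-bit code value
noisy = [v + random.gauss(0.0, noise_sigma) for v in ideal]
reference = box_blur(ideal, radius)

errors = {}
for bits in (8, 10):
    stored = [quantize(v, bits) for v in noisy]
    errors[bits] = rms(box_blur(stored, radius), reference)
    print(f"{bits}-bit: RMS error after blur = {errors[bits] * 255:.3f} 8-bit code values")
```

With this much noise the two errors come out very close, because the noise itself dithers the 8-bit quantisation. Setting `noise_sigma` to zero is a quick way to see the opposite case, where the 8-bit version keeps a stepped error that the blur cannot remove.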

A recap of log

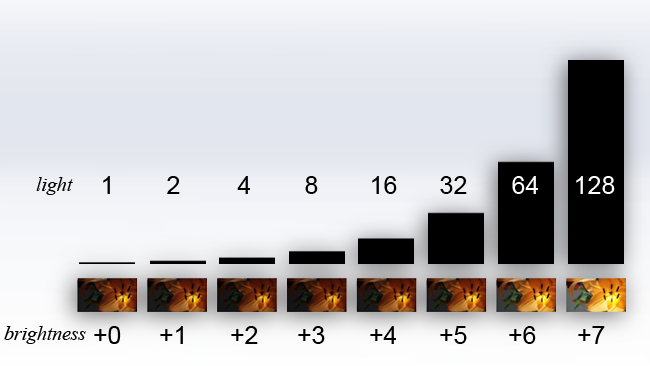

Sadly, it isn't quite that simple, because the subject is greatly complicated by differing luminance encodings, especially if we're interested in HDR material, which has more luminance information and therefore requires more bandwidth to store. To recap: in modern cameras, the data coming out of the sensor is generally a high-precision number (16 bits or more) which is, very roughly and not in absolutely all cases, a linear representation of the number of photons that hit each pixel. Double the amount of light, and we double the numerical value for the pixel. It's simple, but it's not an efficient way to store visual information, because it uses a different number of digital values for each F-stop. Because our eyes are nonlinear, each F-stop of brightness change looks like the same amount of change: the difference between f/2.8 and f/4 looks like the same step as the difference between f/4 and f/5.6. But because each stop represents a doubling of light, f/2.8 admits double the light of f/4, and four times that of f/5.6. It's (literally) an exponential increase.
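The f-stop arithmetic can be checked directly, since the light admitted by a lens scales with 1/N² for f-number N. A small sketch follows; the helper names are illustrative only. Because marked f-numbers are rounded powers of √2, f/2.8 to f/4 comes out at slightly more than one stop rather than exactly one.

```python
import math

def stops_between(f_a, f_b):
    """Stops of difference between two f-numbers; light scales with 1 / N**2."""
    return 2 * math.log2(f_b / f_a)

def light_ratio(f_a, f_b):
    """How many times more light f_a admits than f_b."""
    return (f_b / f_a) ** 2

for f_b in (4, 5.6):
    print(f"f/2.8 vs f/{f_b}: {stops_between(2.8, f_b):.2f} stops, "
          f"{light_ratio(2.8, f_b):.2f}x the light")
```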

The relationship between increasing the light, and what the results actually look like

If we store that image linearly, so that doubling the photons hitting the sensor doubles the digital value stored, we end up using the entire top half of the available digital range to store just one stop's worth of information. In an 8-bit signal, everything between peak white and one stop darker would be stored in the upper 128 values of the 256-value range. The next stop down is stored in a 64-value range, and the one below that in a 32-value range. Go down again, and we have only 16 shades of luminance to represent an entire photographic F-stop. By the eighth stop below white, the whole stop is represented by a single value: it's either on or off. No real camera system works like that; they use far more bits when working on linear images, so as to accommodate the large range of values with workable precision. To us, though, with our nonlinear eyes, each of these stops looks like the same amount of information, so it quickly becomes clear that we are using far too many values to store the brightest parts of the picture, and far too few to store the darkest.
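The halving described above is easy to tabulate. This sketch assumes an idealised linear encoding with no offsets or black level; `codes_per_stop_linear` is a hypothetical helper for illustration.

```python
def codes_per_stop_linear(bits, stops):
    """Code values spent on each stop, brightest first, when storing linear light."""
    total = 2 ** bits                    # e.g. 256 code values for 8-bit
    return [total // 2 ** (s + 1) for s in range(stops)]

print(codes_per_stop_linear(8, 8))       # [128, 64, 32, 16, 8, 4, 2, 1]
print(codes_per_stop_linear(16, 8))      # same eight stops with 16-bit linear data
```

The 16-bit case shows why sensors work at high precision: the eighth stop down still gets 256 code values rather than one.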

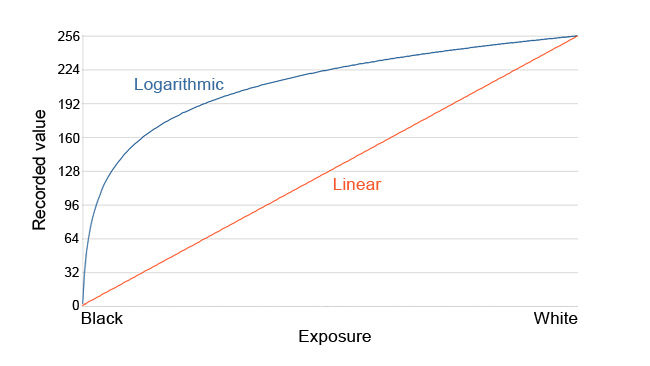

Logarithmic versus linear encoding, viewed as it would be in Photoshop's curves filter

We work around this problem by mathematically manipulating the image data. Apart from some raw camera formats, very few things store linear data. The solution is to increase the values used to represent darker areas of the image, and to shelve off that increase toward the highlights. Think of it, roughly speaking, as dragging the middle of a Photoshop curves filter upward. A true log curve is designed to make every F-stop of information occupy the same number of digital values.
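For comparison with linear storage, here is a hypothetical "pure" log curve that maps a fixed number of stops evenly onto the available code values. Real camera curves such as S-Log add a toe, offsets and other refinements, so this is a simplification for illustration, not any manufacturer's formula.

```python
import math

def log_encode(linear, bits, stops):
    """Hypothetical pure log curve: maps linear light in [2**-stops, 1] onto 0..2**bits-1."""
    top = 2 ** bits - 1
    linear = min(max(linear, 2.0 ** -stops), 1.0)   # clip below the bottom stop and above white
    return round(top * (math.log2(linear) + stops) / stops)

def codes_per_stop_log(bits, stops):
    """Code values spent on each stop, brightest first, under the pure log curve."""
    edges = [log_encode(2.0 ** -s, bits, stops) for s in range(stops + 1)]
    return [edges[i] - edges[i + 1] for i in range(stops)]

print(codes_per_stop_log(8, 8))   # roughly 32 code values for every stop
```

Where linear 8-bit storage gave the brightest stop 128 values and the darkest just one, this curve gives each of the eight stops about 32.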

Implications for bit depth

So what does this have to do with the bit depth required to adequately store an image? If we were just storing the linear data, we could observe the signal coming out of the sensor, measure its noise, and work out how many code values we needed to span the minimum to maximum values with a precision at least equal to the random variation due to noise. But logarithmic encoding takes that high-precision sensor data and brightens the shadows, which is where noise tends to lurk, and that makes things more complicated. If we make the luminance encoding nonlinear, we also make the noise profile nonlinear: amplify the shadows, and they look noisier than the highlights, so we will always end up using more bits to encode the shadows than the precision of the signal really demands. This is why people look at log signals from cameras on conventional displays and complain that the shadows are noisy. That's true of the unprocessed signal, but it's a necessary side-effect of the techniques at play.
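The shadow amplification can be estimated from the slope of the hypothetical pure log curve `code = top * (log2(x) + stops) / stops`, whose derivative is `top / (stops * x * ln 2)`. The sketch assumes a constant, signal-independent noise level (the `read_noise` figure is made up) and ignores photon shot noise, which grows with signal in real sensors.

```python
import math

def log_slope(linear, bits, stops):
    """Code values per unit of linear light for the hypothetical pure log curve
    code = top * (log2(x) + stops) / stops, i.e. top / (stops * x * ln 2)."""
    top = 2 ** bits - 1
    return top / (stops * linear * math.log(2))

read_noise = 0.0005   # assumption: constant noise, as a fraction of full-scale linear light
for stops_down in (1, 4, 7):
    x = 2.0 ** -stops_down
    noise_codes = read_noise * log_slope(x, 10, 12)
    print(f"{stops_down} stops below white: noise spans ~{noise_codes:.2f} 10-bit code values")
```

The same linear noise occupies 64 times as many code values seven stops below white as it does one stop below, which is why the shadows of an ungraded log image look so noisy.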

Perhaps it's this sort of thinking that makes people reach for raw: simply increase the bit depth until there are so many digital code values available that the inefficiency of linear storage can be overlooked. Advances in electronics have made this increasingly feasible, but here in 2016 the associated storage demands mean that uncompressed raw, at least, still creates significant extra work.
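To put a rough number on those storage demands, the data rate of uncompressed raw is just photosites times bits times frame rate. The frame size, 12-bit depth and 24fps below are hypothetical example values, and real raw formats may pack, pad or compress their data differently.

```python
def raw_data_rate(width, height, bits_per_photosite, fps):
    """Bytes per second for uncompressed single-channel (Bayer) raw, ignoring headers and padding."""
    return width * height * bits_per_photosite * fps // 8

rate = raw_data_rate(4096, 2160, 12, 24)   # hypothetical 4K, 12-bit, 24fps example
print(f"{rate / 1e6:.1f} MB/s, {rate * 3600 / 1e12:.2f} TB per hour")
```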

Sony's FS5 supports S-Log encoding, which may look noisy when viewed on conventional displays

What may have changed, given the new prevalence of log cameras at the low end, is the idea that 8-bit is enough. Eight bits were never really enough for log anyway, because recovering a normal-contrast image from a log recording requires adding a lot of contrast, and adding that much contrast to an 8-bit image is risky from a quantisation noise (banding, contouring) point of view. But 8-bit may not be enough even for quite high-contrast encodings, such as the Rec. 709 monitoring standard that has traditionally been used as a starting point for encoding HD pictures. For HDR images, even 10-bit is often not considered sufficient.
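A minimal sketch of why adding contrast to 8-bit material invites banding: take a low-contrast gradient covering a quarter of the range (standing in, loosely, for a flat log image), store it at 8 or 10 bits, apply a hypothetical 4x contrast boost around mid-grey, and count how many distinct 8-bit display levels survive. The gradient range and gain are illustrative assumptions, not a real grading pipeline.

```python
def quantize(x, bits):
    """Round x in 0..1 to the nearest of 2**bits code values, then map back to 0..1."""
    top = 2 ** bits - 1
    return min(max(round(x * top), 0), top) / top

def display_levels_after_grade(source_bits, gain=4.0, samples=4096):
    """Count distinct 8-bit display levels left after stretching a low-contrast gradient."""
    levels = set()
    for i in range(samples):
        x = 0.375 + 0.25 * i / (samples - 1)      # gradient covering a quarter of the range
        stored = quantize(x, source_bits)         # what the camera records
        graded = 0.5 + gain * (stored - 0.5)      # hypothetical contrast boost around mid-grey
        levels.add(round(min(max(graded, 0.0), 1.0) * 255))
    return len(levels)

for bits in (8, 10):
    print(f"{bits}-bit source: {display_levels_after_grade(bits)} distinct display levels")
```

The 8-bit source ends up with only 64 display levels, four code values apart, across a gradient that now spans nearly the whole display range; those gaps are what show up as contouring.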

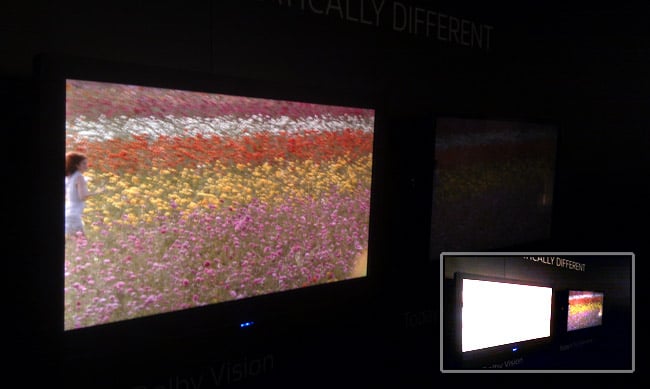

The Dolby Vision HDR standard (shown in the inset against a conventional display) requires greater bit depth

Quite apart from that, sensors have improved quite noticeably. It might not quite be the case that every camera has gained another stop of dynamic range since 2013, but it may be close, especially among more affordable devices. What that requires in terms of additional bits is not straightforward, as this article should have made clear. Even so, if in 2013 we were happy to advise that 8-bit was enough for conventional shooting, that advice may now be worth updating.
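One way to see why the answer isn't straightforward is to compare two idealised cases. In a linear encoding, each extra stop at the bottom of the range costs a whole extra bit to keep the same precision there; with a hypothetical pure log curve at a fixed bit depth, the extra stop instead thins out the code values available to every stop. Both functions below are illustrative simplifications, not models of any particular camera.

```python
import math

def codes_per_stop(bits, stops):
    """Average code values per stop for a hypothetical pure log curve spanning `stops`."""
    return (2 ** bits - 1) / stops

def linear_bits_needed(stops, codes_in_bottom_stop=1):
    """Bits a linear encoding needs so the darkest of `stops` still gets `codes_in_bottom_stop` values."""
    return stops + math.ceil(math.log2(codes_in_bottom_stop))

for stops in (12, 13):
    print(f"{stops} stops: pure log 10-bit gives {codes_per_stop(10, stops):.1f} codes/stop; "
          f"linear needs {linear_bits_needed(stops, 16)} bits for 16 codes in the bottom stop")
```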
