I always try to take existing trends and push them to their limits. It doesn’t always point to the way things ultimately turn out, but it does at least show up a few possibilities.

Because we work in the video business, I often apply this thought process to cameras. After all, cameras have been through some massive paradigm changes in the past couple of decades (like, you know, they don’t use film any more), and there’s no reason why this era of intensive change shouldn’t continue, or even (as Raymond Kurzweil would have us believe) accelerate.

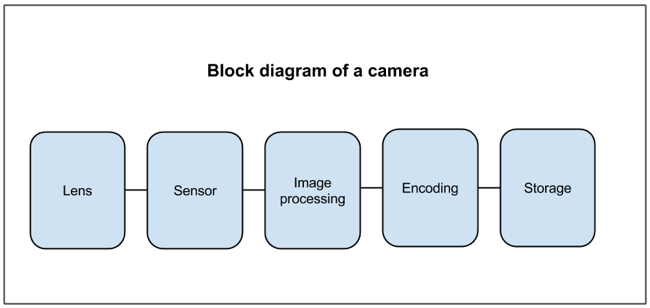

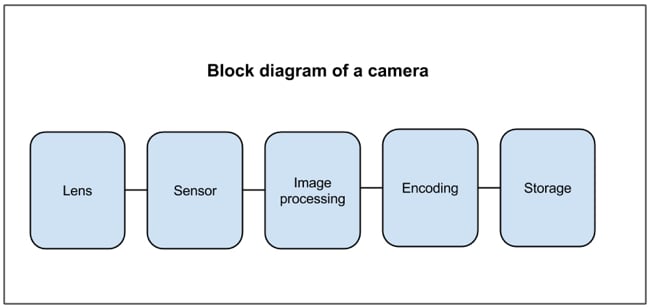

Cameras can be broken down into a pretty simple block diagram. Doing so always oversimplifies things, but it can be a useful starting point if you’re speculating about future cameras. At the risk of stating the obvious, here’s what a typical camcorder looks like.

You can always chop things up in different ways, but this is a realistic high-level view of what takes place in a digital camera, from front end to back end.

So, given this diagram, it’s immediately tempting to think you can build a modular camera. And some people do. In broadcast cameras, it’s quite common to find a separate camera head and back end. ARRI has a version of the Alexa where the front end is connected to the back end via digital fibre. Topologically, it’s as if the camera were in one piece, but physically, the lens and the sensor can be any distance away.

Apertus is building a completely modular camera. Each part of the block diagram (theirs is undoubtedly more complicated than the one above) can be made by a different manufacturer.

And of course, many cameras are modular anyway if they have interchangeable lenses. It’s also quite common these days to attach an external recorder to the HDMI or SDI output in order to capture an uncompressed image that can be superior to the internally recorded one.

Arguably, Blackmagic built its recorder and then put a lens and sensor on it later. This may not have been literally true, but the company released the HyperDeck Shuttle, a portable recorder, and then the Cinema Camera, which almost certainly contained a lot of the technology that had been tried out in the Shuttle.

But there’s one combination that I’ve been thinking about and I can’t decide whether it’s a good idea or not. It’s the idea that lenses should come with their own sensors and signal processing.

Now, in many ways, this sounds wasteful and inefficient. After all, sensors can work with a variety of lenses. That being the case, surely it’s better to have a single sensor to which you can attach multiple lenses?

There’s just one big reason why it might be worth breaking this paradigm, and that is that the sensor and the digital signal processing that follows it could be made to match the lens perfectly. There would also be no possibility of dirt or dust getting on the sensor.

Digital signal processing can be used to correct distortions in the lens and it works better when the lens is known to the camera (or, more accurately, the software) in great detail. This is much more likely to be the case when the software and sensor are completely matched to the lens.
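To make that a little more concrete, here is a minimal sketch of the kind of correction I have in mind. It assumes a simple two-coefficient radial distortion model, which is a common textbook approximation and not the method of any particular camera or lens maker. The `LensProfile` class and the `k1` and `k2` values are hypothetical, invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LensProfile:
    """Hypothetical per-lens calibration data (not a real lens metadata format)."""
    k1: float  # first radial distortion coefficient (assumed value)
    k2: float  # second radial distortion coefficient (assumed value)


def distort(x, y, lens):
    """Model what the lens does: map an ideal normalised point to its distorted position."""
    r2 = x * x + y * y
    scale = 1.0 + lens.k1 * r2 + lens.k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, lens, iterations=20):
    """Estimate the ideal point behind a distorted one by fixed-point iteration.

    This converges for mild distortion; a strongly distorting lens
    would need a more robust solver.
    """
    x, y = xd, yd  # start from the distorted point as a first guess
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + lens.k1 * r2 + lens.k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


if __name__ == "__main__":
    lens = LensProfile(k1=-0.1, k2=0.01)  # made-up, mildly distorting lens
    xd, yd = distort(0.5, 0.3, lens)
    print("distorted:", (xd, yd))
    print("corrected:", undistort(xd, yd, lens))
```

The point of the sketch is that the correction is only as good as the coefficients it is given. Software that knows the exact lens can use measured values, whereas a generic camera has to guess or do without.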

So, is this a good idea? Possibly not. After all, it would make lenses more expensive, and, anyway, wouldn’t this essentially make lenses functionally equivalent to a camera, just without recording facilities?

You see, this is the essence of the problem. Once you start pulling a complete camera apart, it’s very hard to define what you have left. While packaged lenses, sensors and DSP do make sense in that they can optimise the performance of a single lens, perhaps the best answer is for the lens to give enough information to a more conventional camera to be able to apply the optimal signal processing to correct the optics and the colour.

I also think that by splitting the camera into parts, we’re missing the point slightly: it’s quite possible that what makes a camera a good one is the way the camera maker puts all the parts of the block diagram together.

