Sound strange? Read on: deconstruct a typical video editor and you’ll catch a glimpse of the future.

Some people reading this were born after the arrival of computer-based video editing. Unfortunately I’m not one of them. When I was born, digital audio was only available as a stack of punched cards (I’m not joking - early digital synthesis experiments were rendered on computers that took a week to output a simple, raspy tune, and in order to do this, they had to ingest a six-inch-high pile of cardboard cards with holes in them).

But I think it’s often easier to understand current (and future) technology if you’ve been through a few generations of it yourself.

When you think of an NLE, I’m pretty certain that you think of a computer, which is, of course, not unreasonable. Until about a decade ago, if you bought an Avid, that meant that you were buying a computer.

For some time now, when you buy an NLE, you’re actually downloading some software, which still demands a reasonably powerful computer, but you don’t necessarily think of the NLE and the computer as the same thing. You may even run the same software on two or more machines: a desktop and a laptop, for example.

Exploding NLEs

So the strong link between an NLE and a physical computer is already breaking down. But, in my view, you haven’t seen anything yet.

And that’s because NLEs are exploding: not literally, but conceptually. Let me explain what on Earth that means.

You may not be used to thinking about it like this, but NLEs are, essentially, databases. Each clip has certain properties and relationships to other clips, and that’s what the database keeps track of.

One of those properties might be the position of the clip on a timeline. Others might be the clip’s in and out points. Your NLE’s timeline view is just a database “report”. In other words, it’s a readout of selected and filtered contents of the database, formatted in a specific way.
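
To make the "timeline as a database report" idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table layout, column names and clip data are illustrative assumptions, not the schema of any real NLE; the point is only that a timeline view can be produced by selecting, filtering and ordering rows.

```python
import sqlite3


def build_project():
    """Create an in-memory 'project database' holding a few clips.

    The schema is hypothetical: each clip has a source file, in and out
    points (in frames), a track number and a position on the timeline.
    """
    db = sqlite3.connect(":memory:")
    db.execute(
        """CREATE TABLE clips (
               name TEXT, source TEXT,
               in_frame INTEGER, out_frame INTEGER,
               track INTEGER, timeline_start INTEGER)"""
    )
    db.executemany(
        "INSERT INTO clips VALUES (?, ?, ?, ?, ?, ?)",
        [
            # Deliberately inserted out of timeline order.
            ("closing", "A007.mov", 0, 200, 1, 240),
            ("cutaway", "B014.mov", 48, 96, 2, 100),
            ("interview", "A001.mov", 120, 360, 1, 0),
        ],
    )
    return db


def timeline_report(db, track):
    """Return (name, start, end) for one track, ordered by position.

    This is the 'report': a filtered, sorted readout of the clip table.
    """
    rows = db.execute(
        "SELECT name, timeline_start, "
        "       timeline_start + (out_frame - in_frame) AS timeline_end "
        "FROM clips WHERE track = ? ORDER BY timeline_start",
        (track,),
    )
    return rows.fetchall()


if __name__ == "__main__":
    project = build_project()
    for name, start, end in timeline_report(project, track=1):
        print(f"{start:>5} - {end:<5} {name}")
```

Nothing in the query cares where the media files referenced in the `source` column actually live, which is the property the rest of this article leans on.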

The reason I’m going to such lengths to explain all this is that NLEs - and indeed entire post production workflows - are about to make a big leap into space: not necessarily outer space, but the space, indoors and outdoors, that surrounds us.

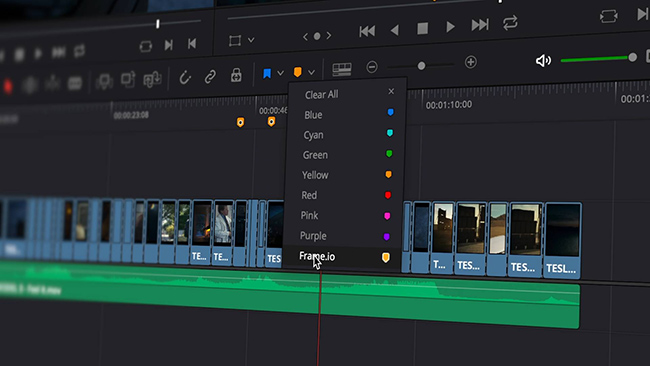

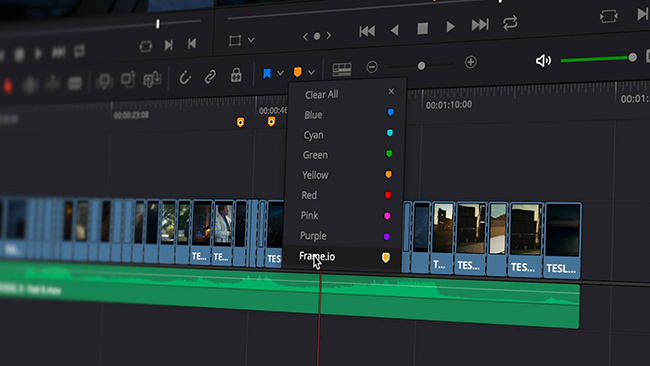

Integrations of services like Frame.io inside NLEs bring seamless working through the cloud one step closer.

Global data

Keep in mind the idea that NLEs are databases. Strictly, it doesn’t matter where that data is. Until now, it’s probably been in the form of video files on a local hard drive, or, at a push, on a network drive in your building. A slightly more advanced way to work is collaboratively, with multiple users on a project, each working on a different aspect of it, but with access - as if it were on their own computer - to the same files.

A natural extension of this is for these multiple users to be in different places: maybe in the same town, or even across the world. Essentially the topology would be the same.

These “work anywhere” arrangements usually rely not on hyper-fast broadband, but on the automatic production of small proxy files, which are tiny in comparison to the original media, but, thanks to clever compression, still look good enough to edit with when they’re a hundredth of the size. At the end of the edit, these proxy files are replaced with the full resolution ones automatically in a process called autoconforming.
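
As a rough illustration of what autoconforming involves, the sketch below swaps each proxy reference on a timeline for its full resolution original while leaving the edit decisions (the in and out points) untouched. The `Clip` class, the file names and the proxy-to-original mapping are all hypothetical, and the sketch assumes proxy and original share the same frame numbering; real systems typically match on metadata such as timecode and reel name.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Clip:
    """One timeline entry: which media file, and which frames of it."""
    media: str
    in_frame: int
    out_frame: int


def autoconform(timeline, proxy_to_original):
    """Return a new timeline that points at full resolution media.

    Only the media reference changes; in/out points are carried over,
    which is valid only under the assumption that proxy and original
    have identical frame numbering.
    """
    conformed = []
    for clip in timeline:
        if clip.media not in proxy_to_original:
            # Fail loudly rather than silently keep a proxy in the master.
            raise KeyError(f"no full resolution source for {clip.media}")
        conformed.append(replace(clip, media=proxy_to_original[clip.media]))
    return conformed


if __name__ == "__main__":
    edit = [
        Clip("proxy/A001.mp4", 120, 360),
        Clip("proxy/A007.mp4", 0, 200),
    ]
    sources = {
        "proxy/A001.mp4": "raw/A001.mov",
        "proxy/A007.mp4": "raw/A007.mov",
    }
    for clip in autoconform(edit, sources):
        print(clip)
```

Because the edit itself is just references plus frame numbers, it stays small enough to move over an ordinary connection even when the original media cannot.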

This is a very effective way to work, and there’s nothing wrong with it. We can assume that it’s going to stay around for a very long time, because that’s how long it might take for the Earth to be blanketed in 10 Gigabit wireless broadband.

But well before then, parts of the planet WILL be covered in very serviceable, fast, low latency 5G.

It’s early days yet for this technology. A lot of it depends on virtually everything being 5G-capable. But the important thing to understand about 5G is that it is a Software Defined Network, which is more of a concept than a thing in itself, but a very important one nevertheless. It means that 5G isn’t just about cellular. It will flexibly incorporate WiFi and Satellite connectivity. It will include machine-to-machine and vehicle-to-vehicle. Essentially it’s a way to orchestrate and conduct the plethora of connectivity platforms that will surround us very soon.

And it means that the NLE can start in the camera. Literally, your shots will land on your timeline at exactly the same time as they land on your camera’s storage. That’s if cameras need storage any more!

And this is why industry gurus like Michael Cioni are moving to cloud platforms like Frame.io.

In the future, your timeline will look the same, but the clips populating it could be anywhere in the world.
