Advances in compression, rendering, and head-worn displays are combining to finally bring true 3D, multi-viewpoint or volumetric video to the mainstream. The revolution in spatial computing is in its early days, but it is on a path to supersede the flat (i.e. 2D) boxed video format that has dominated for more than a century.

While tech titans Meta and Apple are pushing photoreal spatial computing software to funnel content and users toward Quest 3 and Vision Pro, there’s a growing ecosystem of developers tackling all aspects of the pipeline.

Heard about Gracia? Last autumn, the Delaware-based startup launched its dynamic volumetric VR technology, which could be a game changer. Gracia allows users to dive into environments with moving volumetric scenes. It has released a few standout clips on its app (available on the Horizon Store for Quest 3S and Quest 3, and on Steam for PC users), including a ballet dancer, a chef dicing salmon, and a sparring boxer that you can ‘walk’ around in six degrees of freedom (6DoF). The scenes can also be rotated, zoomed in and out, and slowed down.

Streaming these clips using a gaming PC and compatible VR headset requires serious internet bandwidth, upwards of 300Mbps, but the company says it is working on making volumetric capture and streaming easier and more accessible.

Notably, Gracia uses an AI technique called Gaussian splatting to capture and render real-world objects, people or entire scenes from any angle. As outlined in this tutorial, Gaussian splatting, like Neural Radiance Fields (NeRFs) and photogrammetry, generates a 3D representation from a video or a set of photos, and can synthesise views from any perspective.

In a preview of the app’s launch, UploadVR noted that Gracia claims its specific Gaussian implementation is faster than “any other technology on the market”, which is how it can run on Quest 3 standalone without a PC, “albeit at a noticeably lower resolution”.

The current focus of the platform is stills, which creators can generate using freely available Gaussian splat smartphone apps such as Luma and then upload to Gracia. The next frontier is volumetric short video clips, which Gracia will handle using in-house tech.

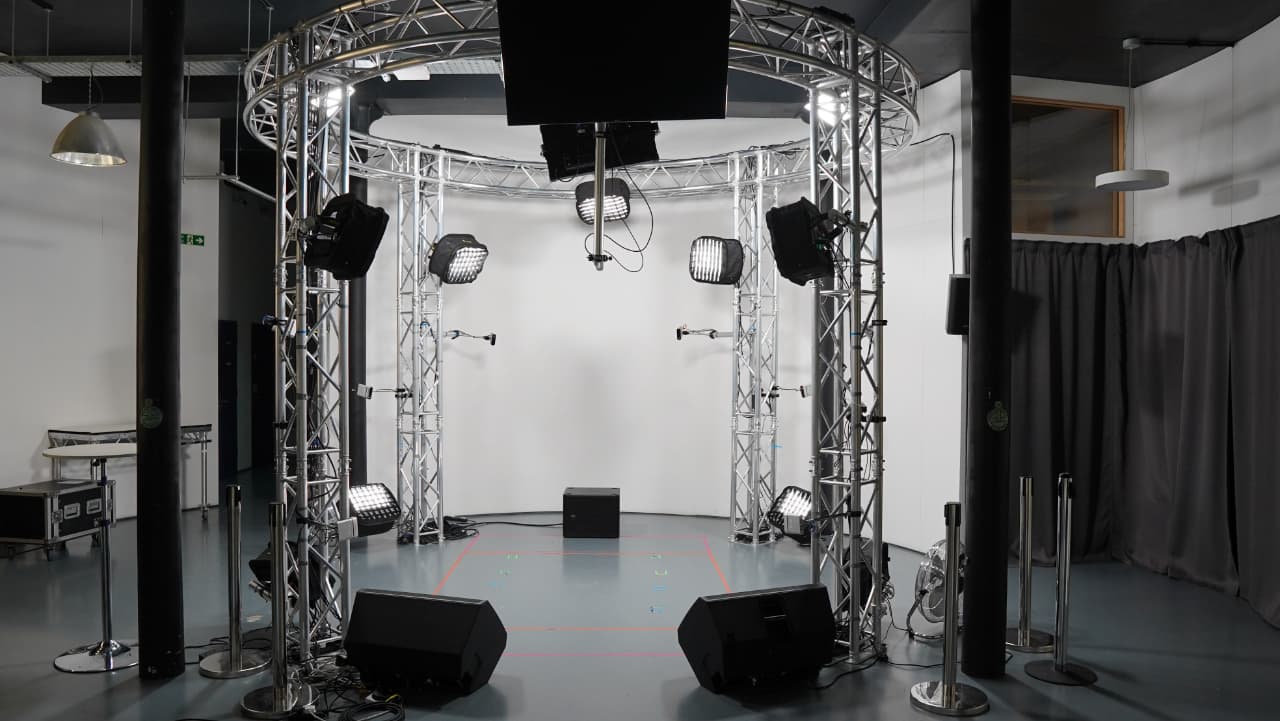

UploadVR notes that capturing volumetric video with Gracia will need at least 20 GoPro cameras and a dedicated studio. Training a video takes around two minutes per frame, “so this is currently a very expensive and time-consuming process,” it said.
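
To put the two-minutes-per-frame figure in perspective, here is a rough back-of-envelope sketch. The clip length (5 seconds) and frame rate (30 frames per second) are illustrative assumptions rather than figures from Gracia or UploadVR, and the function name is hypothetical.

```python
def training_hours(clip_seconds: float, fps: float, minutes_per_frame: float = 2.0) -> float:
    """Estimate total training time in hours for a volumetric clip.

    minutes_per_frame defaults to the roughly two minutes quoted by UploadVR.
    """
    frames = clip_seconds * fps
    return frames * minutes_per_frame / 60.0


# Assumed example: a 5-second clip at 30 fps is 150 frames.
hours = training_hours(clip_seconds=5, fps=30)
print(f"Estimated training time: {hours:.1f} hours")
```

Under those assumptions, a clip of just five seconds would take about five hours to train, which helps explain why the demos are so short.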

Meta's Horizon Hyperscape app also offers photorealistic volumetric scenes, which it describes as “digital replicas”. These are scanned using mobile phones and processed in the cloud, and UploadVR believes the app relies on Gaussian splatting to scan and render them.

All these demo videos are short, just a few seconds long, because the stream is data heavy, often comprising multiple gigabytes.

The importance of compression

Compressing the stream is where London-headquartered compression specialist V-Nova looks to score. Having acquired the requisite XR technology from Belgian developer Parallaxter in late 2023, V-Nova released a toolset and demo content in late 2024 targeting what it believes is a latent multi-billion dollar industry for streamed 6DoF entertainment.

The first couple of titles showcasing what’s possible are a pop promo featuring Albanian singer and X Factor winner Arilena Ara, and a short film called Sharkarma. Both were produced by its own content division, V-Nova Studios, and are available via Steam.

Its target market is Hollywood, and it’s already applying the technology to archive content such as How to Train Your Dragon, owned by DreamWorks.

The company claims its PresenZ tech is a “revolutionary compressed volumetric media format that transcends previous XR limitations offering viewers unprecedented freedom of movement within pre-rendered virtual environments.”

As with Meta’s and Gracia’s solutions, the assets need to be pre-rendered. V-Nova CEO Guido Meardi says one frame of a typical Hollywood VFX movie would take three to seven hours to render, but once that’s done (and you’d only need to do it once) PresenZ makes it possible to compress and stream at 25Mbps using the existing MPEG-5 LCEVC compression scheme (which is based on V-Nova algorithms).
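
The gap between the two bitrates quoted in this article is easier to see as data volume. The sketch below uses only the 25Mbps figure for PresenZ and the 300Mbps figure quoted earlier for Gracia's PC streaming. It assumes a constant bitrate and decimal units (8 megabits per megabyte), the helper name is hypothetical, and the two products are not a like-for-like comparison.

```python
def megabytes_per_minute(mbps: float) -> float:
    """Convert a constant bitrate in megabits per second to megabytes per minute."""
    seconds_per_minute = 60
    bits_per_byte = 8
    return mbps * seconds_per_minute / bits_per_byte


# Bitrates as quoted in the article; Gracia's figure is "upwards of" 300Mbps.
quoted_rates = [("PresenZ", 25), ("Gracia PC streaming", 300)]

for label, mbps in quoted_rates:
    mb = megabytes_per_minute(mbps)
    print(f"{label}: {mbps} Mbps is about {mb:.1f} MB per minute")
```

On these assumptions, a minute of content works out to roughly 187.5MB at 25Mbps against at least 2.25GB at 300Mbps, a twelvefold difference.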

What about live volumetric video, though? Meardi is dismissive of this given the current cost of the processing power required: “You’d need something of the scale of a NASA moon landing.”

Free-viewpoint volumetric video

But another UK startup is on the case. Condense Studio, which has close links to Bristol University, also uses NeRFs as the basis for proprietary compression algorithms it claims are among the most optimised in the world.

Its Volumetric Fusion stitches video from a 4K camera array and streams it in real time for VR presentation. The goal is to make high-quality volumetric video technology more accessible and scalable, with the potential to transform the way immersive content is delivered to audiences.

“One of the benefits of neural representations is their compression efficiency,” explains Chief Scientific Officer and Co-Founder, Ollie Feroze. “We plan to leverage this to transfer the computationally intensive fusion process (the dynamic creation of 3D meshes and textures) from local hardware to the cloud.”

He says this involves designing high-bandwidth protocols for rig-to-cloud data streaming and creating a fault-tolerant, scalable pipeline. “By leveraging cloud infrastructure, we aim to enhance the scalability, reliability, and cost-efficiency of volumetric video processing.”

Shortly after its launch in 2020, the company caught the eye of BT Sport, then a world leader in live video innovation. The two worked together to explore ways of delivering live AR/VR sports experiences, such as boxing matches, to viewers at home. This was possible in part because the Condense technology is not only portable (no dedicated studio required) but also operates with fewer cameras than usual.

While there were problems with the boxing idea, including practical ones such as proper placement of cameras around the ring, the company’s target live events market continues to build.

Since 2023, Condense has produced several live and virtual gigs with artists such as Gardna, Charlotte Plank and Sam Tompkins, streamed into BBC Radio 1’s New Music Portal. The BBC’s investment arm, BBC Ventures, has even invested half a million pounds in the Bristol company’s bet on immersive live events.

“It’s difficult with a nascent tech since you're having to educate the market as you build,” says cofounder Nick Fellingham. “Getting solid content partnerships is the first step to scale but it is an inevitability that this kind of video will become more and more prevalent. Volumetric is better than conventional video. Users feel more involved. It gives you a sense of presence. It doesn't matter if it's inside a game, or inside a headset, volumetric makes you feel closer.”

tl;dr

- Advancements in volumetric video and compression technologies are paving the way for more immersive 3D content, potentially replacing traditional 2D video formats.

- Companies like Gracia, V-Nova, and Condense Studio are leading this revolution by developing innovative solutions for capturing, rendering, and streaming volumetric videos, making them more accessible and scalable.

