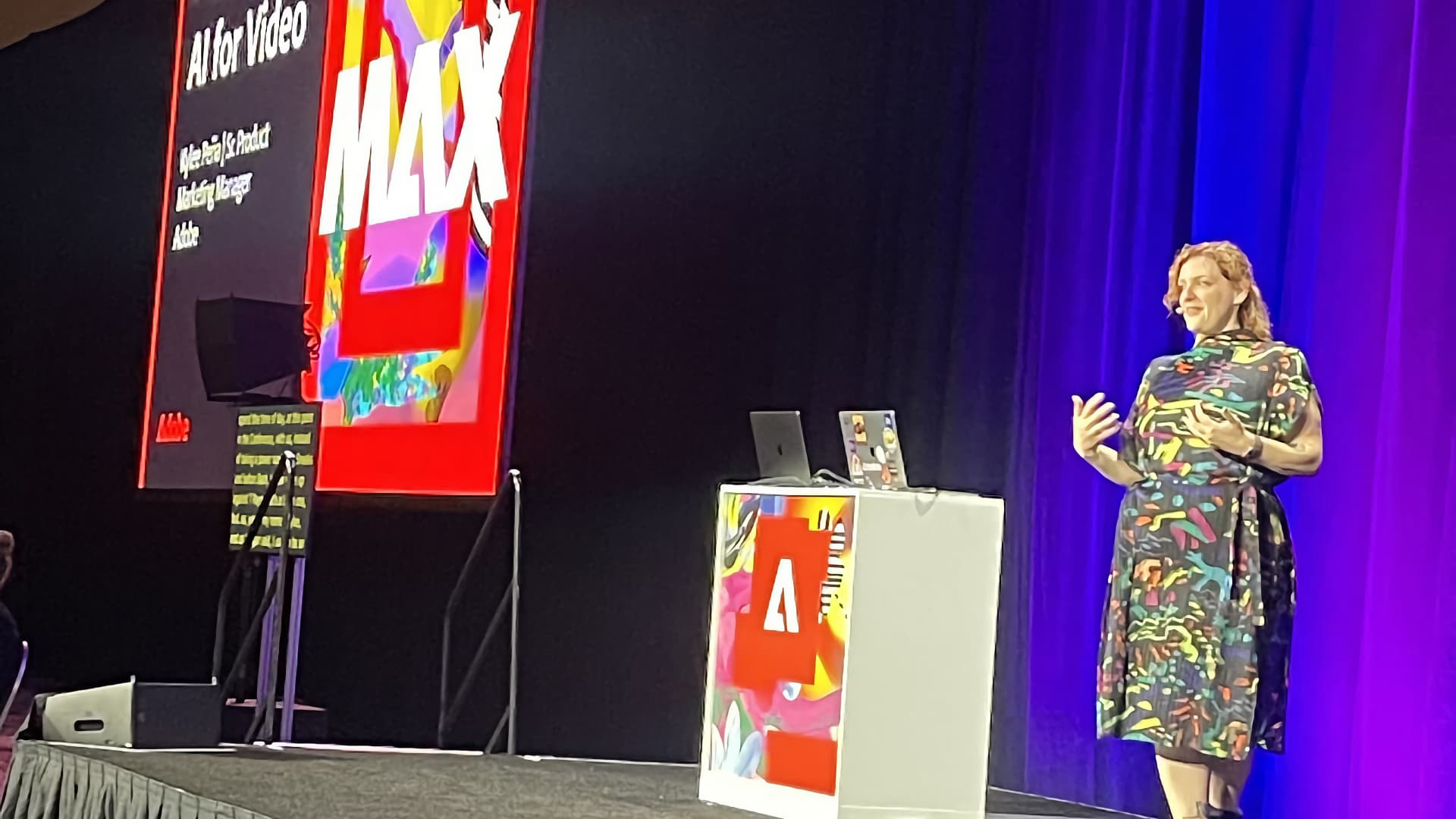

At Adobe MAX, we had a one-on-one discussion about all things Generative AI with Kylee Peña, Product Marketing Manager for Pro Video at Adobe.

One of the biggest announcements on day one of Adobe MAX 2024 was that the Generative Extend tool is now available for testing in the Premiere Pro beta. Powered by Adobe’s Firefly video model, the tool lets you extend video and audio clips right in your Premiere Pro timeline.

Following the announcement, we caught up with Kylee Peña—Product Marketing Manager for Pro Video at Adobe—on the show floor to gain insight into this, as well as Adobe’s broader approach to AI in the creative workflow.

1. What is Generative Extend?

Adobe’s new Generative Extend feature allows editors to add frames to their video clips (up to 2 seconds) and extend audio clips (up to 10 seconds). “It lets you seamlessly add a few more frames for timing, for transitions, even for mood and tone,” Peña explains. “Extending sound effects that kind of get cut off as well. There’s so many little use cases that just help you with the timing of your story and to let you edit the way you want. It’s just another tool in the toolbar that you can pull out.

“In the past, you might have said, ‘I need a few more frames, so I’m gonna use a frame hold’. Sometimes that still might work for you, but Generative Extend gives you those other options.

“It is powered by the Firefly video model, which is designed to be commercially safe as well, which is really important.”

2. Adobe maintains commitment to creatives

You can’t talk AI without addressing the (prompt-generated) elephant in the room: is AI replacing human creativity? Peña was clear that Adobe’s focus is on empowering creatives rather than rendering them obsolete.

“Adobe is all about creatives,” she said. “A lot of us that work for Adobe are former or still creative professionals. I was an editor for a very long time, and I want to just give people more tools to edit and create the way that they want to.

“It’s not about replacing anything. It’s not about rendering someone obsolete. It’s about choice and flexibility with your storytelling so that you can move on. Our AI tools have been a part of Premiere Pro for 10 years—Enhanced Speech Editing, Auto Color. These are all things that you can also dig into and adjust and finesse, but they’re also things that just help you get to a better starting point in a lot of situations as well.

“It’s about reducing that time spent on things that you really don’t want to do. You know, I didn’t become an editor because I wanted to be a media manager or I want to be troubleshooting. I wanted to tell stories. So that’s what we’re trying to do, is enable people with AI-powered tools and other tools to do just that.”

3. Bridging the gap between pros and newcomers

The line between professional and non-professional video creators is increasingly blurred, and Adobe acknowledges this shift by designing features that cater to a broad spectrum of users.

“We design our features in a way that makes it easy to understand, accessible to people that are coming up and learning,” Peña notes. “At the same time, we’re designing those same tools to be faster to access or easier to access for professionals.”

She highlights tools such as the Properties Panel, and audio updates with the Audio Category Tagger, which provide contextual assistance. “You click on a clip, and contextually, it gives you the things that you’re most likely to want to use for that clip,” she explains. “That’s good for pro users because that’s a few fewer clicks. But for new users, it puts something front and center and gives an indication of where they should maybe be thinking about making selections.”

By making complex processes more intuitive, Adobe aims to support users at all skill levels. “It’s about reducing that time spent on things that you really don’t want to do,” Peña says. “We want to enable people with AI-powered tools and other tools to do just that.”

4. Commercially safe AI and Content Authenticity

With AI’s influence on intellectual property raising concerns, Adobe is taking deliberate steps to ensure its AI models are commercially safe. The Firefly video model, for instance, is trained solely on licensed and public domain content to prevent any infringement issues. “We don’t want people to have any worry that what they’re generating might cross into someone else’s IP,” Peña stresses.

“We’re not going to mine the internet. We’re going to compensate people. We’re going to do right by creatives because we are creatives, and that’s what we deserve.”

In addition to safe training practices, Adobe is promoting transparency through initiatives like Content Credentials, which embed metadata into content to indicate how it was created, including the use of AI. “You saw in the keynote this morning, Content Credentials and Do Not Train credentials,” Peña notes. “Those are all part of what we are helping develop with the Content Authenticity Initiative, being able to offer provenance and transparency around how something was created and if AI was used.

“We want to do right by creatives by giving them the choice.”

5. Beta testing and future features

While the Generative Extend feature currently has limitations—only supporting up to 1080p resolution, 16:9 aspect ratio, 30 frames per second, and stereo or mono audio—the company is eager to refine and expand its capabilities based on user feedback.
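To make those beta limits concrete, here is a minimal Python sketch that checks a clip against the figures reported in this article. It is purely illustrative and is not an Adobe API: the `Clip` class and both helper functions are hypothetical names, and treating resolution and frame rate as upper bounds (rather than exact requirements) is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass

# Beta limits as reported in this article. Reading resolution and frame rate
# as upper bounds is an assumption of this sketch, not an Adobe specification.
MAX_HEIGHT = 1080
ASPECT_RATIO = (16, 9)
MAX_FPS = 30
ALLOWED_AUDIO_CHANNELS = {1, 2}  # mono or stereo
MAX_VIDEO_EXTEND_SECONDS = 2.0   # video can be extended by up to 2 seconds


@dataclass
class Clip:
    """Hypothetical description of a timeline clip (not a Premiere Pro type)."""
    width: int
    height: int
    fps: float
    audio_channels: int


def limit_violations(clip: Clip) -> list[str]:
    """Return the reported beta limits that this clip falls outside of."""
    problems = []
    if clip.height > MAX_HEIGHT:
        problems.append("resolution above 1080p")
    # Cross-multiply so the 16:9 check stays in exact integer arithmetic.
    if clip.width * ASPECT_RATIO[1] != clip.height * ASPECT_RATIO[0]:
        problems.append("aspect ratio is not 16:9")
    if clip.fps > MAX_FPS:
        problems.append("frame rate above 30 fps")
    if clip.audio_channels not in ALLOWED_AUDIO_CHANNELS:
        problems.append("audio is not mono or stereo")
    return problems


def max_extra_video_frames(fps: float) -> int:
    """Whole frames that fit inside the 2-second video extension cap."""
    return int(MAX_VIDEO_EXTEND_SECONDS * fps)
```

Under these assumptions, a 1920x1080 stereo clip at 30 fps passes every check and could gain at most 60 generated frames, while a 4K, 60 fps clip with 5.1 audio would fail on resolution, frame rate, and audio layout.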

“We have to start somewhere, but our goal is to remove those limitations as we go on,” Peña explains. “The feedback that we get is really critical to helping us understand how we can remove those so that when this ships into Premiere Pro, it’s part of the full pro workflow, and it’s seamless and it works.”

She also mentions that despite the current constraints, the tool performs well across various types of footage. “We’ve tested it with so much, and it works really well on a surprising number of things—landscapes, animals, even people. Enough people told us, ‘This is great. I want to see it. I want to use it right now.’ So we’re ready to actively develop with that feedback.”

Looking ahead, Peña offers a sneak peek into upcoming features. “You saw on the main stage yesterday, my colleague gave an early sneak peek at how we’re updating masking and tracking with object selection. It’s really, really cool. I’ve been using it a bit, and I can put together things so fast. It’s a lot more intuitive. We’re hoping to get that going in the coming months.”

tl;dr

- Adobe introduced the Generative Extend tool for Premiere Pro (beta) at Adobe MAX, allowing editors to extend video clips by up to 2 seconds and audio clips by up to 10 seconds using its AI-powered Firefly video model.

- Adobe focuses on empowering creatives with AI tools, aiming to reduce time spent on tasks and provide flexibility in storytelling rather than replacing human creativity.

- Adobe designs features to cater to both professional and non-professional users, making complex processes more intuitive and accessible to all skill levels.

- Adobe ensures the commercial safety of its AI models, such as the Firefly video model, by training them solely on licensed and public domain content and promoting transparency through initiatives like Content Credentials.
