No new hardware, but the promise of transformative technology across the line-up as Apple tries to rebadge AI as Apple Intelligence.

There's a lot to talk about following last night's WWDC 2024 keynote, so we've split our coverage in two. To find out all about the new iOS 18, macOS Sequoia and more, head to All the new OS upgrades announced at WWDC 2024.

A year after Apple launched its vision of the future with Apple Vision Pro, we’re back in Cupertino in a changed world: NVIDIA stands to overtake Apple’s market value on the strength of its AI chips, and Microsoft has returned to the top spot due in no small part to its OpenAI partnership.

WWDC24 was trailed as being all about Apple making its AI play, running to catch up with the competition. Almost everything was leaked ahead of time, so it was a testament to the quality of the presentation that it remained engaging over a nearly two-hour runtime with zero prospect of a shiny ‘one more thing’ device.

Apple Intelligence

The first Apple chip with a Neural Engine was the A11 Bionic back in 2017, and it has become an ever more important component of Apple Silicon since then. So while there’s an element of Apple being slow to the AI chatbot game, it also has deep institutional and hardware expertise in AI.

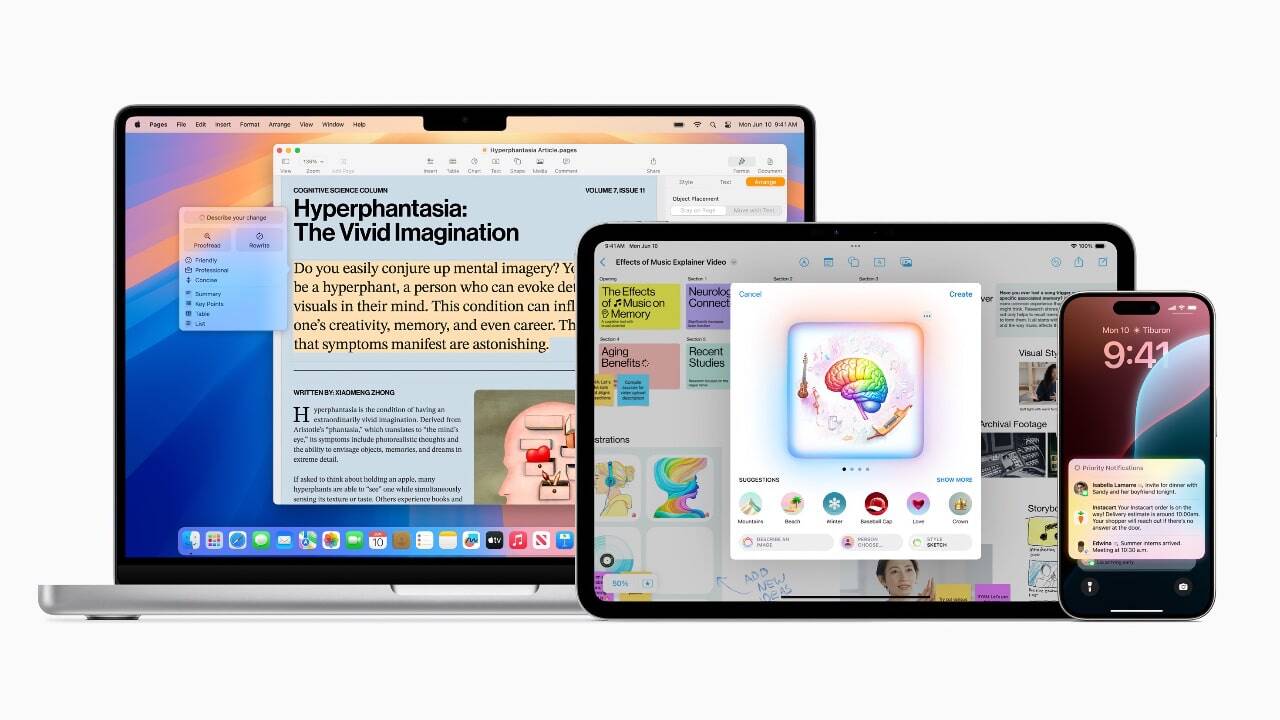

‘Apple goes beyond artificial intelligence with personal intelligence’ is the marketing line. Until now, most AI has relied on you typing your questions into a browser window. Apple Intelligence is about taking all the knowledge already on your device and using that vast array of information to serve you. Apple uses large language and diffusion models, with an on-device semantic index to pull in relevant personal information as needed. Processing on device means Apple can be aware of personal data without collecting it.

Apple Intelligence requires M-series Apple Silicon or the A17 Pro, the chip currently exclusive to iPhone 15 Pro and Pro Max, whose Neural Engine Apple says is up to twice as fast as the previous generation’s. If needed, it can reach out to Apple’s own servers through Private Cloud Compute. These servers run on Apple Silicon and use the same Swift programming language as iOS. Apple Intelligence will refuse to connect to any server whose security has not been verified.

Apple Intelligence in action

In the Notes and Phone apps, users can now record, transcribe, and summarize audio

Using Apple Intelligence, the new Siri will be more natural, more relevant and more personal. A demo showed Siri correctly interpreting a weather request in which the speaker revised the location mid-sentence. There was no awkward pause or stumble from Siri. Moreover, the follow-up question was answered with contextual knowledge of the one before it. You’ll also be able to type to Siri, double-tapping at the bottom of the screen to enter a query.

Siri is also aware of what’s on screen, so you can ask it to add an address from a message in front of you to Contacts. Building on App Intents, the framework behind Apple’s Shortcuts automation feature, Siri can take content from one app to another. You can ask Siri to find a picture of your driving license and enter its details into a form.

One of the most interesting demos showed Siri answering a question about whether running late would stop the user making their daughter’s evening play. Siri understood where you are, where your daughter is, the distance and the timing of the play. Another example asked when Mom was arriving: Siri automatically found the flight number in messages, looked it up and checked for any updates. And for lunch ideas, there’s no need to track back through emails and messages for past conversations; Siri knew what had been discussed and immediately retrieved it. Forgot to put an event in your calendar? No problem: Siri could reconstruct it from messages.

Of course, pre-recorded demos are one thing, reality something else.

Other Apple Intelligence experiences included Rewrite, a new feature offering grammar checking plus the option to change the tone of an email to be more professional, friendly or concise; Image Playground, a distinctly Apple spin on the AI craze for generating images from text prompts; and Clean Up in Photos, which does what Google has been doing for years: removing unwanted elements from photos.

All of these features run on device but can call on Apple’s own servers. However, there is a world of information beyond your device, and Siri comes with the option to call on ChatGPT, powered by GPT-4o. If you accept the prompt, your message or photo is sent to ChatGPT for its systems to offer up a response. If you want a bedtime story for your children, there’s no need to open a window or app: you just need to ask Siri.

In future, Apple plans to add other AI models, such as Google’s Gemini, but for now we wait until the summer for its features to begin appearing, initially in American English only.

Siri can now take hundreds of new actions in and across apps, including finding book recommendations sent by a friend in Messages and Mail

Conclusion: Is Apple now really Intelligent?

Apple’s AI play wasn’t distinguished by any single stellar feature so much as a cohesive vision of transforming its devices and services via on-device processing.

It has, so far, been blessed by overambitious competitors such as Humane’s overheating Ai Pin and Rabbit R1’s ‘barely reviewable’ first iteration, while more established companies have stumbled. Google invented much of the technology OpenAI built on but was slow to exploit it, and Microsoft’s line-up of Qualcomm Snapdragon X Elite ‘MacBook killers’ headlined a ‘Recall’ feature that was almost immediately pulled due to poorly thought-out security.

Apple has put user privacy and security at the heart of its messaging for decades; it’s a foundational building block of its vertically integrated ecosystem. This trust opens the door to Apple using on-device processing to scan all your messages, contacts, photos and more for secure, personally tuned AI assistance that transforms how your Apple device serves you.

It’s a great opportunity for Apple, and one OpenAI certainly didn’t hesitate to partner on, but it is not without risk: a future security breach, or Siri simply misfiring as it has repeatedly done for over a decade now. It’s also a slow burn, with developer betas (available now) and public betas (July) lacking key features such as Mail categorisation, and Apple Intelligence itself not due to begin appearing until later in the year.
