The fact that Nvidia and Intel are developing their highest-grade chips to drive autonomous cars will boost the seemingly inevitable shift of vehicles from transport cages into multimedia zones. What Nvidia's tiny supercomputer can do beyond driving, however, is perhaps more important.

After trailing the chips last autumn and announcing plans to pop them into a self-driving prototype called BB8 (after Star Wars’ sentient robot), Nvidia has teamed with German firm Bosch to build a car with AI smarts.

Nvidia founder and CEO Jen-Hsun Huang said Bosch would build automotive-grade systems for the mass production of autonomous cars, which is expected to begin in five years' time.

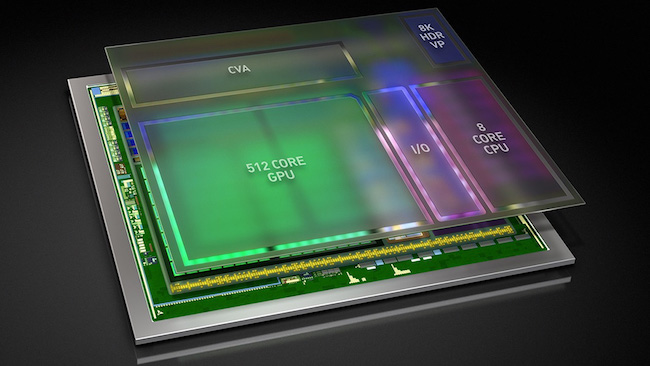

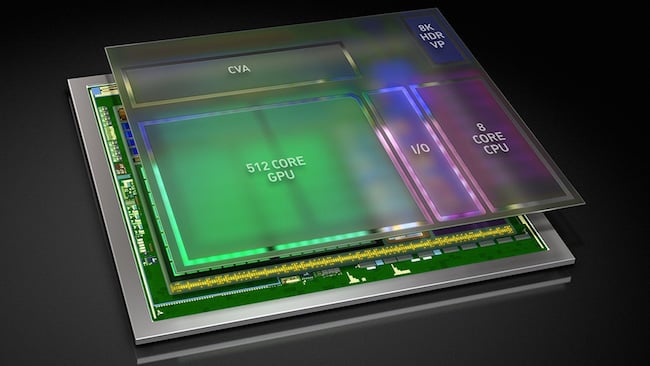

The chip powering this is called Xavier (named after the X-Men character with mind-bending powers). It packs seven billion transistors and is capable of 20 trillion operations per second while drawing just 20 watts of power.

“This is the greatest [System on a Chip] endeavour I have ever known and we have been building chips for a very long time,” Huang said, announcing the development last year.

Now, as Marvin, the robot from Douglas Adams' The Hitchhiker's Guide to the Galaxy, might have said: "Here I am, brain the size of a planet and you ask me to drive to Sainsbury's."

So what else can Xavier do? Well, it is rather good at computer vision. It has to be, in order to recognise objects, send data back to the cloud and make instantaneous decisions about traffic or road conditions.

Xavier contains, for example, a computer vision accelerator which itself contains a pair of 8K resolution video processors. They are HDR, of course.

Aside from self-driving cars, computer vision or smart image analysis is the basis of gesture-based user interfaces, augmented reality and facial analysis.

The Computer Vision program at rival chip maker Qualcomm is focused on developing technologies that enrich the user experience on mobile devices. Its efforts target areas such as Sensor Fusion (combining readings from several sensors, such as the camera, gyroscope and accelerometer), Augmented Reality and Computational Photography.

As part of the effort, Qualcomm says new algorithms are being developed in areas such as advanced image processing and robust tracking techniques aimed at “real-time robust and optimal implementation of visual effects on mobile devices”.

Computer vision can, for example, enable AR experiences in which an object's geometry and appearance are unknown in advance. Qualcomm has developed a SLAM (simultaneous localisation and mapping) technology for modelling an unknown scene in 3D and using that model to track the pose of a mobile camera in real time. In turn, this can be used to create 3D reconstructions of room-sized environments.

Website Next Big Future runs the numbers: 50 Xavier chips would produce a petaOP (a quadrillion deep learning operations per second) for 1 kilowatt. A conventional petaflop supercomputer costs $2–4 million and uses 100–500 kilowatts of power. In 2008, the first petaflop supercomputer cost about $100 million ([source](http://www.nextbigfuture.com/2016/11/nvidia-xavier-chip-20-trillion.html)).
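The Next Big Future arithmetic is easy to check. This short Python sketch multiplies Xavier's quoted per-chip figures (20 trillion operations per second at 20 watts) out to 50 chips, then compares operations per watt with a conventional petaflop machine drawing 100 to 500 kilowatts. The function names are purely illustrative, and the comparison is loose rather than like-for-like: deep learning operations are usually lower precision than the double-precision floating-point work supercomputers are rated on.

```python
# Illustrative back-of-envelope check of the Next Big Future figures.
# Per-chip numbers are the Xavier figures quoted in this article.
XAVIER_OPS_PER_SEC = 20e12  # 20 trillion deep learning operations per second
XAVIER_WATTS = 20.0         # power draw per chip, in watts


def cluster(chips: int) -> tuple[float, float]:
    """Return (total operations per second, total watts) for a group of Xavier chips."""
    return chips * XAVIER_OPS_PER_SEC, chips * XAVIER_WATTS


def ops_per_watt(ops_per_sec: float, watts: float) -> float:
    """Operations per second delivered for each watt consumed."""
    return ops_per_sec / watts


ops, watts = cluster(50)
xavier_eff = ops_per_watt(ops, watts)

# Conventional petaflop machine: 1e15 FLOP/s at 100 to 500 kW (article's figures).
# Caveat: low-precision deep learning ops are not the same unit as the
# double-precision FLOPs supercomputers are rated on.
best_conventional = ops_per_watt(1e15, 100e3)
worst_conventional = ops_per_watt(1e15, 500e3)

print(f"50 chips: {ops:.0e} ops/s at {watts:.0f} W")
print(f"Ops-per-watt advantage: {xavier_eff / best_conventional:.0f}x to "
      f"{xavier_eff / worst_conventional:.0f}x")
```

On these numbers, 50 chips reach one petaOP at one kilowatt, roughly 100 to 500 times more operations per watt than the conventional machines cited, with the precision caveat above.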

Nvidia is renowned for the GPUs that power photo-realistic computer graphics (CG). Now those GPUs also run deep learning algorithms. Sure, we can have autonomous cars, but I wonder what a machine running both AI and CG could do. In any case, I want and expect a Xavier in my smartphone soon.
