The incredible rise of the GPU

Guest author Rakesh Malik tells a short history of Intel and how graphics processing units (GPUs) came to steal the thunder of the giant chip maker.

In the early days, Intel's 8086 and 8088 processors were fairly primitive compared to their contemporaries. Their main advantage was cost, but Intel was far from the powerhouse that it is now.

As the market for personal computers grew, so did Intel; like Microsoft, Intel made its processors available to computer manufacturers other than IBM. Eventually, Intel grew large enough to buy back its stock from IBM and go its own way.

Compared to RISC machines costing tens of thousands of dollars, the 386 was anemic when it came to performance; it didn't even include hardware floating point support. The options were to implement floating point math in software using the integer arithmetic unit, which was slow, or to buy an 80387 floating point co-processor, which was expensive.

Fabricating leadership

In the years since then, Intel has continued to grow, not only in market share but also in technical leadership. Modern x86 processors have the advantage of the most advanced fabrication technology in the world, and extremely advanced architectures to go with it.

As a result, x86 processors now dominate personal computers, workstations, servers, gaming consoles, and even Apple's Macs. Most of Intel's competitors in the x86 market have failed, unable to keep up with Intel's fabrication technology or performance. Intel's vast economies of scale have let it wage price wars against competitors, keeping them down long enough for Intel to retake the lead. Even in AMD's heyday, when the Athlon 64 was at its peak, AMD barely made a dent in Intel's sales or profit margins.

Even the ARM family, which sells in quantities so vast that they dwarf the entire x86 market, has so far had next to no impact on Intel's margins.

And yet, Intel's margins are starting to diminish.

GPU free-for-all...

The first graphics adapters were little more than frame buffers with hardware optimized for window operations. They basically copied blocks of memory from one place to another so that the host processor didn't have to. They gradually gained features like hardware cursors and double buffering, and eventually affordable hardware implementations of Gouraud shading, pioneered by Matrox, made fast, interactive 3D graphics possible on personal computers.
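To make the Gouraud idea concrete, here is a minimal Python sketch, not any vendor's actual implementation. It assumes lighting has already produced one color per vertex; every pixel inside the triangle then receives a blend of those three colors, weighted by the pixel's barycentric coordinates. The names `edge` and `gouraud_fill` are hypothetical, and period hardware interpolated incrementally along scanlines rather than evaluating every pixel independently as this loop does.

```python
"""Minimal Gouraud-shading sketch: interpolate per-vertex colors across a triangle."""

from typing import List, Optional, Tuple

Vec2 = Tuple[float, float]
Color = Tuple[float, float, float]


def edge(a: Vec2, b: Vec2, p: Vec2) -> float:
    """Twice the signed area of triangle (a, b, p); the sign says which side of ab p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def gouraud_fill(
    verts: Tuple[Vec2, Vec2, Vec2],
    colors: Tuple[Color, Color, Color],
    width: int,
    height: int,
) -> List[List[Optional[Color]]]:
    """Rasterize one triangle into a width x height grid.

    Pixels outside the triangle stay None; pixels inside get the
    barycentric blend of the three vertex colors.
    """
    v0, v1, v2 = verts
    area = edge(v0, v1, v2)
    if area == 0:
        raise ValueError("degenerate triangle")
    image: List[List[Optional[Color]]] = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            # Barycentric weights: each is the sub-triangle area opposite one vertex,
            # divided by the full area (which also normalizes the winding sign).
            w0 = edge(v1, v2, p) / area
            w1 = edge(v2, v0, p) / area
            w2 = edge(v0, v1, p) / area
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                # zip(*colors) pairs up the red, green, and blue channels of the three vertices.
                image[y][x] = tuple(
                    w0 * c0 + w1 * c1 + w2 * c2 for c0, c1, c2 in zip(*colors)
                )
    return image
```

For example, `gouraud_fill(((0, 0), (8, 0), (0, 8)), ((1, 0, 0), (0, 1, 0), (0, 0, 1)), 8, 8)` shades a triangle whose corners are pure red, green, and blue; the pixel nearest the red corner comes out as `(0.875, 0.0625, 0.0625)`, and pixels outside the triangle stay `None`.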

3D games drove the market for 3D graphics accelerators in personal computers, and the resulting profits funded increasingly advanced graphics capabilities. For a time, it was a free-for-all, with companies developing graphics processors and proprietary software development kits to go with them. 3D software had to be custom developed for each graphics processor, so portability wasn't an option. Few game developers could support every option available, so they picked the ones that made their games look and perform best.

Consumers suffered the most. A graphics card that was great for Tomb Raider might not run Quake at all.

Direct3D to the rescue

The graphics library free-for-all ended when Microsoft released Direct3D. When SGI hit a very difficult period in its history and nearly went bankrupt, nVidia successfully recruited some of the SGI developers who had designed IrisGL, the library from which OpenGL grew. The expertise nVidia gained let it get a jump on OpenGL support in Windows, which helped the 3D graphics industry start migrating to the x86 platform.

Once the combination of increasingly capable host processors, robust OpenGL drivers, and fast graphics processors overtook SGI's vaunted workstations at a fraction of the price, it didn't take long for SGI to lose almost all of the graphics market.

The writing was on the wall for the RISC workstations.

That was the era in which small visual effects shops like Foundation Imaging could produce the visual effects for CG-intensive television series like Babylon 5 on Amigas using Video Toasters, and, not much later, Zoic, with only a handful of animators, provided the visual effects for the Dune and Children of Dune miniseries using LightWave on inexpensive personal computers.

Throwing shade

A major characteristic of this time was that although the graphics processors were becoming very powerful, they were also very limited. The final renders still relied almost entirely on the host processor for computing power, and software dedicated primarily to video editing couldn't take much advantage of the graphics hardware at all.

The next evolution in graphics hardware was to add programmable shader engines. These allowed programmers to write small programs that ran on the GPU and performed moderately complex shading operations on each polygon in a 3D scene, pixel by pixel. Vertex computations remained the purview of dedicated vertex processors, which at the time weren't programmable.
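The essence of a shader is a small function that the pipeline calls once per fragment (roughly, per pixel of a polygon), with no call depending on any other. The Python sketch below illustrates that model under stated assumptions: `lambert_shader` is a hypothetical stand-in for a shader program implementing simple Lambertian (diffuse) lighting, and `run_shader` is a hypothetical stand-in for the hardware that feeds fragments to it. Real shaders were written in GPU shading languages, not Python.

```python
"""Sketch of the shader model: one small function, run independently for every fragment."""

import math
from typing import Callable, Iterable, List, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return (v[0] / length, v[1] / length, v[2] / length)


def lambert_shader(normal: Vec3, base_color: Vec3, light_dir: Vec3) -> Vec3:
    """Hypothetical 'fragment shader': diffuse (Lambertian) lighting for one fragment."""
    n = normalize(normal)
    l = normalize(light_dir)
    # Brightness follows the cosine of the angle between the surface normal and the
    # light direction, clamped at zero so surfaces facing away from the light are black.
    intensity = max(0.0, n[0] * l[0] + n[1] * l[1] + n[2] * l[2])
    return (base_color[0] * intensity, base_color[1] * intensity, base_color[2] * intensity)


def run_shader(
    shader: Callable[[Vec3, Vec3, Vec3], Vec3],
    fragments: Iterable[Tuple[Vec3, Vec3]],
    light_dir: Vec3,
) -> List[Vec3]:
    """Apply the same shader to every (normal, color) fragment. Because no call depends
    on another, hardware is free to run many of them at the same time."""
    return [shader(normal, color, light_dir) for normal, color in fragments]
```

The independence between calls is the design point: it is what later let GPU designers scale performance simply by adding more shader engines.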

The first shader engines were very limited, but that didn't last long. ATI and nVidia launched into an arms race, adding increasingly sophisticated memory infrastructure and ever more flexible shader engines.

These shader engines were relatively simple. They didn't need sophisticated memory controllers, file system access, thread management, and so on; that functionality was built into an on-chip controller. Because each processor was small, GPU designers could fit a great many of them onto a single GPU.

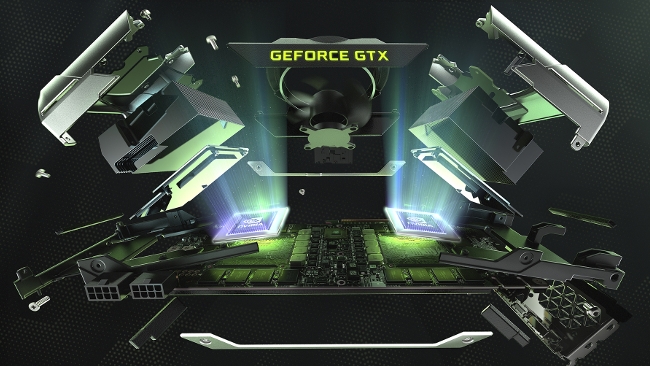

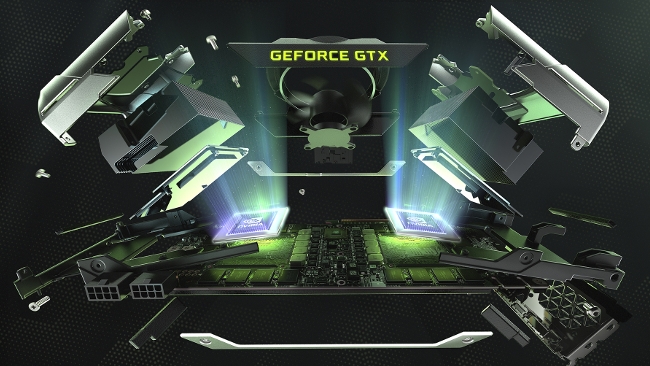

As the shader engines grew more sophisticated, they also became more flexible. Eventually, shader and vertex processors merged into large collections of essentially identical processors.

Then things started to get interesting.

GPUs as processors

Because the compute engines on GPUs could now handle the entire graphics pipeline, it became possible to render displacement mapping in real time. Eventually, GPUs grew sophisticated enough to run real-time physics as well: Havok developed a GPU-accelerated version of its physics engine, and the open-source Bullet physics engine was also ported to the GPU.

At that point, developers were treating GPUs as co-processors. Languages like CUDA, OpenCL, the OpenGL Shading Language (GLSL), and DirectX's High-Level Shading Language (HLSL) let programmers write GPU programs in high-level code rather than for one chip's instruction set, and CUDA and OpenCL removed the graphics-specific limitations.

Now, GPUs had become essentially compute co-processors for personal computers.
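The co-processor programming model that CUDA and OpenCL expose can be sketched in a few lines: the programmer writes a kernel that handles one data element, and the GPU launches it across a grid of thread blocks, with each thread deriving its element from its block and thread indices. The Python below emulates that model sequentially for SAXPY (`out = a*x + y`). The index formula mirrors CUDA's `blockIdx.x * blockDim.x + threadIdx.x`, but `saxpy_kernel`, `launch`, and `saxpy` are hypothetical helpers, not part of any GPU API.

```python
"""Sequential emulation of the GPU compute model: one kernel, many threads, one element each."""

from typing import Callable, List


def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 a: float, x: List[float], y: List[float], out: List[float]) -> None:
    """Body every 'thread' runs; mirrors a CUDA kernel computing out = a*x + y."""
    # Global element index, analogous to blockIdx.x * blockDim.x + threadIdx.x in CUDA.
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # the last block may contain threads past the end of the data
        out[i] = a * x[i] + y[i]


def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    """Hypothetical launcher that runs every (block, thread) pair one after another.
    A GPU would instead execute these threads concurrently across its many cores."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def saxpy(a: float, x: List[float], y: List[float], block_dim: int = 4) -> List[float]:
    """Host-side wrapper: allocate the output, size the grid, launch the kernel."""
    out = [0.0] * len(x)
    grid_dim = (len(x) + block_dim - 1) // block_dim  # round up so every element is covered
    launch(saxpy_kernel, grid_dim, block_dim, a, x, y, out)
    return out
```

For instance, `saxpy(2.0, [1, 2, 3, 4, 5], [10, 10, 10, 10, 10])` launches two blocks of four threads, three of which fall past the end of the data and do nothing, and returns `[12.0, 14.0, 16.0, 18.0, 20.0]`.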

Crunching the chip market

The limiting factor in gaming performance was no longer the host processor; now it was the GPU.

As a result, maintaining high prices on high-end x86 processors has become increasingly difficult, especially since thermal limits have kept clock speeds from rising significantly. Meanwhile, demand for higher-performance GPUs keeps growing.

Intel clearly sees this trend too, since it began developing a massively parallel computing platform based on stripped-down, optimized x86 processors. nVidia and ATI pack their GPUs with legions of processors and rely on programmers to write optimally parallel code, which most don't know how to do. Intel is relying on a smaller number of cores and an easier programming model to let programmers get higher performance with less effort. The jury is still out on how well this will work in practice, but the fact remains that even Intel is looking for new markets where it can command high margins, while its x86 host processor family still has high enough margins to keep Intel's revenue stream alive.
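Why does writing "optimally parallel code" matter so much when a chip has legions of processors? A standard way to quantify it is Amdahl's law, which the article itself does not cite: if a fraction p of a program's work can run in parallel on N cores and the rest stays serial, the best-case speedup is 1 / ((1 - p) + p / N). The Python function below simply evaluates that formula; `amdahl_speedup` is a hypothetical name, and the model ignores real-world costs such as memory bandwidth and synchronization.

```python
"""Amdahl's law: upper bound on speedup when only part of a program runs in parallel."""


def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup on `cores` processors when `parallel_fraction` of the work
    parallelizes perfectly and the remainder runs serially."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)
```

With p = 0.9, the formula gives roughly 7.8x on 32 cores but only about 9.9x on 1,000 cores, since the serial 10% caps the speedup below 10x. That is one way to see why massively parallel hardware rewards code that is almost entirely parallel, and why fewer cores with an easier programming model can be an attractive trade-off.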

Consumers are clearly gaining from this, in more ways than just more powerful computers, more beautiful and immersive games, 4K video editing, and the like. The trend is also making the x86 processor less central to the personal computer, opening the door for companies like nVidia to move upmarket with increasingly sophisticated ARM processor designs.
